As the name suggests, the Service Bus establishes a platform-agnostic messaging infrastructure. It provides a connectivity fabric that supports a variety of inter-service messaging frameworks and patterns.

Connectivity Fabric

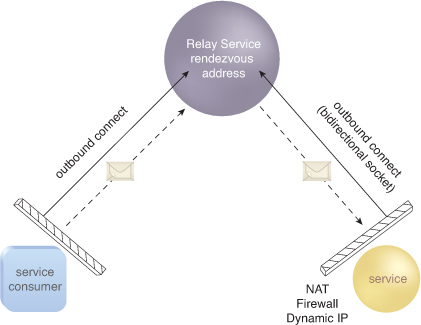

The Service Bus acts as a rendezvous destination on the Internet where services and their consumers can meet and interact. As shown in Figure 1, a service first connects to the Service Bus through an outbound port (in effect, projecting itself onto the Service Bus) and then establishes a bi-directional socket connection (via TCP or HTTP). This connection session is published on the Service Bus, making the service a discoverable endpoint at a particular rendezvous address.

A service consumer does the same, establishing a connection through an outbound port to interact with the service behind the rendezvous address. Once these network connections are established, the Service Bus acts as an intermediary and relays messages between the service and the consumer program. The consumer is unaware of the service’s actual location; it only needs to know the rendezvous address that was published and made discoverable on the Service Bus.
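
The relayed model can be illustrated with a small in-memory simulation. This is a minimal sketch, not the Service Bus API: the `RendezvousRelay` class, its `listen` and `send` methods, and the address `contoso/orders` are hypothetical. It shows the property described above: the consumer supplies only the published rendezvous address, never the service's actual location.

```python
from typing import Callable, Dict

# A service handler takes a request message and returns a reply message.
Handler = Callable[[str], str]


class RendezvousRelay:
    """Hypothetical in-memory stand-in for a relay keyed by rendezvous address."""

    def __init__(self) -> None:
        # Published rendezvous address -> handler of the service connected there.
        self._listeners: Dict[str, Handler] = {}

    def listen(self, address: str, handler: Handler) -> None:
        # The service connects outbound and publishes itself at an address.
        if address in self._listeners:
            raise ValueError(f"address already in use: {address}")
        self._listeners[address] = handler

    def send(self, address: str, message: str) -> str:
        # The consumer supplies only the address; the relay forwards the
        # message to whichever service is listening there and returns the reply.
        handler = self._listeners.get(address)
        if handler is None:
            raise LookupError(f"no service listening at {address}")
        return handler(message)


relay = RendezvousRelay()
# Where the service really runs (host, port, NAT) never appears in the consumer's call.
relay.listen("contoso/orders", lambda msg: f"received: {msg}")
print(relay.send("contoso/orders", "order 42"))  # received: order 42
```

In the real system the relay also holds the network connections open on both sides; the sketch models only the address-based forwarding.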

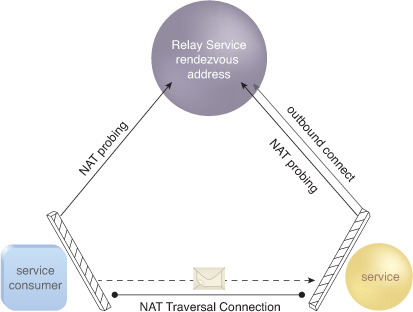

The Service Bus also provides a hybrid connectivity mode, which enables a direct, peer-to-peer connection between services and consumers. In this case, the Service Bus acts as a matchmaker, using a mutual port prediction algorithm based on probing information from the services and consumer programs currently connected to it.

If a direct connection is possible, the Service Bus provides both the services and the consumers with the connection details they need; they then attempt to establish a direct connection that bypasses the Service Bus for cross-service interactions. Once these programs are communicating directly, the Service Bus is no longer involved. Direct connections can offer improved performance and throughput, and they are established transparently to the communicating programs (Figure 2).
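
The text does not describe the Service Bus's actual port prediction algorithm. Purely to illustrate the general idea, the sketch below implements a simple, commonly described NAT-traversal heuristic instead: if probing shows that a NAT assigns external ports in a constant step, the next ports are extrapolated from that step. The function `predict_next_ports` and the probe values are hypothetical, and real NATs are often less predictable.

```python
from typing import List, Optional

MAX_PORT = 65535


def predict_next_ports(observed: List[int], count: int = 3) -> Optional[List[int]]:
    """Extrapolate a peer's next external ports from ports seen during probing.

    Returns None when the observed ports do not change by a constant step,
    or when the prediction would leave the valid port range; in that case
    the peers would keep communicating through the relay.
    """
    if len(observed) < 2:
        return None
    steps = {later - earlier for earlier, later in zip(observed, observed[1:])}
    if len(steps) != 1:
        return None  # allocation pattern is not predictable by this heuristic
    step = steps.pop()
    predicted = [observed[-1] + step * i for i in range(1, count + 1)]
    if not all(0 < port <= MAX_PORT for port in predicted):
        return None
    return predicted


# Hypothetical probe results: a NAT that hands out every second port.
print(predict_next_ports([40001, 40003, 40005]))  # [40007, 40009, 40011]
# Irregular allocations: no prediction, so stay on the relayed connection.
print(predict_next_ports([40001, 40950, 40002]))  # None
```

Returning `None` rather than guessing mirrors the fallback behavior the text describes: when a direct connection is not possible, traffic keeps flowing through the relay.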

With the outbound-rendezvous relayed connectivity model, services don’t need to accept inbound connections or make specific changes to an on-premise environment (such as opening inbound firewall ports), as long as the Service Bus is reachable from the service. This connectivity model is also found in other architectures, such as instant messaging services, voice-over-IP systems, and peer-to-peer file sharing services.

This connectivity fabric can be used to support many inter-service messaging patterns, including:

- bi-directional messaging
- request-response
- one-way unicast
- multicast messaging
- publish-subscribe
- asynchronous messaging
- buffered delivery

Message Buffers

The relayed and hybrid connectivity models rely on WCF relay bindings. If either the consumer or the service is not using WCF, then all communication needs to be routed through the Service Bus itself. For this case, the Service Bus offers message buffers, which hold messages for asynchronous retrieval and support open interoperability with any program capable of making REST calls over HTTP(S).

Service Bus message buffers provide a data structure with first-in, first-out (FIFO) semantics. Messages are held in a buffer until the intended subscriber retrieves them. A message buffer allows a service (acting as the service provider) to connect to the Service Bus periodically and poll asynchronously for incoming messages through simple HTTP(S) requests, rather than through persistent TCP connections. Similarly, a service consumer can asynchronously publish messages via HTTP(S) to the rendezvous address within the Service Bus namespace and later return to poll for any expected response messages.
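
To make the FIFO and polling semantics concrete, here is a minimal in-memory sketch. It does not call the Service Bus; the `MessageBuffer` class, its `publish` and `poll` methods, and the `max_messages` parameter are hypothetical stand-ins for the HTTP(S) requests a publisher and a polling service would issue. Messages wait in the buffer until the receiver retrieves them and come out in the order they were published.

```python
from collections import deque
from typing import Deque, List


class MessageBuffer:
    """Hypothetical in-memory model of a FIFO message buffer."""

    def __init__(self) -> None:
        self._messages: Deque[str] = deque()

    def publish(self, message: str) -> None:
        # A sender appends to the tail (an HTTP(S) request in the real system).
        self._messages.append(message)

    def poll(self, max_messages: int = 10) -> List[str]:
        # A receiver that connects periodically removes up to max_messages
        # from the head, so messages come out in publication order.
        batch: List[str] = []
        while self._messages and len(batch) < max_messages:
            batch.append(self._messages.popleft())
        return batch


buffer = MessageBuffer()
for text in ("first", "second", "third"):
    buffer.publish(text)
print(buffer.poll(max_messages=2))  # ['first', 'second']
print(buffer.poll())                # ['third']
print(buffer.poll())                # []
```

A `deque` is used because both appending at the tail and removing from the head are constant-time operations.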

The subscribing service controls the message buffer’s lifetime, and when a message buffer expires, it disappears. When a subscribing service creates a new message buffer, it uses a message buffer policy to specify how long the buffer can live after the last time it was polled for messages. This period can range from 1 to 10 minutes. Every time the service polls the buffer, the timer is reset, but once it counts down to zero, the Service Bus deletes the buffer and everything in it, regardless of whether active publishers are still pushing messages to the buffer.
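
The expiration rule can be sketched with an injectable clock so the timing is easy to follow. This is a hypothetical model, not the real message buffer policy API: `ExpiringBuffer`, `lifetime_seconds`, and `clock` are illustrative names, and starting the countdown when the buffer is created is an assumption. Only polling resets the countdown; publishing does not.

```python
from collections import deque
from typing import Callable, Deque, List, Optional


class ExpiringBuffer:
    """Hypothetical model of a buffer whose lifetime is renewed by each poll."""

    MIN_LIFETIME = 60.0   # 1 minute, in seconds
    MAX_LIFETIME = 600.0  # 10 minutes, in seconds

    def __init__(self, lifetime_seconds: float, clock: Callable[[], float]) -> None:
        if not self.MIN_LIFETIME <= lifetime_seconds <= self.MAX_LIFETIME:
            raise ValueError("lifetime must be between 1 and 10 minutes")
        self._lifetime = lifetime_seconds
        self._clock = clock
        self._last_polled = clock()  # assumption: the countdown starts at creation
        self._messages: Deque[str] = deque()

    def expired(self) -> bool:
        return self._clock() - self._last_polled >= self._lifetime

    def publish(self, message: str) -> bool:
        # Publishing never extends the lifetime. An expired buffer is gone,
        # so the message is rejected and any remaining messages are discarded.
        if self.expired():
            self._messages.clear()
            return False
        self._messages.append(message)
        return True

    def poll(self) -> Optional[List[str]]:
        # None means the buffer expired and was deleted with its messages;
        # otherwise the buffer is drained and its countdown restarts.
        if self.expired():
            self._messages.clear()
            return None
        self._last_polled = self._clock()
        batch = list(self._messages)
        self._messages.clear()
        return batch


# A fake clock (in seconds) makes the timing easy to follow.
now = [0.0]
buf = ExpiringBuffer(lifetime_seconds=120, clock=lambda: now[0])
buf.publish("a")
now[0] = 100
print(buf.poll())  # ['a'] (countdown restarts at t=100)
now[0] = 200
print(buf.publish("b"))  # True: only 100 s since the last poll
now[0] = 221
print(buf.poll())  # None: 121 s without a poll, so the buffer was deleted
```

Passing the clock in as a function keeps the sketch deterministic; a real client would simply use wall-clock time.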

A limited lifespan is one characteristic that distinguishes a message buffer from a message queue. Another distinction pertains to durability. If a node hosting a non-empty queue were to crash, the messages in it would still be available after the node was brought back up. Service Bus message buffers offer no such guarantee: if a node goes down, all message data is lost, although the buffer’s metadata remains. When the message buffer is brought back online, it is empty.
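
The durability difference can be modeled by separating what a node keeps in volatile memory from what it writes to durable storage. This is a toy model under stated assumptions, not how the Service Bus is implemented: the `Node` class, its fields, and the entity names are hypothetical. Both entities survive the restart through their metadata, but only the queue gets its messages back.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Node:
    """Hypothetical host node with volatile memory and durable storage."""

    metadata: Dict[str, str] = field(default_factory=dict)      # entity name -> "queue" or "buffer"
    memory: Dict[str, List[str]] = field(default_factory=dict)  # lost on a crash
    disk: Dict[str, List[str]] = field(default_factory=dict)    # survives a crash

    def create(self, name: str, kind: str) -> None:
        self.metadata[name] = kind
        self.memory[name] = []
        if kind == "queue":
            self.disk[name] = []

    def enqueue(self, name: str, message: str) -> None:
        self.memory[name].append(message)
        if self.metadata[name] == "queue":
            self.disk[name].append(message)  # queues also write messages durably

    def crash_and_restart(self) -> None:
        # Memory is wiped. Every entity is recreated from its metadata, but only
        # queues get their messages back, because only they were written to disk.
        self.memory = {name: list(self.disk.get(name, [])) for name in self.metadata}

    def contents(self, name: str) -> List[str]:
        return self.memory[name]


node = Node()
node.create("orders-queue", "queue")
node.create("orders-buffer", "buffer")
for entity in ("orders-queue", "orders-buffer"):
    node.enqueue(entity, "order 1")
node.crash_and_restart()
print(node.contents("orders-queue"))   # ['order 1']
print(node.contents("orders-buffer"))  # [] (the buffer exists again, but empty)
```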

It can be helpful to view a message buffer as a short-term cache between endpoints, or even as a cloud-based projection of an application’s (the receiver’s) TCP buffer.

Message buffers have specific usage quotas, as listed in Table 1.

Table 1. Usage quotas for message buffers.

| Quota Name | Quota Value |
|---|---|
| Number of simultaneous connections (senders) open per service namespace | 100 |
| Number of simultaneous listeners per service namespace | 25 |
| Number of operations per second (does not apply to NetTcp bindings) | 10,000 |
| Number of message buffers per solution namespace | 1,000 |
| Maximum message size (in a message buffer) | 64 KB |
| Total capacity of a single message buffer | 3 MB |
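
A sender can check the two size quotas from Table 1 before publishing. The sketch below is a hypothetical client-side helper (`can_enqueue` is not part of any Service Bus SDK). It assumes 1 KB = 1024 bytes and 1 MB = 1024 KB and counts only payload bytes, ignoring any protocol overhead the service might add.

```python
# Limits from Table 1, assuming 1 KB = 1024 bytes and 1 MB = 1024 KB.
MAX_MESSAGE_BYTES = 64 * 1024
MAX_BUFFER_BYTES = 3 * 1024 * 1024


def can_enqueue(current_buffer_bytes: int, message: bytes) -> bool:
    """Check a message against the per-message and per-buffer size quotas.

    Only payload bytes are counted here; any protocol overhead is ignored.
    """
    size = len(message)
    if size > MAX_MESSAGE_BYTES:
        return False
    return current_buffer_bytes + size <= MAX_BUFFER_BYTES


# A completely full buffer holds at most this many maximum-size messages.
print(MAX_BUFFER_BYTES // MAX_MESSAGE_BYTES)  # 48
print(can_enqueue(0, b"x" * MAX_MESSAGE_BYTES))  # True
print(can_enqueue(0, b"x" * (MAX_MESSAGE_BYTES + 1)))  # False: message too large
print(can_enqueue(MAX_BUFFER_BYTES - 10, b"x" * 11))  # False: buffer would overflow
```

Under these assumptions, 3 MB / 64 KB = 48, so a single buffer fills up after 48 maximum-size messages that have not yet been retrieved.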

Service Registry

The Service Bus provides a service registry for publishing and discovering service endpoint references using a structured naming system. It exposes a namespace’s endpoints through a linked tree of Atom feeds, so service consumers can browse the registry by issuing HTTP GET requests to base addresses. The service endpoints published in the service registry use a global forest of hierarchical namespaces that are independent of DNS and of the underlying transport.

Service Bus names are projected onto URIs in the following format:

`<serviceNamespace>.servicebus.windows.net/<name>/<name>/...`
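
Building an address in this format amounts to joining the namespace host and the name segments. The helper below is a hypothetical sketch: `service_bus_address` and the `scheme` parameter are illustrative, and the default `https` scheme is an assumption, since the format itself specifies none. Each segment is percent-encoded so a name containing `/` cannot silently add a level to the hierarchy.

```python
from urllib.parse import quote


def service_bus_address(namespace: str, *names: str, scheme: str = "https") -> str:
    """Project a hierarchical name onto a URI of the form
    <serviceNamespace>.servicebus.windows.net/<name>/<name>/...

    The scheme is an assumption; the format itself does not specify one.
    """
    if not namespace:
        raise ValueError("a service namespace is required")
    # Percent-encode each name so that a '/' inside a name cannot
    # silently create an extra level in the hierarchy.
    path = "/".join(quote(name, safe="") for name in names)
    return f"{scheme}://{namespace}.servicebus.windows.net/{path}"


print(service_bus_address("contoso", "sales", "orders"))
# https://contoso.servicebus.windows.net/sales/orders
print(service_bus_address("contoso"))
# https://contoso.servicebus.windows.net/
```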

At its core, the Service Bus namespace is a federated, hierarchical service registry whose structure is dictated and owned by “the project.” The difference between the Service Bus namespace and a “classic” service registry system (such as DNS, UDDI, or LDAP) is that services and messaging primitives are not merely referenced by the registry. Instead, they are projected directly into it, so that consumers can interact with the registry, and with the services and messaging primitives projected into it, using similar or identical programming interfaces within the scope of a unified namespace.

In other words, this naming system provides a logical abstraction over the physical details of the services that project their endpoints onto the Service Bus. For example, a given solution’s namespace can contain names that represent services implemented by multiple project teams, built on any platform, and deployed in separate locations (on-premise or cloud-based).
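
The logical abstraction can be pictured as a tree of names whose leaves carry physical endpoint details that consumers never need to see. This toy model does not reproduce the registry's Atom-feed interface: `NamespaceRegistry`, `publish`, `browse`, and `resolve` are hypothetical names. Here `browse` plays the role of a GET against a base address, listing the names one level down, and the endpoint strings are made-up examples.

```python
from typing import Dict, List, Optional


class RegistryNode:
    """One level of a hypothetical hierarchical namespace."""

    def __init__(self) -> None:
        self.children: Dict[str, "RegistryNode"] = {}
        self.endpoint: Optional[str] = None  # physical details, known only to the registry


def split_path(path: str) -> List[str]:
    # "sales/orders/" -> ["sales", "orders"]; empty segments are ignored.
    return [name for name in path.split("/") if name]


class NamespaceRegistry:
    def __init__(self) -> None:
        self._root = RegistryNode()

    def publish(self, path: str, endpoint: str) -> None:
        names = split_path(path)
        if not names:
            raise ValueError("cannot publish at the namespace root")
        node = self._root
        for name in names:  # create intermediate levels on demand
            node = node.children.setdefault(name, RegistryNode())
        node.endpoint = endpoint

    def browse(self, path: str = "") -> List[str]:
        # Names one level below `path`, similar to browsing one level of the registry.
        node = self._find(path)
        return sorted(node.children) if node else []

    def resolve(self, path: str) -> Optional[str]:
        node = self._find(path)
        return node.endpoint if node else None

    def _find(self, path: str) -> Optional[RegistryNode]:
        node = self._root
        for name in split_path(path):
            child = node.children.get(name)
            if child is None:
                return None
            node = child
        return node


registry = NamespaceRegistry()
# Hypothetical services from different teams, platforms, and locations.
registry.publish("sales/orders", "team A, on-premise")
registry.publish("sales/invoices", "team B, cloud-based")
registry.publish("hr/payroll", "team C, on-premise")
print(registry.browse())                   # ['hr', 'sales']
print(registry.browse("sales"))            # ['invoices', 'orders']
print(registry.resolve("sales/invoices"))  # team B, cloud-based
```

Consumers work only with paths such as `sales/invoices`; moving that service from the cloud to an on-premise host would change only the endpoint stored at that leaf.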