Tuning service runtime performance improves the utilization of individual services as well as the performance of the service compositions that aggregate these services. Even though it is important to optimize every service architecture, agnostic services in particular need to be carefully tuned to maximize their potential for reuse and recomposition.

Because the logic within a service is made up of the collective logic of its service capabilities, we need to begin by focusing on performance optimization at the service capability level.

In this section we will explore several approaches for reducing the duration of service capability processing. The upcoming techniques specifically focus on avoiding redundant processing, minimizing idle time, minimizing concurrent access to shared resources, and optimizing the data transfer between service capabilities and service consumers.

Caching to Avoid Costly Processing

Let’s first look at the elimination of unnecessary processing inside a service capability.

Specifically, we’ll focus on:

avoidance of repeating calculations if the result doesn’t change

avoidance of costly database access if the data doesn’t change

developing a better-performing implementation of capability logic

delegating costly capability logic to specialized hardware solutions

avoidance of costly XML transformations by designing service contracts with canonical schemas

A common means of reducing

the quantity of processing is to avoid duplication of redundant

capabilities through caching. Instead of executing the same capability

twice, you simply store the results of the capability the first time and

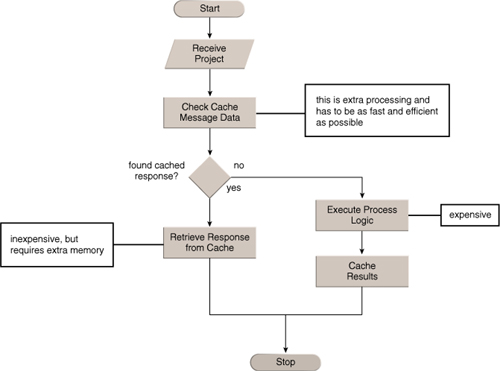

return the stored results the next time they are requested. Figure 1 shows a flow chart that illustrates a simple caching solution.
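The flow in Figure 1 can be sketched in a few lines. The sketch is written in Python for brevity rather than in a .NET language, and `CachingCapability` and `lookup_tax_rate` are hypothetical names. It assumes requests are hashable values and that results never change, so it has no expiration and no size limit.

```python
from typing import Any, Callable, Dict, Hashable


class CachingCapability:
    """Wraps a capability so that each distinct request is processed only once."""

    def __init__(self, capability: Callable[[Hashable], Any]) -> None:
        self._capability = capability
        self._results: Dict[Hashable, Any] = {}
        self.executions = 0  # how often the real capability logic actually ran

    def invoke(self, request: Hashable) -> Any:
        # Step 1: is a stored result already available for this request?
        if request in self._results:
            return self._results[request]  # cache hit: no processing needed
        # Step 2: cache miss, so execute the capability logic once...
        self.executions += 1
        result = self._capability(request)
        # Step 3: ...and store the result for the next identical request.
        self._results[request] = result
        return result


# Usage with a hypothetical costly capability:
def lookup_tax_rate(region: str) -> float:
    return {"EU": 0.20, "US": 0.07}[region]


service = CachingCapability(lookup_tax_rate)
service.invoke("EU")
service.invoke("EU")
service.invoke("US")
print(service.executions)  # 2: the second "EU" request was served from the cache
```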

For example, it doesn’t make sense to retrieve data from a database more than once if the data is known not to change (or at least known not to change frequently). Reading data from a database requires communication between the service logic and the database, and in many cases that communication even takes place over a network connection.

There is a lot of overhead just in setting up this type of communication, and then there’s the effort of assembling the query results in the database. You can avoid all of this processing by skipping database calls once the results have been retrieved. If the results change over time, you can still improve average performance by re-reading the data every 100 requests (or at whatever interval suits how often the data changes).
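A minimal sketch of the "re-read every 100 requests" idea, assuming a single-threaded caller. `PeriodicallyRefreshedValue` and `read_price_list` are hypothetical names, and the loader stands in for the database query. Between refreshes, consumers may see stale data for up to `refresh_every - 1` requests after the underlying data changes.

```python
from typing import Any, Callable


class PeriodicallyRefreshedValue:
    """Caches a costly read and repeats the read every `refresh_every` requests."""

    def __init__(self, load: Callable[[], Any], refresh_every: int = 100) -> None:
        if refresh_every < 1:
            raise ValueError("refresh_every must be at least 1")
        self._load = load  # stands in for the costly database query
        self._refresh_every = refresh_every
        self._value: Any = None
        self._loaded = False
        self._requests_since_load = 0

    def get(self) -> Any:
        # Read on the first request and again after every `refresh_every` requests.
        if not self._loaded or self._requests_since_load >= self._refresh_every:
            self._value = self._load()
            self._loaded = True
            self._requests_since_load = 0
        self._requests_since_load += 1
        return self._value


# Usage: a hypothetical read that returns a new "version" each time it runs.
reads = []


def read_price_list() -> int:
    reads.append(1)
    return len(reads)


prices = PeriodicallyRefreshedValue(read_price_list, refresh_every=100)
values = [prices.get() for _ in range(250)]
print(len(reads))  # 3: the data is read at requests 1, 101 and 201
```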

Caching can also be effective for expensive computations, data transformations or service invocations as long as:

results for a given input do not change or at least do not change frequently

delays before changed results become visible are acceptable

the number of computation results or database queries is limited

the same results are requested frequently

a local cache can be accessed faster than a remotely located database

computation of the cache key is not more expensive than computing the output

increased memory requirements due to large caches do not increase paging to disk (which slows down the overall throughput)

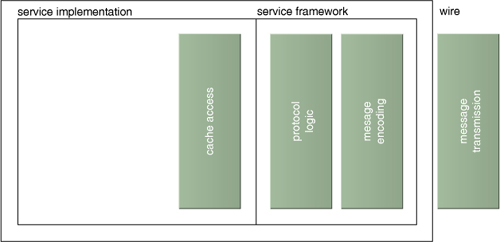

If your service capability meets these criteria, you can remove several blocks from the performance model and replace them with cache access, as shown in Figure 2.

To build a caching solution you can:

explicitly implement caching in the code of the service

intercept incoming messages before the capability logic is invoked

centralize the caching logic into a utility caching service

Each solution has its own strengths and weaknesses. For example, explicitly implementing caching logic inside a service capability allows you to custom-tailor this logic to that particular capability. In this case you can be selective about the cache expiration and refresh algorithms, or about which parameters make up the cache key. However, this approach can also be quite labor-intensive.
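To illustrate the custom-tailoring that explicit caching allows, here is a sketch of a hypothetical `get_profile` capability. Two choices are made by hand: the cache key deliberately excludes `correlation_id` (assumed to be a tracing value that does not affect the result), and entries expire after a fixed time-to-live. The clock is injectable only so that expiration can be demonstrated without waiting.

```python
import time
from typing import Any, Callable, Dict, Tuple

Profile = Dict[str, Any]


class CustomerProfileCapability:
    """Hypothetical capability with hand-tailored caching logic."""

    def __init__(self, fetch_profile: Callable[[str, str], Profile],
                 ttl_seconds: float = 300.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch_profile = fetch_profile  # stands in for a costly back-end call
        self._ttl = ttl_seconds
        self._clock = clock
        # cache key -> (expiry time, cached profile)
        self._cache: Dict[Tuple[str, str], Tuple[float, Profile]] = {}

    def get_profile(self, customer_id: str, locale: str, correlation_id: str) -> Profile:
        # Tailored key: correlation_id does not influence the result, so it is
        # left out; including it would turn every request into a cache miss.
        key = (customer_id, locale)
        now = self._clock()
        entry = self._cache.get(key)
        if entry is not None and now < entry[0]:
            return entry[1]  # entry still fresh: skip the back-end call
        profile = self._fetch_profile(customer_id, locale)
        self._cache[key] = (now + self._ttl, profile)  # tailored expiration
        return profile


# Usage with a fake clock so expiration can be shown without waiting.
fake_now = [0.0]
backend_calls = []


def fetch(customer_id: str, locale: str) -> Profile:
    backend_calls.append((customer_id, locale))
    return {"id": customer_id, "locale": locale}


capability = CustomerProfileCapability(fetch, ttl_seconds=10, clock=lambda: fake_now[0])
capability.get_profile("42", "en", correlation_id="req-1")
capability.get_profile("42", "en", correlation_id="req-2")  # hit despite new correlation_id
fake_now[0] = 11.0
capability.get_profile("42", "en", correlation_id="req-3")  # expired: back end called again
print(len(backend_calls))  # 2
```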

Intercepting messages, on the other hand, can be an efficient solution because messages for more than one service capability can be intercepted, potentially without changing the service implementation at all.

You can intercept messages in several different places:

Intermediary

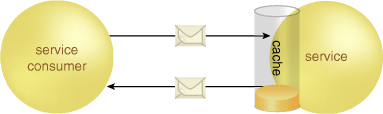

An intermediary between the service and the consumer can transparently intercept messages, inspect them to compute a cache key for the parameters, and then forward messages to the destination service only if no response for the request parameters is present in the cache (Figure 3). This approach relies on the application of the Service Agent pattern.
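A sketch of the intermediary’s logic, assuming messages arrive as simple dictionaries with an `operation` name and a `params` map. This message shape and the names `CachingIntermediary` and `quote_service` are hypothetical; a real intermediary would parse SOAP or REST requests. Sorting the parameters makes the key independent of their order.

```python
from typing import Any, Callable, Dict, Tuple

Message = Dict[str, Any]  # hypothetical shape: {"operation": str, "params": {str: value}}


class CachingIntermediary:
    """Sits between consumers and a destination service and answers repeat requests."""

    def __init__(self, forward: Callable[[Message], Any]) -> None:
        self._forward = forward  # sends a message on to the destination service
        self._responses: Dict[Tuple, Any] = {}

    @staticmethod
    def cache_key(message: Message) -> Tuple:
        # Operation name plus parameters in a canonical (sorted) order, so that
        # {"a": 1, "b": 2} and {"b": 2, "a": 1} map to the same cache entry.
        params = tuple(sorted(message["params"].items()))
        return (message["operation"], params)

    def handle(self, message: Message) -> Any:
        key = self.cache_key(message)
        if key in self._responses:
            return self._responses[key]  # answered without contacting the service
        response = self._forward(message)  # cache miss: extra hop to the service
        self._responses[key] = response
        return response


# Usage with a hypothetical destination service:
forwarded = []


def quote_service(message: Message) -> Dict[str, float]:
    forwarded.append(message)
    return {"price": 42.0}


intermediary = CachingIntermediary(quote_service)
intermediary.handle({"operation": "GetQuote", "params": {"symbol": "MSFT", "currency": "USD"}})
intermediary.handle({"operation": "GetQuote", "params": {"currency": "USD", "symbol": "MSFT"}})
print(len(forwarded))  # 1: both messages produced the same cache key
```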

Service Container

This is a variation of the previous technique, but here the cache lives inside the same container as the service, which avoids introducing the scalability bottleneck of an intermediary (Figure 4). Service frameworks such as ASMX and WCF allow messages to be intercepted with an HTTP Module (ASMX) or a custom channel (WCF).

Service Proxy

With WCF, we can build consumer-side custom channels that make the caching logic transparent to both service consumers and services. Figure 5 illustrates how the cache acts as a service proxy on the consumer side, before the request is sent to the service. Note that with this approach you will only realize significant performance benefits if the same consumer frequently requests the same data.

Caching Utility Service

An autonomous utility service (Figure 6) can be used to provide reusable caching logic, as per the Stateful Services pattern. For this technique to work, the performance savings of the caching logic need to outweigh the performance impact introduced by the extra utility service invocation and communication. This approach can be justified if autonomy and vendor neutrality are high design priorities.
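A rough break-even check for this trade-off, under simplifying assumptions: $h$ is the cache hit ratio, $t_u$ the time for one round trip to the caching utility service, and $t_p$ the time the capability needs without caching. The cost of storing a result in the cache after a miss is ignored.

```latex
\begin{aligned}
T_{\text{no cache}} &= t_p \\
T_{\text{utility}}  &= t_u + (1 - h)\, t_p \\
T_{\text{utility}} < T_{\text{no cache}} &\iff t_u < h\, t_p
\end{aligned}
```

For example, with $h = 0.8$ and $t_p = 50$ ms, the call to the utility service must take less than 40 ms before the cache yields any savings at all.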

Comparing Caching Techniques

Each option has its own trade-offs between potential performance increases and additional overhead. Table 1 provides a summary.

Table 1. The pros and cons of different service caching architectures.

| | intermediary | service container | service proxy | utility service |
|---|---|---|---|---|
| potential savings | medium: service invocation | medium: service invocation | high: service invocation; network access | low: service invocation |
| extra overhead | high: computing cache key; additional network hop for cache miss | medium: computing cache key; additional memory consumption on service | low: computing cache key; additional memory consumption on consumer | medium: computing cache key; additional memory consumption on service |
| efficiency | high: cache shared between all consumers | high: cache shared between all consumers | low: client-specific | high: cache shared between all consumers |
| change impact | none: intermediaries can be implemented without affecting existing services | low: server-side configuration file, not service implementation | high: client-side configuration file, not service implementation | high: service implementation |

Cache Implementation Technologies

When you decide on a caching architecture, keep in mind that server-side message interception still has a performance cost, because your service needs to compute a cache key for each request. Also, if the service ends up with an oversized cache, the cache itself can actually decrease performance (especially if multiple services run on a shared server).
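One way to keep a cache from becoming oversized is to cap it and evict the least recently used entries. The sketch below assumes an entry count is an acceptable proxy for memory use; production caches typically offer memory-based limits instead. `BoundedLRUCache` is a hypothetical name; in Python itself, `functools.lru_cache(maxsize=...)` applies the same policy to function results.

```python
from collections import OrderedDict
from typing import Any, Hashable


class BoundedLRUCache:
    """Keeps at most `max_items` entries, evicting the least recently used one."""

    def __init__(self, max_items: int) -> None:
        if max_items < 1:
            raise ValueError("max_items must be at least 1")
        self._max_items = max_items
        self._items: "OrderedDict[Hashable, Any]" = OrderedDict()

    def get(self, key: Hashable, default: Any = None) -> Any:
        if key not in self._items:
            return default
        self._items.move_to_end(key)  # mark as most recently used
        return self._items[key]

    def put(self, key: Hashable, value: Any) -> None:
        self._items[key] = value
        self._items.move_to_end(key)
        if len(self._items) > self._max_items:
            self._items.popitem(last=False)  # evict the least recently used entry

    def __len__(self) -> int:
        return len(self._items)


cache = BoundedLRUCache(max_items=2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")     # "a" becomes the most recently used entry
cache.put("c", 3)  # exceeds the limit, so "b" is evicted
print(cache.get("b"), cache.get("a"), len(cache))  # None 1 2
```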

The higher memory requirements of a service that caches data can lead to increased paging activity on the server as a whole. Modern 64-bit servers equipped with terabytes of memory can reduce paging activity and thus avoid the associated performance penalty. Hardware-assisted virtualization further enables you to partition hardware resources and to isolate services running on the same physical hardware from each other.

You can also leverage existing libraries, such as the System.Web.Caching namespace for Web applications. Solutions like System.Runtime.Caching in .NET 4.0 or the Caching Application Block from the Enterprise Library are available to all .NET-based services. These libraries include more specialized caching features, such as item expiration and cache scavenging. REST services hosted in WCF can leverage ASP.NET caching profiles for output caching and for controlling caching headers.

Furthermore, Windows Server AppFabric provides a distributed caching extension that offers a distributed, in-memory cache for the high-performance requirements of large-scale service processing. In particular, this extension addresses the following problems of distributed and partitioned caching:

storing cached data in memory across multiple servers to avoid costly database queries

synchronizing cache content across multiple caching nodes for low latency, high scale, and high availability

caching partitions for fast lookups and load balancing across multiple caching servers

local in-memory caching of cache subsets within services to reduce lookup times beyond the savings realized by optimizations on the caching tier
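The partitioning and local-cache ideas in this list can be illustrated with a small simulation. This is not the AppFabric API: `PartitionedCacheClient` is hypothetical, the "servers" are in-process dictionaries, and keys are assigned to nodes with a stable hash modulo the node count.

```python
import hashlib
from typing import Any, Dict, Optional

# Simulated caching tier: node name -> that node's in-memory store. In a real
# deployment each entry would be a separate caching server.
CacheTier = Dict[str, Dict[str, Any]]


class PartitionedCacheClient:
    """Hypothetical client that one service instance uses to reach the cache tier."""

    def __init__(self, tier: CacheTier) -> None:
        self._tier = tier
        self._node_names = list(tier)
        self._local: Dict[str, Any] = {}  # local in-memory subset of the cache
        self.remote_lookups = 0

    def node_for(self, key: str) -> str:
        # Stable hash so every service instance maps a key to the same node.
        # Python's built-in hash() of str is randomized per process, so a
        # cryptographic digest is used instead.
        digest = hashlib.sha256(key.encode("utf-8")).digest()
        index = int.from_bytes(digest[:8], "big") % len(self._node_names)
        return self._node_names[index]

    def put(self, key: str, value: Any) -> None:
        self._tier[self.node_for(key)][key] = value  # only the owning node stores it
        self._local[key] = value

    def get(self, key: str) -> Optional[Any]:
        if key in self._local:
            return self._local[key]  # served locally, no trip to the caching tier
        self.remote_lookups += 1
        value = self._tier[self.node_for(key)].get(key)
        if value is not None:
            self._local[key] = value  # keep a local copy for later lookups
        return value


# Usage: two service instances sharing a three-node caching tier.
tier: CacheTier = {"cache1": {}, "cache2": {}, "cache3": {}}
instance_a = PartitionedCacheClient(tier)
instance_b = PartitionedCacheClient(tier)
instance_a.put("customer:42", {"name": "Ann"})
instance_b.get("customer:42")  # remote lookup on the owning node
instance_b.get("customer:42")  # answered from instance_b's local copy
print(instance_b.remote_lookups)  # 1
```

Plain modulo placement remaps most keys when a node is added or removed, which is why consistent hashing is a common alternative. The local copies are also never invalidated in this sketch, so changes made on the caching tier become visible to other instances only with a delay.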

You also have several options for implementing the message interceptor. ASMX and WCF both offer extensibility points to intercept message processing before the service implementation is invoked. WCF even offers the same extensibility on the service consumer side. Table 2 lists the technology options for these caching architectures.

Table 2. Technology choices for implementing caching architectures.

| | interception | caching |
|---|---|---|
| intermediary | ASMX; WCF | Caching Application Block; .NET 4: System.Runtime.Caching; AppFabric |
| service container | ASMX: HTTP Module; WCF: Custom Channel | Caching Application Block; .NET 4: System.Runtime.Caching; AppFabric |
| service proxy | ASMX: Custom Proxy Class; WCF: Custom Channel | Caching Application Block; AppFabric; REST: System.Net.WebClient; REST: System.Net.HttpWebRequest; .NET 4: System.Runtime.Caching |
| utility service | none | Caching Application Block; System.Web.Caching; .NET 4: System.Runtime.Caching; AppFabric |

Computing Cache Keys

Let’s take a closer look at the moving parts that make up a typical caching solution. First, we need to compute the cache key from the request message to check whether we already have a matching response in the cache. Computing a generic key before the message has been deserialized is straightforward when:

the document format does not vary (for example, there is no optional content)

the messages are XML element-centric and don’t contain data in XML attributes or mixed content

the code is already working with XML documents (for example, as with XmlDocument, XmlNode or XPathNavigator objects)

the message design only passes reference data (not fully populated business documents)

the services expose RESTful endpoints where URL parameters or partial URLs contain all reference data

In these situations, you can implement a simple, generic cache key algorithm. For example, you can load the request into an XmlDocument object and get the request data by examining the InnerText property of the document’s root node. The danger here is that you could wind up with a very long and comprehensive cache key if your request message contains many data elements.
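A sketch of such a generic key in Python, using `xml.etree.ElementTree` in place of `XmlDocument`; joining the strings from `itertext()` approximates `InnerText`. Two adjustments go beyond the approach described in the text: a separator between text values (plain concatenation makes `<A>ab</A><B>c</B>` and `<A>a</A><B>bc</B>` indistinguishable) and a SHA-256 hash of the result, which keeps the key at a fixed length however many data elements the message contains. As the preconditions above require, attribute values are not part of the key.

```python
import hashlib
import xml.etree.ElementTree as ET

# U+001F is not a legal XML 1.0 character, so it cannot collide with message text.
SEPARATOR = "\x1f"


def generic_cache_key(request_xml: str) -> str:
    root = ET.fromstring(request_xml)
    # Collect the text of all elements in document order (roughly what
    # InnerText returns), skipping whitespace-only indentation.
    values = [text.strip() for text in root.itertext() if text.strip()]
    # Include the root element name so different message types never share a key.
    raw_key = SEPARATOR.join([root.tag, *values])
    # Hash the raw key so large messages still yield a short, fixed-length key.
    return hashlib.sha256(raw_key.encode("utf-8")).hexdigest()


compact = "<GetQuote><Symbol>MSFT</Symbol><Currency>USD</Currency></GetQuote>"
indented = """<GetQuote>
  <Symbol>MSFT</Symbol>
  <Currency>USD</Currency>
</GetQuote>"""
print(generic_cache_key(compact) == generic_cache_key(indented))  # True
```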

Computing a message type-specific cache key requires much more coding work, and you may have to embed code for each message type. For server-side caching with ASMX Web services, for example, you would add an HTTP Module to the request processing pipeline for the service call. Inside the custom module, you can then inspect the data items in the XML message content that uniquely identify a service request and, when a cached response exists, bypass the service call.
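A message type-specific key can be sketched with a per-type registry of the elements that identify a request. `KEY_FIELDS`, `typed_cache_key`, and the message types are hypothetical, and the sketch assumes XML without namespaces; real SOAP payloads would need namespace-qualified paths. Returning `None` signals that the interceptor should skip the cache and simply call the service.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List, Optional, Tuple

# Hypothetical registry: for each request message type (root element name),
# the child elements that uniquely identify a request. Anything else in the
# message, such as a client timestamp, is ignored when building the key.
KEY_FIELDS: Dict[str, List[str]] = {
    "GetCustomer": ["CustomerId"],
    "GetQuote": ["Symbol", "Currency"],
}


def typed_cache_key(request_xml: str) -> Optional[Tuple[str, ...]]:
    root = ET.fromstring(request_xml)
    fields = KEY_FIELDS.get(root.tag)
    if fields is None:
        return None  # message type not registered: do not cache
    values = [root.tag]
    for name in fields:
        element = root.find(name)
        if element is None or element.text is None:
            return None  # identifying data missing: safer not to cache
        values.append(element.text.strip())
    return tuple(values)  # a tuple key avoids delimiter collisions


first = typed_cache_key(
    "<GetQuote><Symbol>MSFT</Symbol><Currency>USD</Currency><SentAt>10:01</SentAt></GetQuote>")
second = typed_cache_key(
    "<GetQuote><SentAt>10:02</SentAt><Currency>USD</Currency><Symbol>MSFT</Symbol></GetQuote>")
print(first == second, first)  # True ('GetQuote', 'MSFT', 'USD')
```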

For client-side caching with ASMX, on the other hand, there is no transparent approach for adding caching logic. Custom proxy classes would have to perform all the caching-related processing. Depending on requirements and the number of service consumers, it might be easier to implement the caching logic in the service consumer’s code or to switch to WCF and add the caching logic transparently.

For WCF-based services, you would define a custom binding with a custom caching channel as part of the channel stack for either the service or the consumer. A custom channel lets you work with the Message object, which is often more convenient than programming against the raw XML message.