Orchestrations are the central place where the flow of business processes is controlled. You could say that the properties of an orchestration and its current point of execution constitute the state of a process instance. Therefore, it makes sense architecturally to manage process state in the orchestration layer, because doing so promotes the application of the Service Statelessness principle.

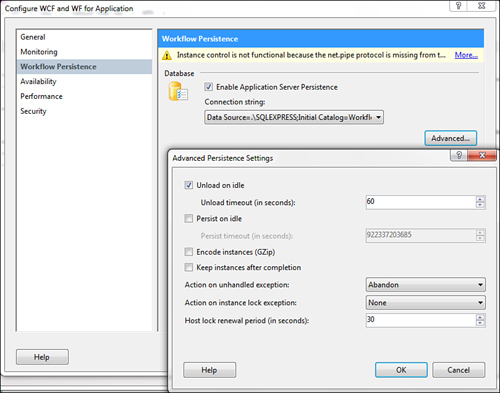

The WF runtime (Figure 1) implements State Repository through its persistence services. These in-memory services save workflow instances to persistent storage and unload them from memory when possible in order to free up server resources and improve scalability.

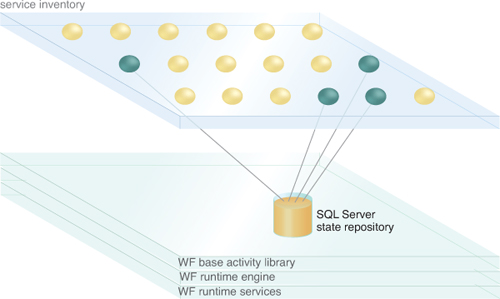

The workflow runtime engine can persist workflows into a database when the workflow goes idle (Figure 2), for example, when it's waiting for another message or a response from another Web service. The persistence operations are executed by a persistence service that plugs into an extensibility interface in the runtime engine.

WF 3.0 included several persistence services to customize state management and tailor it to different needs. For long-running processes and higher scalability requirements, you would likely configure workflows to store state in a SQL Server database with SqlWorkflowPersistenceService.

You would add the tables and stored procedures required by the SqlWorkflowPersistenceService by running the necessary SQL scripts. Next, you would attach a persistence service to the workflow runtime either in code or in the application's configuration file.

This example adds an SqlWorkflowPersistenceService to the runtime:

Example 1.

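    using System.Workflow.Runtime;
    using System.Workflow.Runtime.Hosting;

    // The service needs the connection string of the persistence database.
    string connectionString =
        "Data Source=localhost;Initial Catalog=SqlPersistenceService;Integrated Security=True";
    using (WorkflowRuntime workflowRuntime = new WorkflowRuntime())
    {
        // Register the SQL persistence service with the runtime.
        workflowRuntime.AddService(
            new SqlWorkflowPersistenceService(connectionString));
    }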


WF 4.0 expands the persistence functionality and evolves the API. The API abstraction is now called an InstanceStore, with a SQL Server-based InstanceStore implementation being part of the .NET framework.

InstanceStores are architected to be more scalable and flexible than WF 3.0 persistence solutions. Some of the benefits of InstanceStores are explicit management of instance ownership, explicit management of instance persistence, and a schema allowing for indexed queries of persisted instances. A Workflow Management Service manages timeouts and cleans out abandoned instances.

SQL Persistence Service and Scaling Out in WF 3.0

The built-in support for state management is suitable for intra-solution workflows or smaller-scale multi-user applications. These types of systems typically receive events only from a host application, not from multiple sources. Small-scale multi-user applications, such as individual Web services, mostly run on a single server, and any given workflow instance usually needs to interact with only one service consumer at a time. Neither application type relies on distributing workflows across multiple computers, which is why WF is a suitable candidate for building these types of orchestrations. Larger solutions often require running workflows on multiple servers for robustness and scalability reasons. Therefore, they need a state management mechanism that works across multiple machines.

The persistence service interface defines instance-locking parameters designed to support these types of distributed scenarios, where multiple distributed workflow engines can execute the same workflow instance. Instance locking is very important because, without it, two servers could process two different messages for the same persisted workflow. Both servers would load the workflow into memory and execute it, and it would be impossible to tell which of the two in-memory copies is valid.

The SqlWorkflowPersistenceService that ships with the .NET Framework for storing workflows in a SQL Server database supports locking by writing the ID of the runtime engine where the instance is currently executing into the database.
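The owner-ID locking idea can be illustrated with a minimal, self-contained sketch. It is not the SqlWorkflowPersistenceService implementation: the `InstanceLockTable` class and its method names are hypothetical, and an in-memory dictionary stands in for the owner ID that the real service writes to SQL Server.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for the owner column in the persistence database.
public sealed class InstanceLockTable
{
    // Maps a workflow instance ID to the ID of the runtime that owns it.
    private readonly Dictionary<Guid, Guid> owners = new Dictionary<Guid, Guid>();
    private readonly object gate = new object();

    // Returns true if runtimeId owns the instance after the call.
    public bool TryAcquire(Guid instanceId, Guid runtimeId)
    {
        lock (gate)
        {
            Guid current;
            if (owners.TryGetValue(instanceId, out current))
            {
                // Already locked: only the current owner may proceed.
                return current == runtimeId;
            }
            owners[instanceId] = runtimeId;
            return true;
        }
    }

    // Clears the lock, but only when runtimeId is the current owner.
    public bool Release(Guid instanceId, Guid runtimeId)
    {
        lock (gate)
        {
            Guid current;
            if (owners.TryGetValue(instanceId, out current) && current == runtimeId)
            {
                owners.Remove(instanceId);
                return true;
            }
            return false;
        }
    }
}

public static class LockDemo
{
    public static void Main()
    {
        var table = new InstanceLockTable();
        Guid instance = Guid.NewGuid();
        Guid serverA = Guid.NewGuid();
        Guid serverB = Guid.NewGuid();

        Console.WriteLine(table.TryAcquire(instance, serverA)); // True
        Console.WriteLine(table.TryAcquire(instance, serverB)); // False
        table.Release(instance, serverA);
        Console.WriteLine(table.TryAcquire(instance, serverB)); // True
    }
}
```

In this sketch the second server is turned away while the first one owns the instance, so only one in-memory copy of the workflow can exist at a time.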

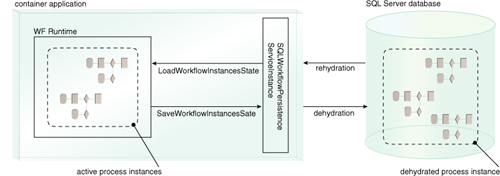

A scale-out feature can be architected by sharing a persistence database between multiple workflow servers. Workflows are not bound to a server, so each server can receive messages for any persisted workflow and then load and execute it, because all servers access the same persistence store. The database can be clustered or mirrored for mission-critical solutions to avoid a single point of failure in the overall service inventory architecture. Figure 3 shows this type of scale-out architecture for distributing load across multiple servers.

Let's look at the parameter list for the constructor of the SqlWorkflowPersistenceService a little more closely. There are several constructor overloads that can be used to customize the behavior of the SqlWorkflowPersistenceService. One in particular provides all the parameters necessary to handle distributed deployments:

Example 2.

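    using System;
    using System.Workflow.Runtime.Hosting;

    // Connection string for the shared persistence database.
    string connectionString =
        "Data Source=localhost;" +
        "Initial Catalog=SqlPersistenceService;" +
        "Integrated Security=True;" +
        "Pooling=False";
    // Persist and unload workflows when they go idle.
    bool unloadOnIdle = true;
    // How long an instance lock is held before it expires.
    TimeSpan ownershipDuration = TimeSpan.MaxValue;
    // Polling interval of the persistence service (two minutes).
    TimeSpan loadingInterval = new TimeSpan(0, 2, 0);

    SqlWorkflowPersistenceService persistenceService =
        new SqlWorkflowPersistenceService(
            connectionString, unloadOnIdle,
            ownershipDuration, loadingInterval);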


The most important parameter in a scale-out scenario is unloadOnIdle. You must set it to true so that the runtime engine persists the workflow while it's waiting to receive messages.

The SqlWorkflowPersistenceService locks an orchestration instance while it's actively running. If the service receives another message for a currently running instance, the attempt to load the locked instance fails and the WF runtime throws a WorkflowOwnershipException.

You can configure the persistence service to retry delivering the message when an instance is locked by setting the service's EnableRetries property to true. The persistence service then retries loading the locked instance and delivering the message. However, if the workflow isn't in a state to process the message, it will throw an exception. Thus, retrying only works if the workflow is in the right state to process the message once the instance becomes available.

A Web server thread is also blocked while the persistence service is retrying to post the message. Therefore, carefully consider whether this setting is the right approach. A decoupled store-and-forward approach, for example, could be more scalable and robust because it avoids tying up server threads. However, this approach requires custom-building the store-and-forward and retry logic instead of relying on built-in functionality.

SQL Persistence Service and Scaling Out in WF 4.0

WF 4.0 InstanceStores are designed for distributed scale-out scenarios. InstanceStores and the Workflow Management Service handle not only instance locking across different hosts, but also process management and the cleanup of abandoned instances.

An InstanceStore is associated with a workflow host (WorkflowApplication or WorkflowServiceHost) by assigning an InstanceStore object to the host's InstanceStore property, as shown here:

Example 3.

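    using System.Activities.DurableInstancing;
    using System.ServiceModel.Activities;

    // Service host: the store is set through DurableInstancingOptions.
    WorkflowServiceHost host = new WorkflowServiceHost(...);
    host.DurableInstancingOptions.InstanceStore =
        new SqlWorkflowInstanceStore(...);

– or –

    using System.Activities;
    using System.Activities.DurableInstancing;

    // Application host: the store is set directly on the host object.
    WorkflowApplication app = new WorkflowApplication(...);
    app.InstanceStore = new SqlWorkflowInstanceStore(...);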


When hosting a workflow in IIS/WAS, where the WorkflowServiceHost is not directly accessible, the InstanceStore can also be configured as a serviceBehavior in the service’s Web.Config file.

Example 4.

    <serviceBehaviors>
      <behavior>
        ...
        <sqlWorkflowInstanceStore
          connectionString="Data Source=.\SQLEXPRESS;Initial Catalog=WorkflowInstanceStore;Integrated Security=True;Async=true"
          instanceEncodingOption="None"
          instanceCompletionAction="DeleteAll"
          instanceLockedExceptionAction="AggressiveRetry"
          hostLockRenewalPeriod="00:00:30">
        </sqlWorkflowInstanceStore>
      </behavior>
    </serviceBehaviors>


The configuration options of the SQL InstanceStore are similar to those of the persistence service in WF 3.0, but offer additional customization of storage format, clean-up, and retry behavior. The instanceEncodingOption controls the storage format. If the option is set to GZip, instances are stored in gzip format to save space in the database.

The instanceCompletionAction, instanceLockedExceptionAction, and hostLockRenewalPeriod help with InstanceStore maintenance. The instanceCompletionAction selects between deleting instances when a workflow completes and keeping them around, for example, to archive later. The instanceLockedExceptionAction specifies what happens when the service tries to load a workflow instance that is currently owned by another host.

Hosts lock instances while they are loaded and release the lock when an instance is unloaded during idle periods. In load-balanced set-ups, multiple hosts may receive requests for a specific workflow instance while that instance is still loaded in a different host. If the instanceLockedExceptionAction property is set to BasicRetry or AggressiveRetry, the host will retry loading a locked instance instead of returning a fault to the caller. The two options differ in the algorithm used to determine the time interval between retry attempts.

The hostLockRenewalPeriod is a safeguard against crashed host processes. A simple boolean flag that indicates a lock does not allow for a fail-over scenario in which a new host loads instances that were owned by a crashed host. With the renewal-based locking in WF 4.0, hosts have to actively renew their locks. Instances can be loaded into other hosts or garbage collected by the Workflow Management Service if locks don't get renewed before they expire.
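To see why renewal-based locks permit fail-over where a boolean flag does not, consider the following self-contained sketch. It is an illustration only, not the SqlWorkflowInstanceStore implementation: the `LeaseTable` class, its method, and the 30-second lease length are hypothetical, and the clock is passed in explicitly so the behavior is deterministic.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical lease table: a lock is valid only until its expiry time.
public sealed class LeaseTable
{
    private struct Lease
    {
        public Guid Host;
        public DateTime ExpiresUtc;
    }

    private readonly Dictionary<Guid, Lease> leases = new Dictionary<Guid, Lease>();
    private readonly TimeSpan leaseDuration;

    public LeaseTable(TimeSpan leaseDuration)
    {
        this.leaseDuration = leaseDuration;
    }

    // Succeeds if the instance is unowned, its lease has expired,
    // or hostId already holds the lease (in which case it is renewed).
    // Not thread-safe; a real store would do this check in the database.
    public bool TryAcquireOrRenew(Guid instanceId, Guid hostId, DateTime nowUtc)
    {
        Lease lease;
        bool held = leases.TryGetValue(instanceId, out lease)
                    && lease.ExpiresUtc > nowUtc;
        if (held && lease.Host != hostId)
        {
            return false; // Another host holds a lease that is still valid.
        }
        leases[instanceId] = new Lease
        {
            Host = hostId,
            ExpiresUtc = nowUtc + leaseDuration
        };
        return true;
    }
}

public static class LeaseDemo
{
    public static void Main()
    {
        var table = new LeaseTable(TimeSpan.FromSeconds(30));
        Guid instance = Guid.NewGuid();
        Guid hostA = Guid.NewGuid();
        Guid hostB = Guid.NewGuid();
        DateTime t0 = new DateTime(2020, 1, 1, 0, 0, 0, DateTimeKind.Utc);

        Console.WriteLine(table.TryAcquireOrRenew(instance, hostA, t0));                // True
        Console.WriteLine(table.TryAcquireOrRenew(instance, hostB, t0.AddSeconds(10))); // False
        Console.WriteLine(table.TryAcquireOrRenew(instance, hostA, t0.AddSeconds(20))); // True
        // Host A crashes here and stops renewing; its lease runs out at t0 + 50s.
        Console.WriteLine(table.TryAcquireOrRenew(instance, hostB, t0.AddSeconds(40))); // False
        Console.WriteLine(table.TryAcquireOrRenew(instance, hostB, t0.AddSeconds(51))); // True
    }
}
```

With a plain boolean flag, the last call could never succeed, because nothing would ever clear the flag set by the crashed host.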

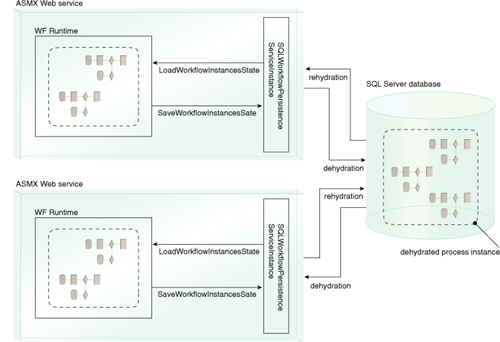

The SqlWorkflowInstanceStore class exposes properties to set these options in code. When workflow services are hosted with the AppFabric extensions installed, these options can also be managed through the IIS management UI, as shown in Figure 4.
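As a rough sketch of the code-based alternative, the settings from Example 4 could be applied through the corresponding SqlWorkflowInstanceStore properties. The `InstanceStoreFactory` helper and its `Create` method are hypothetical names introduced for illustration; the project is assumed to reference the System.Activities.DurableInstancing assembly.

```csharp
using System;
using System.Activities.DurableInstancing;

// Hypothetical helper that mirrors the settings from Example 4 in code.
public static class InstanceStoreFactory
{
    public static SqlWorkflowInstanceStore Create(string connectionString)
    {
        return new SqlWorkflowInstanceStore(connectionString)
        {
            // Store instances uncompressed.
            InstanceEncodingOption = InstanceEncodingOption.None,
            // Delete instance data once the workflow completes.
            InstanceCompletionAction = InstanceCompletionAction.DeleteAll,
            // Retry loading when another host holds the lock.
            InstanceLockedExceptionAction = InstanceLockedExceptionAction.AggressiveRetry,
            // Renew the host's lock every 30 seconds.
            HostLockRenewalPeriod = TimeSpan.FromSeconds(30)
        };
    }
}
```

The returned store can then be assigned to a host's InstanceStore property in the same way as in Example 3.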