The team structure outlined previously gives the basics for forming and managing a large group of developers. It also allows the team to scale outward or inward as new requirements are introduced or features move out of scope. The key takeaways from this model are the following:

Break the solution down into functional groups or subsystems. Assign and manage deliverables based on these groupings.

For projects that require common infrastructure, create a separate team that is responsible for creating and managing it. Ensure that the design for any common components is well defined and used by the other functional teams.

Assign developers to either a functional team or the common infrastructure team. Assigning developers to more than one team is often problematic because it forces them to split their time across multiple deliverables.

Rotate developers across teams when deliverables are complete. This encourages cross-group collaboration and reduces "knowledge silos."

Encourage a regular build cycle. This helps keep the project on track and gives team members regular code check-in dates that must be met.

On a BizTalk development project, these concepts become even more important. Most BizTalk architects do not take the time necessary to determine how to structure the application so that it can be built using a model like the one defined earlier. Likewise, very few map out what common infrastructure will be needed, or which types of artifacts are "feature specific" and which are common infrastructure. The following exercise illustrates this.

List the features needed to implement the solution in this exercise scenario. Then list the common components that each subsystem will require.

Scenario:

ABC Company, Inc., is creating a new solution using BizTalk Server. The system is an order fulfillment application that will receive order information from the public web site, a retail POS (point of sale) system, and a custom bulk order solution that is used by large customers. Only customers in good standing are eligible for automatic fulfillment, and at present the project is piloting only customers in four geographic regions. If a customer does not meet the requirements for automatic fulfillment, the order is rejected and manually fulfilled. For orders that can be auto-fulfilled, the solution must first check the stock availability for each product against an SAP ERP system using a custom API. If stock is not available, it must decide whether the order can be split into multiple shipments and, if so, fulfill each shipment separately. If the order cannot be split-shipped, it must be rejected and processed manually. If an order can be fulfilled, the solution must update the billing and shipping systems appropriately. The shipping system is a legacy mainframe-based application that requires custom code to be executed to properly authenticate and send transactions to it.

Possible Solution:

In this scenario, the most logical approach is to separate the solution based on the requirements. There are three key features of this solution:

Order taking from external sources (POS, web site, and bulk orders)

Stock checking and rules associated with split shipments

Updating downstream systems

Each subsystem will have its own pieces and artifacts; however, all of them will need to use the following types of core components:

Access to customer information

Common schema to define customer information

Standard way to get access to that customer information

Executing customer rules associated with automatic fulfillment

Coordination to ensure that the fulfillment process is handled in the proper order

Order rejection subsystem

Standard way to process exceptions and errors
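
As a rough illustration of the customer-rule component in the list above, the following Python sketch checks eligibility for automatic fulfillment and routes ineligible orders to rejection. The names `Customer`, `PILOT_REGIONS`, `is_eligible_for_auto_fulfillment`, and `route_order`, as well as the region codes, are hypothetical; the scenario states only that a customer must be in good standing and in one of four pilot regions. In an actual BizTalk solution, rules like these could be hosted in the Business Rules Engine or an orchestration rather than written this way.

```python
from dataclasses import dataclass

# Hypothetical region codes: the scenario says four regions are piloted
# but does not name them.
PILOT_REGIONS = frozenset({"REGION_A", "REGION_B", "REGION_C", "REGION_D"})


@dataclass(frozen=True)
class Customer:
    """Assumed minimal customer shape; not the book's schema."""
    customer_id: str
    in_good_standing: bool
    region: str


def is_eligible_for_auto_fulfillment(customer: Customer) -> bool:
    """Return True only for customers in good standing in a pilot region."""
    return customer.in_good_standing and customer.region in PILOT_REGIONS


def route_order(customer: Customer) -> str:
    """Pick the path an order takes; ineligible orders are rejected
    and handed off for manual fulfillment."""
    if is_eligible_for_auto_fulfillment(customer):
        return "auto-fulfill"
    return "reject-for-manual-fulfillment"
```

Keeping the rule in one shared function means every feature team gets the same answer for the same customer, which is the point of treating it as common infrastructure.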

In this scenario, the solution can be implemented using the architecture in Figure 1.

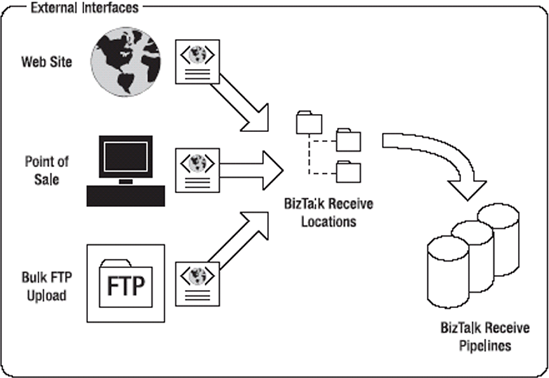

Feature 1: External Interfaces

This subsystem is responsible for receiving inbound messages from the three external interfaces. Here, the external interfaces can be XML messages, flat-file messages, or custom inbound API calls. In any case, this subsystem will need to parse the inbound document and transform it to a common schema that represents an "order" within this solution. This order schema will be used by all other subsystems. In the case of the bulk upload, the subsystem will be required to create individual orders from the entire payload of messages stored within the order file.

This scenario can be implemented using receive ports within BizTalk along with several receive locations. Each receive location will define a custom receive pipeline if the document needs to be examined and/or disassembled before being processed. The port would then have different BizTalk transformations assigned to it so that it can map each inbound system's order schema to the common order schema that is used by all subsystems.
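
The idea of mapping each inbound format to one common order, and splitting a bulk file into individual orders, can be sketched outside BizTalk as follows. This is only an illustration in Python: the `Order` shape, the payload layouts, and the function names are all assumptions, since the text does not define the actual schemas. In BizTalk itself this work is done by pipelines and maps, not by code like this.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Order:
    """Hypothetical common order shape shared by all subsystems."""
    source: str
    customer_id: str
    items: Dict[str, int]  # product code -> quantity


def from_web(msg: dict) -> List[Order]:
    # Assumed web payload: {"customer": ..., "lines": [{"sku": ..., "qty": ...}]}
    items = {line["sku"]: line["qty"] for line in msg["lines"]}
    return [Order("web", msg["customer"], items)]


def from_pos(msg: dict) -> List[Order]:
    # Assumed POS payload: {"cust_id": ..., "products": {sku: qty}}
    return [Order("pos", msg["cust_id"], dict(msg["products"]))]


def from_bulk(msg: dict) -> List[Order]:
    # Assumed bulk payload: {"orders": [{"customer": ..., "items": {sku: qty}}]}
    # One bulk file becomes one common order per entry.
    return [Order("bulk", o["customer"], dict(o["items"])) for o in msg["orders"]]


# One mapper per external interface, playing the role of a per-source map.
MAPPERS: Dict[str, Callable[[dict], List[Order]]] = {
    "web": from_web,
    "pos": from_pos,
    "bulk": from_bulk,
}


def receive(source: str, msg: dict) -> List[Order]:
    """Normalize an inbound message into a list of common orders."""
    try:
        mapper = MAPPERS[source]
    except KeyError:
        raise ValueError(f"unknown source: {source}") from None
    return mapper(msg)
```

Because every mapper returns the same `Order` type, the downstream subsystems never need to know which interface an order arrived on.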

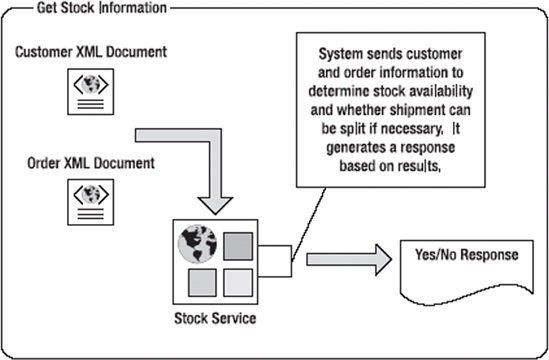

Feature 2: Check Stock and Associated Rules

The stock-checking subsystem will be responsible for calling the customer lookup system, as shown in Figure 2. It will also check whether or not the order can be fulfilled and return the response. Note that in this scenario, both the call to the order rejection system and the coordination of this process within the larger fulfillment process are left to the common infrastructure. This subsystem is responsible for determining whether an order can be fulfilled; it is not responsible for how or why this information is needed. This is often referred to as black-boxing a solution. It allows the subsystem to be built in isolation from any other subsystem. Only the integration points need to be defined before development starts: in this case, the schemas for an order and a customer, as well as the format of the response.
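
To make the black-box idea concrete, here is a minimal Python sketch of a stock check whose only contract is its inputs and its response. Everything named here is an assumption for illustration: `StockCheckResponse`, `check_stock`, the `allow_split` flag (the text does not say how the split-shipment decision is made), and `stock_lookup`, which stands in for the call to the SAP system.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass(frozen=True)
class StockCheckResponse:
    """Hypothetical response format agreed on before development starts."""
    can_fulfill: bool
    split_shipment: bool
    unavailable: List[str] = field(default_factory=list)


def check_stock(
    items: Dict[str, int],
    allow_split: bool,
    stock_lookup: Callable[[str], int],
) -> StockCheckResponse:
    """Report whether an order can be fulfilled; callers decide what to do next."""
    # Products whose requested quantity exceeds what stock_lookup reports.
    short = sorted(sku for sku, qty in items.items() if stock_lookup(sku) < qty)
    if not short:
        return StockCheckResponse(can_fulfill=True, split_shipment=False)
    if allow_split:
        # Fulfillable, but only as more than one shipment.
        return StockCheckResponse(True, True, short)
    return StockCheckResponse(False, False, short)
```

Note that the function neither rejects the order nor triggers the next step. It only answers the question, which leaves rejection and coordination to the common infrastructure, as described above.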

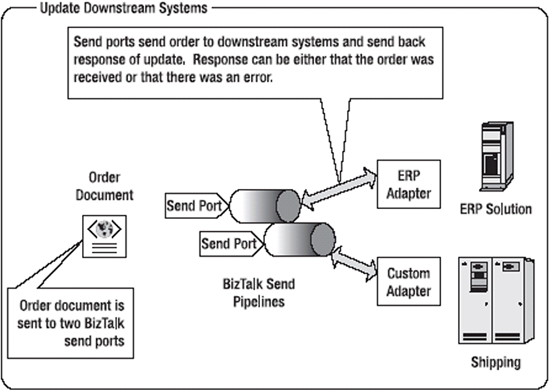

Feature 3: Update Downstream Systems

To update the downstream ERP and shipping systems, the validated order will need to be sent through a BizTalk send port using the appropriate adapter. This is demonstrated in Figure 3. The adapter to be used depends on which downstream system is going to be updated. In the case of the ERP system, if this were an SAP application, the port would use the SAP adapter. The same would hold true if it were an MQSeries queue: the port would use an MQSeries adapter. For the custom shipping solution, a custom adapter will need to be created if no off-the-shelf adapter is available. Note that in this system, the send pipelines are responsible for packaging the message into its appropriate format, adding any security information such as digital certificates, and encoding it properly so that the downstream system can read the order information. The send ports also return a response message that indicates whether or not the update was successful. What to do with that response message is the responsibility of the caller, not the pipeline, adapter, or send port.
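
The division of responsibility described here (the port sends and reports, the caller decides) can be sketched in Python as follows. This is an analogy only, not how BizTalk send ports or adapters are built: `SendResponse`, `Adapter`, and `send` are hypothetical names, and the UTF-8 encoding step merely stands in for the send pipeline.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class SendResponse:
    """Hypothetical response returned to the caller for every send."""
    system: str
    success: bool
    detail: str = ""


# An "adapter" here is just a callable that delivers an encoded message
# and reports success. This is an illustration, not a BizTalk adapter.
Adapter = Callable[[bytes], bool]


def send(system: str, order_xml: str, adapters: Dict[str, Adapter]) -> SendResponse:
    """Send an order to one downstream system and report the outcome."""
    adapter = adapters.get(system)
    if adapter is None:
        return SendResponse(system, False, "no adapter configured")
    payload = order_xml.encode("utf-8")  # stands in for the send pipeline
    try:
        ok = adapter(payload)
    except Exception as exc:
        # Report the failure; deciding what to do about it is the caller's job.
        return SendResponse(system, False, str(exc))
    return SendResponse(system, ok)
```

The function never retries, compensates, or rejects the order on failure. It returns a response and leaves that decision to whoever called it.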

These common subsystems also need to be built: