To manage crawls, you must understand the differences

between full and incremental crawls. A full crawl will follow the

instructions of the content source and the crawl rules to crawl the

entire content source according to the content type, whether

hierarchical, enumerated list, or link traversal. A full crawl will

replace the current index for that content source and give you a new

index. However, because some full crawls take many hours, the old index

for that content source will remain on the index and query servers to

meet query demand by your users and is only replaced after the full

crawl has successfully completed. This means that, for a brief length of

time, you’ll have two full indexes of the same content source existing

on your hard drives. Be sure you plan for enough disk space for

committing full crawls.

What is crawled during an

incremental crawl depends on the content type and how changes are

detected for that content type. For a file system crawl or normal Web

crawls, the date/time stamp is compared to a crawl history log. However,

for SharePoint incremental crawls, the change logs maintained in the

content databases are used. SharePoint 2010 now supports a very quick

ACL-only crawl to update security information for index items. Most

databases do not support incremental crawls. FAST technology supports

change notifications from SQL databases that essentially “push” changes

to the crawler, but the SharePoint 2010 Search feature does not.

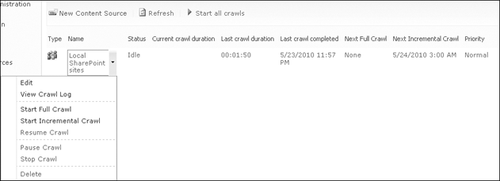

In the following sections, you’ll learn how to manage

crawls from the Manage Content Sources page shown in Figure 1, which presents the tools for managing crawls.

1. Global Crawl Management

Crawls for all content

sources can be managed globally with the toolbar option to Start All

Crawls, which changes to Stop All Crawls and Pause All Crawls after

crawls are started. The type of crawl initiated by the Start All Crawls

option depends on several factors.

It would follow the next crawl scheduled for each content source whether it is a full or incremental crawl.

If a crawl has been paused, then that crawl will be resumed.

If

no crawl is scheduled and a full crawl has been completed, then an

incremental crawl is started. However, remember that the first crawl of

any content source is always a full crawl.

If

either type of crawl has been stopped, the next crawl will always be a

full crawl. Therefore, careful consideration should be given to the

impact of using the Stop All Crawls tool.

The

indexing process can always force a full crawl if it determines that

enough errors exist in the index that an incremental crawl may not

correct them.

Note:

Although the crawl process

is read-only and does not modify the files, it will change the last read

date on some files, which can impact access auditing.

2. Content Source Crawl Management

The context menu of each content source presents crawl management tools. You can start both full and incremental crawls

from the context menu. You can also use the menu to pause, resume, or

stop an active crawl. Remember that any time a crawl is stopped or does

not complete for any reason, the next crawl of that content source will

be a full crawl, because the information in the crawl log and markers

set on the change logs are considered inaccurate. When a crawl is

paused, the instructions for the crawl and the information about the

crawl are retained in memory on the host of the crawl component for use

when the crawl is resumed.

3. User Crawl Management

SharePoint crawlers have always

obeyed “Do Not Crawl” instructions embedded in Web content. SharePoint

2010 continues to offer content owners of lists, libraries, and sites

the ability to add these instructions through the user interface and

eliminate their content from search indexes. Site collection

administrators can also flag site columns (metadata) to keep them from

being crawled. Personally identifiable information (PII) is an example

of information that should not be indexed on public sites. Be sure to

have clear policies regarding what type of content should or should not

appear in your index.

4. Scheduling Crawls

The management of crawl

schedules is an ongoing process that may require daily monitoring and

tweaking. The Manage Content Sources page presents information on the

duration of the current and last crawl but does not indicate the type of

crawl involved.

However, the Crawl History view

of the crawl logs itemizes each crawl’s start and end times with the

calculated duration as well as the activity accomplished during the

crawl. This information permits search administrators to adjust the

crawl schedules as the corpus grows so that a crawl can complete

successfully before the next crawl begins. Crawls must be scheduled as

often as needed to meet the “freshness” requirements of your

organization. You might need to adjust the topology of your search

service to add resources to complete crawls often enough to meet these

needs. When determining additional resources, consider the impact the

additions will have on the WFEs being crawled and on the SQL servers

hosting the content and search databases.

With the improvements

in incremental crawl instructions, you may only schedule full crawls

when required instead of on a regular basis. The crawl component can

itself switch to a full crawl if

A search application administrator stopped the previous crawl or the previous crawl did not complete for any reason.

A

content database was restored from backup without the appropriate

switch on the STSADM –restore operation that allows the farm

administrators to restore a content database without forcing a full

crawl.

A farm administrator has detached and reattached a content database.

A full crawl of the content source has never been done.

The

change log does not contain entries for the addresses that are being

crawled. Without entries in the change log for the items being crawled,

incremental crawls cannot occur.

Depending on the severity of the corruption, the index server might force a full crawl if corruption is detected in the index.

Finally, when is a full crawl required?

When a search application administrator added a new managed property.

To re-index ASPX pages on Windows SharePoint Services 3.0 or SharePoint Server 2007 sites.

Note:

Incremental crawls

do not re-index views or home pages when content within the page has

changed, such as the deletion of individual list items. This is because

of the inability of the crawler to detect when ASPX pages on SharePoint

sites have changed. You should periodically do full crawls of sites that

contain ASPX files to ensure that these pages are re-indexed unless you

have the site configured to not have ASPX pages crawled. This behavior

is the same as in previous versions of SharePoint.

To

resolve consecutive incremental crawl failures. The index server has

been reported to remove content that could not be accessed in 100

consecutive attempts.

When crawl rules have been added, deleted, or modified.

To repair a corrupted index.

When the search services administrator has created one or more server name mappings.

When

the account assigned to the default content access account or crawl

rule account has changed. This also automatically triggers a full crawl.

Account password changes do not require or trigger a full crawl.

When file types and/or iFilters have been installed and the new content needs to be indexed.