Insufficient RAM is a common cause of performance problems in SQL Server systems. Fortunately, RAM is both reasonably inexpensive and relatively easy to upgrade. There are, however, some important considerations when selecting and configuring server RAM, such as module capacity, fault tolerance, and memory latency on large, multi-CPU systems. In this section, we'll look at configuring a server's RAM slots and take a brief look at the NUMA architecture, which is used to derive maximum performance from large, multi-CPU systems.

1. Design for future RAM upgrades

When selecting and configuring RAM for SQL Server, you must consider the amount, type, and capacity of the chosen RAM. The server may later be used for system consolidation, or its exact RAM requirements may not be predictable. In either case it's important to allow for future memory upgrades, short of installing the maximum possible memory up front.

Virtualization addresses this issue nicely by making it easy to grant and revoke CPU and memory resources as the server's needs increase or decrease. On dedicated, nonvirtualized systems, this issue is typically addressed by using fewer, higher-capacity memory modules, leaving a number of slots free for future upgrades. This avoids a common problem: lower-capacity modules have to be removed and replaced when the server's RAM slots are full and more memory is required. Although initially more expensive, this approach provides flexibility for future requirements.

Finally, error-correcting code (ECC) RAM should be installed to give the system a degree of resilience against memory errors. ECC RAM is used by all the major system vendors and forms an important part of a fault-tolerant SQL Server system.

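Table 1 shows the maximum memory supported by each SQL Server 2008 edition and by each Windows Server 2008 edition. An entry of "OS max" means the SQL Server edition is limited only by the maximum memory the operating system supports.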

Table 1. Maximum memory for SQL Server 2008 editions

| SQL Server 2008 edition | Max memory, 32-bit | Max memory, 64-bit |
|---|---|---|
| Enterprise | OS max | OS max |
| Standard | OS max | OS max |
| Web | OS max | OS max |
| Workgroup | OS max | 4GB |
| Express | 1GB | 1GB |
| **Windows Server 2008 edition** | **Max memory, 32-bit** | **Max memory, 64-bit** |
| Datacenter | 64GB | 2TB |
| Enterprise | 64GB | 2TB |
| Standard | 4GB | 32GB |
| Web Server | 4GB | 32GB |
| Itanium | N/A | 2TB |
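To compare a particular server against the limits in Table 1, you can query the physical memory the instance sees and its configured memory ceiling. This is a minimal sketch. It assumes SQL Server 2008, where sys.dm_os_sys_info exposes physical_memory_in_bytes; later versions replace that column with physical_memory_kb.

```sql
-- Physical memory visible to the instance, in MB
-- (SQL Server 2008 column; SQL Server 2012 and later use physical_memory_kb)
SELECT physical_memory_in_bytes / 1048576 AS physical_memory_mb
FROM sys.dm_os_sys_info;

-- The memory ceiling currently configured for the instance
SELECT name, value_in_use
FROM sys.configurations
WHERE name = N'max server memory (MB)';
```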

Although memory is significantly faster than disk, a large multi-CPU system may still bottleneck on memory access. The NUMA architecture addresses this situation.

2. NUMA

As we mentioned earlier, advances in CPU clock speed have given way to a trend toward multiple cores per CPU die. That's not to say clock speeds won't increase in the future (they most certainly will). But we're now at the point where it has become increasingly difficult to fully utilize CPU clock speed because of the latency involved in accessing system RAM. On large multiprocessor systems where all CPUs share a common bus to the RAM, RAM access latency becomes more and more of an issue, effectively throttling CPU speed and limiting system scalability. A simplified example of this is shown in figure 1.

As we covered earlier, larger CPU caches reduce the frequency of trips out to system RAM. However, CPU cache size has practical limits, so caching only partially addresses the RAM latency issue.

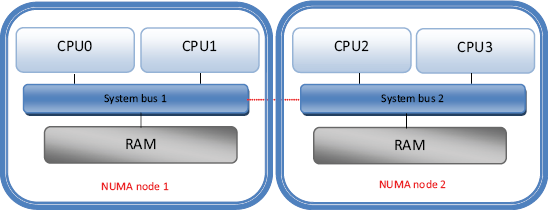

The non-uniform memory access (NUMA) architecture, fully supported by SQL Server, addresses this issue by grouping CPUs into NUMA nodes. Each node accesses its own RAM and, depending on the NUMA implementation, does so over its own I/O channel.

In contrast, the symmetric multiprocessor (SMP) architecture has no CPU/RAM segregation: all CPUs access the same RAM over the same shared memory bus. As the number of CPUs and their clock speeds increase, the SMP architecture reaches scalability limits that the NUMA architecture overcomes; a simplified example appears in figure 2.

While the NUMA architecture localizes RAM to groups of CPUs (NUMA nodes) over their own I/O channels, RAM belonging to other nodes is still accessible. Such memory is referred to as remote memory.

In the NUMA architecture, accessing remote memory is more costly (slower) than accessing local memory, and applications that aren't NUMA aware often perform poorly on NUMA systems. Fortunately, SQL Server is fully NUMA aware.

On large multi-CPU systems running multiple SQL Server instances, each instance can be bound to a group of CPUs and configured with a maximum memory value. In this way, SQL Server instances can be tailored to a particular NUMA node. This increases overall system performance by avoiding remote memory access while benefiting from high-speed local memory access.
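As a sketch of this approach, the following binds a hypothetical instance to a NUMA node assumed to own CPUs 4–7 and about 16GB of local RAM. The CPU numbers and the 12288MB cap are illustrative assumptions, not recommendations. The right values depend on the node layout of the actual server, and whether allocations stay local also depends on the hardware and operating system.

```sql
-- Hypothetical example: tailor an instance to a NUMA node that owns CPUs 4-7.
-- CPU numbers and the memory figure are assumptions for illustration only.
EXEC sp_configure 'show advanced options', 1;
RECONFIGURE;
GO

-- CPUs 4-7 -> binary 11110000 -> decimal 240 (0xF0)
EXEC sp_configure 'affinity mask', 240;
RECONFIGURE;
GO

-- Cap the instance below the node's local RAM (assumed here to be 16GB)
EXEC sp_configure 'max server memory (MB)', 12288;
RECONFIGURE;
GO
```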

Hardware NUMA

The NUMA architecture just described is known as hardware NUMA, also referred to as hard NUMA.

As the name suggests, servers using hardware NUMA are configured by the manufacturer with multiple system buses. Each bus is dedicated to a group of CPUs, which use it to access their own RAM allocation.

Some hardware vendors supply NUMA servers in interleaved NUMA mode, in which case the system appears to Windows and SQL Server as an SMP box. Interleaved NUMA suits applications that aren't NUMA optimized. For SQL Server systems, consider pure NUMA mode, where appropriate, to take advantage of SQL Server's NUMA optimizations. The sys.dm_os_memory_clerks Dynamic Management View (DMV) can be used to determine the NUMA mode:

```sql
-- Return the distinct memory node IDs used by the instance's memory clerks
SELECT DISTINCT memory_node_id
FROM sys.dm_os_memory_clerks
ORDER BY memory_node_id;
```

If node 0 is the only memory node returned by this query, the server may be configured in interleaved NUMA mode (or may not be NUMA hardware at all). Servers not configured for hardware NUMA (SMP servers) that contain lots of CPUs may benefit from software-based NUMA, or soft NUMA, which we'll look at next.
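As a cross-check on the memory clerk query, SQL Server 2008 and later also expose sys.dm_os_nodes, which reports each scheduling node alongside the memory node it draws on. Treat the following reading as a rule of thumb rather than a definitive test. Hardware NUMA nodes would normally each report their own memory_node_id, whereas soft NUMA nodes, which can't own RAM, would share one. A row for the dedicated administrator connection (DAC) may also appear.

```sql
-- One row per SQL Server node: node_id is the scheduling node,
-- memory_node_id is the memory node it allocates from
SELECT node_id,
       node_state_desc,
       memory_node_id,
       cpu_affinity_mask,
       online_scheduler_count
FROM sys.dm_os_nodes
ORDER BY node_id;
```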

Soft NUMA

Unlike hardware NUMA, soft NUMA can't isolate, or affinitize, RAM to groups of CPUs over dedicated buses. However, in some cases system performance may increase when soft NUMA is enabled.

On SMP systems without soft NUMA, each SQL Server instance has a single I/O thread and a single LazyWriter thread. Instances experiencing bottlenecks on these resources may benefit from configuring multiple NUMA nodes using soft NUMA, in which case each node receives its own I/O and LazyWriter threads.
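One way to observe this effect is to list the lazy writer background tasks. This sketch assumes they appear in sys.dm_exec_requests with the command value LAZY WRITER. A single row would be expected on an SMP instance without soft NUMA, and one row per node once soft NUMA is configured.

```sql
-- List lazy writer background tasks and the scheduler each runs on;
-- one row per node would be expected when multiple nodes are configured
SELECT session_id, command, scheduler_id
FROM sys.dm_exec_requests
WHERE command = N'LAZY WRITER';
```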

Soft NUMA in SQL Server

Configuring a SQL Server instance for soft NUMA is a two-step process. First, the instance is configured with CPU affinity. The following example configures an instance to use CPUs 0–3:

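```sql
-- Configure an instance to use CPUs 0-3
-- 'affinity mask' is an advanced option, so expose advanced options first
EXEC sp_configure 'show advanced options', 1;
RECONFIGURE;
GO

-- Bitmask with bits 0-3 set (binary 00001111), one bit per CPU
EXEC sp_configure 'affinity mask', 15;
RECONFIGURE;
GO
```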
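Rather than working out an affinity mask by hand, you can compute it from a list of CPU numbers and then confirm the value in effect. The @cpus table variable is a hypothetical helper used only for this illustration.

```sql
-- Build an affinity mask from a list of CPU numbers (here CPUs 0-3).
-- @cpus is a hypothetical helper table variable for illustration only.
DECLARE @cpus TABLE (cpu_id int PRIMARY KEY);
INSERT INTO @cpus (cpu_id) VALUES (0), (1), (2), (3);

-- Each CPU contributes 2^cpu_id; because the CPU numbers are distinct,
-- SUM gives the same result as a bitwise OR (15 for CPUs 0-3).
-- POWER returns int here, which is fine for small CPU numbers like these.
SELECT SUM(POWER(2, cpu_id)) AS affinity_mask
FROM @cpus;

-- Confirm the value now configured and in effect for the instance
SELECT name, value, value_in_use
FROM sys.configurations
WHERE name = N'affinity mask';
```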

The next step is to configure the NUMA nodes. This is done at the server level, so all the defined NUMA nodes are visible to all SQL Server instances on the server. A NUMA node is defined in the registry, along with its corresponding CPUs, by adding node keys to HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Microsoft SQL Server\100\NodeConfiguration.

Suppose we want to create two NUMA nodes for a given SQL Server instance. In our previous example, we used the affinity mask option to affinitize CPUs 0, 1, 2, and 3. To create two NUMA nodes on those four CPUs, we'd add the registry keys shown in table 2.

Table 2. Registry entries used to define NUMA nodes

| Key | Type | Name | Value |
|---|---|---|---|
| Node0 | DWORD | CPUMask | 0x03 |
| Node1 | DWORD | CPUMask | 0x0c |
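Before adding the keys in Table 2, you can sanity-check the masks with T-SQL's bitwise operators. This sketch assumes the intent is for the two nodes to split the affinitized CPUs with no overlap. The variable names are illustrative.

```sql
-- Masks from Table 2 (decimal equivalents of the registry values)
DECLARE @node0 int = 3;      -- 0x03: CPUs 0 and 1
DECLARE @node1 int = 12;     -- 0x0c: CPUs 2 and 3
DECLARE @affinity int = 15;  -- affinity mask set in the first step (CPUs 0-3)

SELECT @node0 & @node1 AS overlap,        -- 0 means no CPU is in both nodes
       @node0 | @node1 AS combined_mask,  -- 15 for these masks
       CASE WHEN (@node0 | @node1) = @affinity
            THEN 'nodes cover the affinitized CPUs'
            ELSE 'mismatch'
       END AS check_result;
```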

In this case, CPUs 0 and 1 would be used by the first NUMA node (Node 0), and CPUs 2 and 3 would be used by NUMA Node 1. The registry stores the hexadecimal equivalents of the binary bit masks: 0x03 (bit mask 00000011, decimal 3) for CPUs 0 and 1, and 0x0c (bit mask 00001100, decimal 12) for CPUs 2 and 3. In this example, the combination of CPU affinity and the registry modifications has provided a SQL Server instance with two soft NUMA nodes.
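The soft NUMA registry settings are read when the SQL Server service starts, so the new nodes appear only after a restart. Afterward, a query along these lines is one way to confirm the layout. With the Table 2 masks, CPUs 0 and 1 would be expected under node 0 and CPUs 2 and 3 under node 1.

```sql
-- After a service restart, list which CPUs each node's schedulers run on
SELECT parent_node_id, scheduler_id, cpu_id
FROM sys.dm_os_schedulers
WHERE status = N'VISIBLE ONLINE'
ORDER BY parent_node_id, cpu_id;
```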