Maintaining Windows Server 2008 R2 systems isn’t an easy task for administrators. Amid their firefighting efforts, they must find time to focus on and plan for maintenance of the server systems. When maintenance tasks are routine in an environment, they can alleviate many of the common firefighting tasks.

The processes and procedures for maintaining Windows Server 2008 R2 systems can be grouped by how often a particular aspect of Windows Server 2008 R2 needs attention. Some maintenance procedures require daily attention, whereas others might require only quarterly checkups. The maintenance processes and procedures that an organization follows depend on the organization; however, the categories described in the following sections and their corresponding procedures are best practices for organizations of all sizes and varying IT infrastructures.

Daily Maintenance

Certain maintenance procedures require more attention than others. The procedures that require the most attention are categorized as daily procedures, and an administrator should perform them each day to ensure system reliability, availability, performance, and security. These procedures are examined in the following three sections.

Checking Overall Server Functionality

Although checking the overall server health and functionality might seem redundant or elementary, this procedure is critical to keeping the system environment and users working productively.

Some questions that should be addressed during the checking and verification process are the following:

Can users access data on file servers?

Are printers printing properly? Are there long queues for certain printers?

Is there an exceptionally long wait to log on (that is, longer than normal)?

Can users access messaging systems?

Can users access external resources?
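Some of these questions can be partly answered with a script. The following Python sketch only tests whether a TCP port answers. That is a rough proxy: an open port on a file server does not prove that users can open their files. The host names, ports, and the `port_open` and `run_daily_checks` helpers are hypothetical; adapt them to your own servers.

```python
import socket


def port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name-resolution failures.
        return False


def run_daily_checks(checks):
    """Map each check name to True/False depending on whether its port answered.

    checks: {"check name": ("host", port), ...}
    """
    return {name: port_open(host, port) for name, (host, port) in checks.items()}


# Hypothetical host names and ports; replace them with your own servers.
DAILY_CHECKS = {
    "file server (SMB)": ("fs01.example.local", 445),
    "print server (SMB)": ("print01.example.local", 445),
    "messaging (SMTP)": ("mail01.example.local", 25),
    "external web access": ("www.example.com", 443),
}
```

Calling `run_daily_checks(DAILY_CHECKS)` each morning returns a dictionary of check names and True/False results that can be printed or logged.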

Verifying That Backups Are Successful

To provide a secure and fault-tolerant organization, it is imperative that a successful backup be performed each night. In the event of a server failure, the administrator might be required to perform a restore from tape. Without a nightly backup, the IT organization will be forced to rebuild the server without its data. Therefore, the administrator should always back up servers so that the IT organization can restore them with minimum downtime in the event of a disaster. Because backups are so important, the administrator’s first priority each day should be verifying and maintaining the backup sets.

If disaster ever strikes, administrators want to be confident that a system or an entire site can be recovered as quickly as possible. Successful backup mechanisms are imperative to the recovery operation; a recovery is only as good as the most recent backup.
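If nightly backups are written as files to a folder or share, part of the daily verification can be scripted. This minimal sketch assumes a hypothetical layout in which each backup job writes its files into one directory. It reports a failure if the newest file is missing, empty, or older than 24 hours. It does not replace checking the job status in the backup program itself.

```python
import time
from pathlib import Path
from typing import Optional, Tuple


def newest_file(backup_dir: Path) -> Optional[Path]:
    """Return the most recently modified file in backup_dir, or None."""
    files = [p for p in backup_dir.iterdir() if p.is_file()]
    return max(files, key=lambda p: p.stat().st_mtime, default=None)


def verify_nightly_backup(backup_dir, max_age_hours: float = 24.0,
                          now: Optional[float] = None) -> Tuple[bool, str]:
    """Check that the newest backup file exists, is non-empty, and is recent."""
    now = time.time() if now is None else now
    latest = newest_file(Path(backup_dir))
    if latest is None:
        return False, "no backup files found"
    info = latest.stat()
    age_hours = (now - info.st_mtime) / 3600
    if info.st_size == 0:
        return False, f"{latest.name} is empty"
    if age_hours > max_age_hours:
        return False, f"{latest.name} is {age_hours:.1f} hours old"
    return True, f"{latest.name}: {info.st_size} bytes, {age_hours:.1f} hours old"
```

Pointing `verify_nightly_backup` at a hypothetical share such as `\\backup01\nightly\fs01` returns an `(ok, message)` pair that can be logged or emailed as part of the morning checks.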

Monitoring Event Viewer

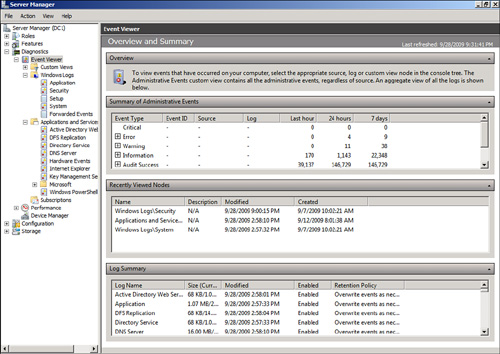

Event Viewer is used to check the system, security, application, and other logs on a local or remote system. These logs are an invaluable source of information regarding the system. The Event Viewer Overview and Summary page in Server Manager is shown in Figure 1.

Note

Checking these logs often helps you understand them. Some events appear constantly but aren’t significant. As events begin to look familiar, you will notice when something is new or amiss in your event logs.

Event Viewer categorizes events by level, such as critical, error, warning, and informational. Some best practices for monitoring event logs include the following:

Understanding the events that are being reported

Setting up a database for archived event logs

Archiving event logs frequently

To simplify monitoring the hundreds or thousands of events generated each day, the administrator should use the filtering mechanism provided in Event Viewer. Although warnings and errors should take priority, informational events should be reviewed to track what was happening before the problem occurred. After reviewing the informational events, the administrator can filter them out and view only the warnings and errors.

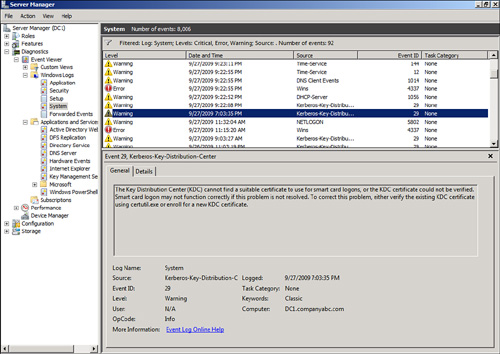

To filter events, do the following:

1. Expand the Event Viewer folder in Server Manager.

2. Select the log from which you want to filter events.

3. Right-click the log and select Filter Current Log.

4. In the Filter Current Log dialog box, select the event levels to display. In this case, select the Critical, Error, and Warning check boxes.

5. Click OK when you’re done.
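The same Critical, Error, and Warning filter can be applied from the command line with the built-in wevtutil tool. Its `qe` (query events) command accepts an XPath query through `/q:`. In that query, Level 1 is Critical, 2 is Error, 3 is Warning, and 4 is Information. The Python wrapper below is a sketch: the helper names are hypothetical, and `query_events` runs only on Windows, where wevtutil.exe is available.

```python
import subprocess

# Event levels as they appear in the System section of each event.
LEVELS = {"critical": 1, "error": 2, "warning": 3, "information": 4, "verbose": 5}


def build_level_query(levels):
    """Build an XPath event query that matches the given level names."""
    clauses = " or ".join(f"Level={LEVELS[name.lower()]}" for name in levels)
    return f"*[System[({clauses})]]"


def wevtutil_query_args(log="System", levels=("critical", "error", "warning"), count=50):
    """Arguments for 'wevtutil qe': newest events first (/rd:true), as text."""
    return ["wevtutil", "qe", log, f"/q:{build_level_query(levels)}",
            f"/c:{count}", "/rd:true", "/f:text"]


def query_events(**kwargs):
    """Run the query and return wevtutil's text output (Windows only)."""
    result = subprocess.run(wevtutil_query_args(**kwargs),
                            capture_output=True, text=True, check=True)
    return result.stdout
```

Calling `query_events(log="System", count=20)` on the server returns the 20 most recent critical, error, and warning events from the System log as text.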

Figure 2 shows the results of filtering the system log. The log contains a total of 8,006 events, and the message above the log notes the filter and the 92 events that remain. The filter reduced the events by a factor of more than 80 to 1, which greatly reduces the volume of data that an administrator needs to review.

Some warnings and errors are normal because of bandwidth constraints or other environmental issues. The more you monitor the logs, the more familiar you will become with the messages and, therefore, the more likely you will be able to spot a problem before it affects the user community.

Tip

You might need to increase the size of the log files in Event Viewer to accommodate an increase in logging activity. The default log sizes are larger in Windows Server 2008 R2 than in previous versions of Windows, which were notorious for running out of space.
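Log sizes can also be raised from the command line with `wevtutil sl <log> /ms:<bytes>`. wevtutil’s help describes a minimum size of 1,048,576 bytes and sizes kept in multiples of 64 KB (verify with `wevtutil sl /?` on your server). The sketch below clamps and rounds up on that assumption before building the command. The function names are hypothetical, and the resulting command must be run with administrative rights.

```python
MIN_LOG_BYTES = 1024 * 1024   # assumed minimum maximum-size (1 MB), per wevtutil help
LOG_SIZE_STEP = 64 * 1024     # assumed size granularity (64 KB)


def log_size_bytes(requested_bytes: int) -> int:
    """Clamp to the 1 MB minimum and round up to a 64 KB multiple."""
    size = max(requested_bytes, MIN_LOG_BYTES)
    return -(-size // LOG_SIZE_STEP) * LOG_SIZE_STEP   # ceiling division


def set_log_size_args(log: str, requested_bytes: int) -> list:
    """Arguments for 'wevtutil sl <log> /ms:<bytes>' (set maximum log size)."""
    return ["wevtutil", "sl", log, f"/ms:{log_size_bytes(requested_bytes)}"]
```

For example, `set_log_size_args("System", 200 * 1024 * 1024)` builds the command for a 200 MB System log, which can then be passed to `subprocess.run` on the server.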

Weekly Maintenance

Maintenance procedures that require slightly less attention than daily checks are categorized as a weekly routine and are examined in the following sections.

Checking Disk Space

Disk space is a precious commodity. Although the disk capacity of a Windows Server 2008 R2 system can be virtually endless, the amount of free space on all drives should be checked at least weekly, if not more frequently. Serious problems can occur if there isn’t enough disk space.

One of the most common disk space problems occurs on data drives where end users save and modify information. Other volumes, such as the system drive and partitions with logging data, can also quickly fill up.

As mentioned earlier, a lack of free disk space can cause a multitude of problems, including, but not limited to, failed services, applications that stop responding, and even system crashes.

To prevent these problems from occurring, administrators should keep at least 25% of each volume free.
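The weekly free-space check against the 25% guideline can be scripted with Python’s standard `shutil.disk_usage`. This is a minimal sketch: the function names are hypothetical, and the drive letters in the usage note are placeholders for your own volumes.

```python
import shutil


def percent_free(path: str) -> float:
    """Free space on the volume that holds path, as a percentage of its size."""
    usage = shutil.disk_usage(path)
    return 100.0 * usage.free / usage.total


def bytes_to_free(path: str, target_percent: float = 25.0) -> int:
    """Bytes that must be freed to bring the volume up to target_percent free."""
    usage = shutil.disk_usage(path)
    shortfall = usage.total * target_percent / 100.0 - usage.free
    return max(0, int(shortfall))


def low_space_volumes(paths, threshold: float = 25.0) -> dict:
    """Return {path: percent_free} for every volume below the threshold."""
    report = {}
    for path in paths:
        pct = percent_free(path)
        if pct < threshold:
            report[path] = round(pct, 1)
    return report
```

On a server, `low_space_volumes(["C:\\", "D:\\", "E:\\"])` returns only the volumes that have dropped below 25% free, and `bytes_to_free` shows how much data would have to be moved or removed from each one.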

Caution

If you need to free disk space, you should move or delete files and folders with caution. System files are automatically protected by Windows Server 2008 R2, but data is not.

Verifying Hardware

Hardware components supported by Windows Server 2008 R2 are reliable, but this doesn’t mean that they’ll always run continuously without failure. Hardware availability is measured in terms of mean time between failures (MTBF) and mean time to repair (MTTR). These measurements include downtime for both planned and unplanned events. The figures provided by the manufacturer are good guidelines to follow; however, mechanical parts are bound to fail at one time or another. As a result, hardware should be monitored weekly to ensure efficient operation.
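A common way to combine the two measurements is steady-state availability, MTBF / (MTBF + MTTR). The sketch below applies that standard formula. The function names are hypothetical, and the MTBF and MTTR figures in the example are illustrative rather than taken from any manufacturer.

```python
HOURS_PER_YEAR = 24 * 365


def availability(mtbf_hours: float, mttr_hours: float) -> float:
    """Steady-state availability: MTBF / (MTBF + MTTR)."""
    return mtbf_hours / (mtbf_hours + mttr_hours)


def downtime_hours_per_year(mtbf_hours: float, mttr_hours: float) -> float:
    """Expected hours of downtime per year implied by MTBF and MTTR."""
    return (1.0 - availability(mtbf_hours, mttr_hours)) * HOURS_PER_YEAR
```

With a hypothetical MTBF of 9,990 hours and MTTR of 10 hours, `availability(9990, 10)` is 0.999. That works out to roughly 8.8 hours of expected downtime per year, which is why shortening repair time (for example, with spare parts on hand) matters as much as buying reliable hardware.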

Hardware can be monitored in many different ways. For example, server systems might have internal checks and logging functionality to warn against possible failure, Windows Server 2008 R2’s System Monitor might reveal a hardware failure, and a physical hardware check can help to determine whether the system is about to experience a hardware problem.

If a failure has occurred or is about to occur, having an inventory of spare hardware can significantly improve the chances and speed of recovery. Checking system hardware weekly provides the opportunity to correct an issue before it becomes a problem.

Running Disk Defragmenter

Whenever files are created, deleted, or modified, Windows Server 2008 R2 assigns a group of clusters depending on the size of the file. As file size requirements fluctuate over time, so does the number of cluster groups assigned to the file. Even though this process is efficient when using NTFS, files and volumes become fragmented because a file no longer resides in a contiguous location on the disk.
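A file’s fragmentation can be pictured as the number of separate runs of adjacent clusters it occupies. The following toy model is illustrative only and is not how NTFS or Disk Defragmenter actually measures fragmentation. It counts the runs in an ordered list of hypothetical cluster numbers.

```python
def count_fragments(clusters):
    """Count contiguous runs in a file's clusters, listed in file order.

    A file stored in one unbroken run has 1 fragment; every jump to a
    non-adjacent cluster starts a new fragment.
    """
    fragments = 0
    previous = None
    for cluster in clusters:
        if previous is None or cluster != previous + 1:
            fragments += 1
        previous = cluster
    return fragments
```

A file on clusters 100 through 103 counts as 1 fragment. A file that grew into clusters 100, 101, 250, 251, 252, and 900 counts as 3, and each extra fragment means another seek when the file is read.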

As fragmentation levels increase, disk access slows. The system must spend additional resources and time to find all the cluster groups that make up a file, so files can’t be read from disk efficiently. To minimize fragmentation and give performance a boost, the administrator should defragment all volumes with Disk Defragmenter, a built-in utility that can analyze volumes for fragmentation and defragment them. The tool comes in both a command-line version and a graphical user interface version.
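The command-line version is defrag.exe. The sketch below only builds the command line. The switches it uses are `/A` to analyze, `/C` for all volumes, and `/V` for verbose output, as listed by `defrag /?` on Windows Server 2008 R2; confirm them on your own server. The function name is hypothetical, and defrag.exe must be run from an elevated prompt.

```python
def defrag_args(volumes=(), analyze_only=False, verbose=True):
    """Build a defrag.exe command line.

    volumes: drive letters such as ("C:", "D:"); empty means all volumes (/C).
    """
    args = ["defrag"]
    args.extend(volumes if volumes else ["/C"])
    if analyze_only:
        args.append("/A")   # analyze only, do not defragment
    if verbose:
        args.append("/V")   # print full fragmentation statistics
    return args
```

For example, `defrag_args(("C:",), analyze_only=True)` produces `["defrag", "C:", "/A", "/V"]`. You can pass that list to `subprocess.run` to check a volume before deciding whether to defragment it.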

To use the graphical user interface version of the Disk Defragmenter, do the following:

1. Start Disk Defragmenter by choosing Start, Run.

2. Enter dfrgui and click OK.

3. The tool automatically analyzes all the drives and suggests whether to defragment. This only happens if disk defragmentation is not scheduled to run automatically.

4. Select the volumes to defragment.

5. Click Defragment Disk to defragment immediately.

6. The defragmentation runs independently of the Disk Defragmenter GUI, so you can exit the tool while the defragmentation is running by clicking Close.

Unlike previous versions of the software, the Windows Server 2008 R2 Disk Defragmenter does not show a graphical view of volume fragmentation.

Disk Defragmenter also enables the administrator to set up a defragmentation schedule. This modifies the ScheduledDefrag task in Task Scheduler (located in Task Scheduler\Task Scheduler Library\Microsoft\Windows\Defrag\). After you select the Run on a Schedule option, you can set the schedule by clicking the Modify Schedule button and select the volumes to be defragmented by clicking the Select Volumes button. New volumes will automatically be defragmented by the task.
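To confirm during the weekly check that the ScheduledDefrag task still exists and when it will next run, the task can be queried with schtasks.exe. The sketch below builds a verbose, list-formatted query for that task path and parses the `Key: Value` lines of the output. It assumes English field names such as `Next Run Time`, which differ on localized systems. The helper names are hypothetical, and `query_defrag_task` runs only on Windows.

```python
import subprocess

DEFRAG_TASK = r"\Microsoft\Windows\Defrag\ScheduledDefrag"


def schtasks_query_args(task_name: str = DEFRAG_TASK) -> list:
    """Arguments for a verbose, list-formatted schtasks query of one task."""
    return ["schtasks", "/Query", "/TN", task_name, "/V", "/FO", "LIST"]


def parse_list_output(text: str) -> dict:
    """Parse 'Key: Value' lines from schtasks /FO LIST output into a dict."""
    fields = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip():
            fields.setdefault(key.strip(), value.strip())
    return fields


def query_defrag_task() -> dict:
    """Run schtasks.exe and return the parsed fields (Windows only)."""
    out = subprocess.run(schtasks_query_args(), capture_output=True,
                         text=True, check=True).stdout
    return parse_list_output(out)
```

Reading `query_defrag_task().get("Next Run Time")` on the server is a quick way to confirm that the schedule set in Disk Defragmenter is still in effect.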

Running the Domain Controller Diagnosis Utility

The Domain Controller Diagnosis (DCDIAG) utility is installed with the Active Directory Domain Services role in Windows Server 2008 R2 and is used to analyze the state of a domain controller (DC) and the domain services. It runs a series of tests, analyzes the state of the DC, and verifies different areas of the system, such as the following:

Connectivity

Replication

Topology integrity

Security descriptors

Netlogon rights

Intersite health

Roles

Trust verification

DCDIAG should be run on each DC on a weekly basis or as problems arise. DCDIAG’s syntax is as follows:

dcdiag.exe [/s:<Directory Server>[:<LDAP Port>]] [/u:<Domain>\<Username> /p:*|<Password>|""] [/hqv] [/n:<Naming Context>] [/f:<Log>] [/x:XMLLog.xml] [/skip:<Test>] [/test:<Test>]

Parameters for this utility are as follows:

/h— Display this help screen.

/s— Use <Domain Controller> as the home server. This is ignored for DCPromo and RegisterInDNS tests, which can only be run locally.

/n— Use <Naming Context> as the naming context to test. Domains can be specified in NetBIOS, DNS, or distinguished name (DN) format.

/u— Use domain\username credentials for binding with a password. Must also use the /p option.

/p— Use <Password> as the password. Must also use the /u option.

/a— Test all the servers in this site.

/e— Test all the servers in the entire enterprise. This parameter overrides the /a parameter.

/q— Quiet; print only error messages.

/v— Verbose; print extended information.

/i— Ignore; ignore superfluous error messages.

/fix— Fix; make safe repairs.

/f— Redirect all output to a file <Log>; /ferr will redirect error output separately.

/ferr:<ErrLog>— Redirect fatal error output to a separate file <ErrLog>.

/c— Comprehensive; run all tests, including nondefault tests but excluding DCPromo and RegisterInDNS. Can use with /skip.

/skip:<Test>— Skip the named test. Do not use in a command with /test.

/test:<Test>— Test only the specified test. Required tests will still be run. Do not use with the /skip parameter.

/x:<XMLLog.xml>— Redirect XML output to <XMLLog.xml>. Currently works with the /test:dns option only.

/xsl:<xslfile.xsl or xsltfile.xslt>— Add the processing instructions that reference a specified style sheet. Works with the /test:dns /x:<XMLLog.xml> option only.

The command supports a variety of tests. Some tests run by default, and others must be requested specifically. The command line supports selecting tests explicitly (/test) and skipping tests (/skip). Table 1 lists the valid tests.

Table 1. DCDIAG Tests

| Test Name | Description |
|---|---|
| Advertising | Checks whether each DC is advertising itself and whether it is advertising itself as having the capabilities of a DC. |
| CheckSDRefDom | Checks that all application directory partitions have appropriate security descriptor reference domains. |
| CheckSecurityError | Locates security errors and performs the initial diagnosis of the problem. This test is not run by default and has to be requested with the /test option. |
| Connectivity | Tests whether DCs are DNS registered, pingable, and have LDAP/RPC connectivity. This is a required test and cannot be skipped with the /skip option. |
| CrossRefValidation | Looks for cross-references that are in some way invalid. |
| CutoffServers | Checks for servers that won’t receive replications because their partners are down. This test is not run by default and has to be requested with the /test option. |
| DCPromo | Tests the existing DNS infrastructure for promotion to domain controller. |
| DNS | Checks the health of DNS settings for the whole enterprise. This test is not run by default and has to be requested with the /test option. |
| FrsEvent | Checks to see if there are any operation errors in the File Replication Service (FRS). Failing replication of the SYSVOL share can cause policy problems. |
| DFSREvent | Checks to see if there are any operation errors in DFS Replication (DFSR). |
| LocatorCheck | Checks that global role holders are known, can be located, and are responding. |
| Intersite | Checks for failures that would prevent or temporarily hold up intersite replication. |
| KccEvent | Checks that the Knowledge Consistency Checker is completing without errors. |
| KnowsOfRoleHolders | Checks whether the DC thinks it knows the role holders of the five FSMO roles. |
| MachineAccount | Checks to see whether the machine account has the proper information. Use the /RecreateMachineAccount parameter to attempt a repair if the local machine account is missing. Use /FixMachineAccount if the machine’s account flags are incorrect. |
| NCSecDesc | Checks that the security descriptors on the naming context heads have appropriate permissions for replication. |
| NetLogons | Checks that the appropriate logon privileges allow replication to proceed. |
| ObjectsReplicated | Checks that machine account and DSA objects have replicated. You can use /objectdn:<dn> with /n:<nc> to specify an additional object to check. |
| OutboundSecureChannels | Verifies that secure channels exist from all the DCs in the domain to the domains specified by /testdomain. The /nositerestriction parameter prevents the test from being limited to the DCs in the site. This test is not run by default and has to be requested with the /test option. |
| RegisterInDNS | Tests whether this domain controller can register the Domain Controller Locator DNS records. These records must be present in DNS for other computers to locate this domain controller for the <Active_Directory_Domain_DNS_Name> domain. Reports whether any modifications to the existing DNS infrastructure are required. Requires the /DnsDomain:<Active_Directory_Domain_DNS_Name> argument. |
| Replications | Checks for timely replication between domain controllers. |
| RidManager | Checks to see whether the RID master is accessible and whether it contains the proper information. |
| Services | Checks to see whether DC services are running on a system. |
| SystemLog | Checks that the system is running without errors. |
| Topology | Checks that the generated topology is fully connected for all DCs. This test is not run by default and has to be requested with the /test option. |
| VerifyEnterpriseReferences | Verifies that certain system references are intact for the FRS and replication infrastructure across all objects in the enterprise. This test is not run by default and has to be requested with the /test option. |
| VerifyReferences | Verifies that certain system references are intact for the FRS and replication infrastructure. |
| VerifyReplicas | Verifies that all application directory partitions are fully instantiated on all replica servers. This test is not run by default and has to be requested with the /test option. |
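A weekly DCDIAG run can be scripted so that each DC gets its own log file. This Python sketch builds dcdiag.exe command lines from a subset of the switches described earlier. It enforces two rules stated in this section: /test and /skip are not used in the same command, and the Connectivity test cannot be skipped. The DC names, log file names, and the `dcdiag_args` helper are hypothetical.

```python
def dcdiag_args(server=None, test=None, skip=None, verbose=False,
                comprehensive=False, log_file=None):
    """Build a dcdiag.exe command line from a subset of its switches."""
    if test and skip:
        raise ValueError("/test and /skip cannot be used in the same command")
    if skip and skip.lower() == "connectivity":
        raise ValueError("Connectivity is a required test and cannot be skipped")
    args = ["dcdiag"]
    if server:
        args.append(f"/s:{server}")        # home server to test
    if comprehensive:
        args.append("/c")                  # run all tests, including nondefault ones
    if verbose:
        args.append("/v")                  # print extended information
    if test:
        args.append(f"/test:{test}")       # run only this test (plus required tests)
    if skip:
        args.append(f"/skip:{skip}")       # skip the named test
    if log_file:
        args.append(f"/f:{log_file}")      # redirect all output to a log file
    return args


# Hypothetical weekly run: verbose diagnosis of each DC, one log file per DC.
WEEKLY_DCS = ["DC01", "DC02"]
weekly_commands = [dcdiag_args(dc, verbose=True, log_file=f"dcdiag_{dc}.log")
                   for dc in WEEKLY_DCS]
```

You can pass each list in `weekly_commands` to `subprocess.run` on a machine where dcdiag.exe is installed. The resulting log files can then be reviewed for failed tests.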

Monthly Maintenance

It is recommended that you perform the tasks examined in the following sections on a monthly basis.

Maintaining File System Integrity

CHKDSK scans for file system integrity and can check for lost clusters, cross-linked files, and more. If Windows Server 2008 R2 senses a problem, it will run CHKDSK automatically at startup.

Administrators can maintain FAT, FAT32, and NTFS file system integrity by running CHKDSK once a month. To run CHKDSK, do the following:

1. At the command prompt, change to the partition that you want to check.

2. Type CHKDSK without any parameters to check only for file system errors. No changes will be made.

3. If any errors are found, run the CHKDSK utility with the /f parameter to attempt to correct the errors found.
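When CHKDSK runs from a scheduled task or script, its exit code is easier to act on than its screen output. Microsoft’s chkdsk command reference documents exit codes 0 through 3, which are summarized in the sketch below. The helper names are hypothetical, and you should check the mapping against the documentation for your Windows version.

```python
CHKDSK_EXIT_CODES = {
    0: "No errors were found.",
    1: "Errors were found and fixed.",
    2: "Disk cleanup was performed, or was not performed because /f was not specified.",
    3: ("The disk could not be checked, errors could not be fixed, "
        "or errors were not fixed because /f was not specified."),
}


def chkdsk_args(volume: str, fix: bool = False) -> list:
    """Read-only check by default; add /f to attempt repairs."""
    return ["chkdsk", volume] + (["/f"] if fix else [])


def describe(exit_code: int) -> str:
    return CHKDSK_EXIT_CODES.get(exit_code, f"Unrecognized exit code {exit_code}")


def should_rerun_with_fix(exit_code: int) -> bool:
    # Exit code 3 can mean errors were left unfixed because /f was omitted;
    # it can also mean the disk could not be checked, so read the output too.
    return exit_code == 3
```

A monthly script can run `chkdsk_args("D:")` through `subprocess.run`, log `describe(result.returncode)`, and flag the volume for a `/f` run when `should_rerun_with_fix` returns True.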

Testing the UPS

An uninterruptible power supply (UPS) can be used to protect a system or group of systems from power problems (such as spikes and surges) and to keep the system running long enough after a power outage for an administrator to shut it down gracefully. An administrator should follow the UPS guidelines provided by the manufacturer at least once a month and should also perform scheduled monthly battery tests.

Validating Backups

Once a month, an administrator should validate backups by restoring them to a server located in a lab environment. This is in addition to verifying, from log files or the backup program’s management interface, that backups were successful. A restore gives the administrator the opportunity to verify the backups and to practice the restore procedures that would be used when recovering the server during a real disaster. In addition, this procedure tests the backup media to ensure that they are in working order and builds administrator confidence for recovering from a true disaster.
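One way to confirm that a lab restore is complete is to compare file hashes between the restored data and a reference copy. The sketch below uses SHA-256 from Python’s `hashlib`, and the function names are hypothetical. Files that changed on the production server after the backup ran will show up as `changed`, so compare against data captured at backup time where possible.

```python
import hashlib
from pathlib import Path


def file_digest(path: Path) -> str:
    """SHA-256 of a file, read in 1 MB chunks to handle large files."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1024 * 1024), b""):
            h.update(chunk)
    return h.hexdigest()


def tree_digests(root) -> dict:
    """Map each file's path relative to root to its SHA-256 digest."""
    root = Path(root)
    return {p.relative_to(root).as_posix(): file_digest(p)
            for p in root.rglob("*") if p.is_file()}


def compare_restore(source_root, restored_root) -> dict:
    """List files missing from, changed in, or extra in the restored tree."""
    src, dst = tree_digests(source_root), tree_digests(restored_root)
    return {
        "missing": sorted(set(src) - set(dst)),
        "changed": sorted(k for k in src.keys() & dst.keys() if src[k] != dst[k]),
        "extra": sorted(set(dst) - set(src)),
    }
```

An empty `missing` and `changed` list for a restored folder is good evidence that both the backup and the restore procedure work. Any entries point to files to investigate before a real disaster depends on them.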

Updating Documentation

An integral part of managing and maintaining any IT environment is to document the network infrastructure and procedures. The following are just a few of the documents you should consider having on hand:

Server build guides

Disaster recovery guides and procedures

Checklists

Configuration settings

Change configuration logs

Historical performance data

Special user rights assignments

Special application settings

As systems and services are built and procedures are established, document them to reduce learning curves and to simplify administration and maintenance.

It is important not only to document the IT environment adequately but, often even more importantly, to keep those documents up to date. Otherwise, documents quickly become outdated as the environment, processes, and procedures change along with the business.

Quarterly Maintenance

As the name implies, quarterly maintenance is performed four times a year. Areas to maintain and manage on a quarterly basis are typically fairly self-sufficient and self-sustaining, and they require only infrequent maintenance to stay healthy. This doesn’t mean, however, that the tasks are simple or that they are less critical than tasks that require more frequent maintenance.

Checking Storage Limits

Storage capacity on all volumes should be checked to ensure that all volumes have ample free space. Keep approximately 25% free space on all volumes.

Running low on or completely out of disk space creates unnecessary risk for any system. Services can fail, applications can stop responding, and systems can even crash if there isn’t enough free disk space.

Changing Administrator Passwords

Administrator passwords should, at a minimum, be changed every quarter (90 days). Changing these passwords strengthens security so that systems can’t easily be compromised. In addition to changing passwords, review other password requirements such as password age, history, length, and strength.
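The 90-day rule can be tracked with a script once you know the date each administrator password was last set. Possible sources are the Password last set line of `net user <account>` or the pwdLastSet attribute in Active Directory. The sketch below assumes those dates have already been collected into a dictionary; the account names and the `overdue_passwords` helper are hypothetical.

```python
from datetime import datetime, timedelta

MAX_PASSWORD_AGE = timedelta(days=90)   # one quarter, per the guideline above


def overdue_passwords(last_set, now=None, max_age=MAX_PASSWORD_AGE):
    """Return the sorted account names whose password is older than max_age.

    last_set: {"account name": datetime the password was last set}
    """
    now = datetime.now() if now is None else now
    return sorted(name for name, when in last_set.items() if now - when > max_age)
```

For example, with `now=datetime(2010, 4, 1)`, an Administrator password last set on December 1, 2009 is reported as overdue. A password set on March 1, 2010 is not.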