A disk subsystem that includes a RAID configuration

enables the disks in the system to work in concert to improve

performance, fault tolerance, or both.

Implementing Disk Fault Tolerance

Fault tolerance is the ability of a computer or operating system to

respond to a catastrophic event, such as a power outage or hardware

failure so that no data is lost and work in progress is not

corrupted. Fully fault-tolerant systems using fault-tolerant disk arrays

prevent the loss of data. You can implement RAID fault tolerance as

either a hardware or software solution.

Hardware Implementations of RAID

In a hardware

solution, the disk controller interface handles the creation and

regeneration of redundant information. Some hardware vendors implement

RAID data protection directly in their hardware, as with disk array

controller cards. Because these methods are vendor specific and bypass

the fault tolerance software drivers of the operating system, they offer

performance improvements over software implementations of RAID.

Consider the following points when deciding whether to use a software or hardware implementation of RAID:

Hardware fault tolerance is more expensive than software fault tolerance and might limit equipment options to a single vendor.

Hardware fault tolerance generally provides faster disk I/O than software fault tolerance.

Hardware

fault tolerance solutions might implement hot swapping of hard disks to

allow for replacement of a failed hard disk without shutting down the

computer, and hot sparing, so that a failed disk is automatically replaced

by an online spare.

Software Implementations of RAID

Windows Server 2003

supports one RAID implementation (striped, RAID-0) that is not

fault-tolerant and two implementations that provide fault tolerance:

mirrored volumes (RAID-1) and striped volumes with parity (RAID-5). You

can create fault-tolerant RAID volumes only on dynamic disks formatted

with NTFS.

With Windows

Server 2003 implementations of RAID, there is no fault tolerance

following a failure until the fault is repaired. If a second fault

occurs before the data lost from the first fault is regenerated, you can

recover the data only by restoring it from a backup.

Striped Volumes

A striped volume, which

implements RAID Level 0, uses two or more disks and writes data to all

disks at the same rate. Because I/O requests are handled by

multiple spindles, read and write performance improves.

Striped volumes are popular for configurations in which performance and

large storage area are critical, such as computer-aided design (CAD) and

digital media applications.
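The way striping spreads a volume across disks can be sketched in a few lines of Python. This is an illustration only, not the Windows implementation: the function name `locate` is hypothetical, and the 64 KB stripe unit is an assumption made for the example.

```python
STRIPE_UNIT = 64 * 1024  # assumed stripe unit in bytes (illustrative value)


def locate(offset: int, disk_count: int, stripe_unit: int = STRIPE_UNIT) -> tuple[int, int]:
    """Map a byte offset in a striped volume to (disk index, byte offset on that disk)."""
    if disk_count < 2:
        raise ValueError("a striped volume needs at least two disks")
    if offset < 0:
        raise ValueError("offset must be non-negative")
    # Which stripe unit the offset falls in, and how far into that unit.
    unit_index, within_unit = divmod(offset, stripe_unit)
    # Units are dealt out to the disks round-robin, one row at a time.
    row, disk = divmod(unit_index, disk_count)
    return disk, row * stripe_unit + within_unit
```

Under these assumptions, consecutive stripe units land on different disks, which is why a large sequential read or write keeps several spindles busy at once.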

Note

You

might not experience a performance improvement on IDE unless you use

separate controllers. Separate controllers—ideally, one for each

drive—will improve performance by distributing I/O requests among

controllers as well as among drives.

Creating a Striped Volume

To create a striped

volume, you must have unallocated space on at least two dynamic disks.

Right-click one of the spaces and choose New Volume. The New Volume

Wizard will step you through the process of selecting a striped volume

and choosing other disk space to include in the volume. Striped volumes

can be assigned a drive letter and folder paths. They can be formatted

only with NTFS.

Up

to 32 disks can participate in a striped volume. The amount of space

used on each disk in the volume will be equal to the smallest amount of

space on any one disk. For example, if Disk 1 has 200 GB of unallocated

space, and Disk 2 has 120 GB of unallocated space, the striped volume can

contain, at most, 240 GB (120 GB on each disk), because the stripe on

Disk 1 can be no larger than the stripe on Disk 2. All disk space in the volume is

used for data; there is no space used for fault tolerance.
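The sizing rule above can be checked with a short calculation. This is a minimal sketch; the function name `striped_volume_size` is hypothetical and the sizes are in GB.

```python
def striped_volume_size(free_space_gb: list[float]) -> float:
    """Largest striped volume that fits in the given per-disk unallocated space."""
    if not 2 <= len(free_space_gb) <= 32:
        raise ValueError("a striped volume uses between 2 and 32 disks")
    # Every disk contributes the same amount, limited by the smallest free area.
    return len(free_space_gb) * min(free_space_gb)


# The example from the text: 200 GB free on Disk 1, 120 GB free on Disk 2.
example_size = striped_volume_size([200, 120])
```

For the two disks in the example, `example_size` is 240, and the remaining 80 GB on Disk 1 stays unallocated.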

Recovering a Striped Volume

Because data is

striped over more than one physical disk, performance is enhanced, but

fault tolerance is decreased—there is more risk because if any one drive

in the volume fails, all data on the volume is lost. It is important to

have a backup of striped data. If one or more disks in a striped volume

fails, you must delete the volume, replace the failed disk(s) and

recreate the volume. Then you must restore data from the backup.

Tip

Striped

volumes provide maximum storage and performance but support no fault

tolerance. The only recovery option is your regular backup

routine.

Mirrored Volumes

A mirrored volume

provides good performance along with excellent fault tolerance. Two

disks participate in a mirrored volume, and all data is written to both

disks. As with all RAID configurations, use separate controllers (by

adding a controller, you create a configuration called “duplexing”) for

maximum performance. Mirrored volumes correspond to RAID-1 hardware

configurations.

Create Mirrored Volumes

To create a mirrored

volume, you must have unallocated space on two dynamic disks.

Right-click one of the spaces and choose New Volume. The New Volume

Wizard will step you through the process of selecting a mirrored volume

and choosing space on another disk to include in the volume. Mirrored

volumes can be assigned a drive letter and folder paths. Both copies of

the mirror share the same assignment.

You can also mirror an

existing simple volume by right-clicking the volume and choosing Add

Mirror and selecting a drive with sufficient unallocated space.

Once you have

established the mirror, the system begins copying data, sector by

sector. During that time, the volume status is reported as Resynching.

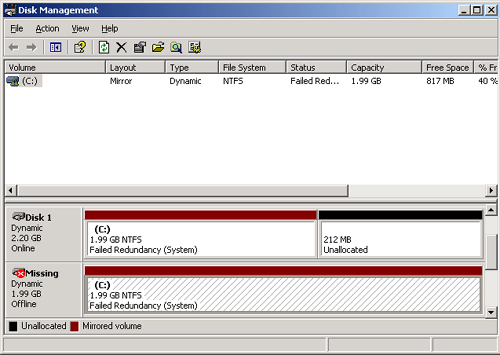

Recovering from Mirrored Disk Failures

The recovery process for

a failed disk within a mirrored volume depends on the type of failure

that occurs. If a disk has experienced transient I/O errors, both

portions of the mirror will show a status of Failed Redundancy. The disk

with the errors will report a status of Offline or Missing, as seen in Figure 1.

After correcting

the cause of the I/O error—perhaps a bad cable connection or power

supply—right-click the volume on the problematic disk and choose

Reactivate Volume or right-click the disk and choose Reactivate Disk.

Reactivating brings the disk or volume back online. The mirror will then

resynchronize automatically.

If you want to stop mirroring, you have three choices, depending on what you want the outcome to be:

Delete the volume

If you delete the volume, the volume and all the information it

contains are removed. The resulting unallocated space is then available

for new volumes.

Remove the mirror

If you remove the mirror, the mirror is broken and the space on one of

the disks becomes unallocated. The other disk maintains a copy of the

data that had been mirrored, but that data is of course no longer

fault-tolerant.

Break the mirror

If you break the mirror, the mirror is broken but both disks maintain

copies of the data. The portion of the mirror that you select when you

choose Break Mirror maintains the original mirrored volume’s drive

letter, shared folders, paging file, and reparse points. The secondary

drive is given the next available drive letter.

Knowing

that information, how do you suppose you would replace a failed disk—a

member of the mirrored volume that simply died? Well, after physically

replacing the disk, you will need to open Disk Management to rescan,

initialize the disk and convert it to dynamic. After all that work you

will find that you can’t remirror a mirrored volume, even though half of

it doesn’t exist. So far as the remaining disk is concerned, the

mirrored volume still exists—its partner in redundancy is just out to

lunch. You must first remove the missing half of the mirror. Right-click the

mirror and choose Remove Mirror. In the Remove Mirror dialog box, it is

important to select the half of the volume that is missing; the volume

you select will be deleted when you click Remove Mirror. The volume you

did not select will become a simple volume. Once the operation is

complete, right-click the healthy, simple volume and choose Add Mirror.

Select the new disk and the mirror will be created again.

Tip

Mirrored

volumes provide fault tolerance and better write performance than

RAID-5 volumes. However, because each disk in the mirror contains a full

copy of the data in the volume, a mirrored volume is the least efficient type of

volume in terms of disk utilization.

RAID-5 Volumes

A RAID-5 volume uses

three or more physical disks to provide fault tolerance and excellent

read performance while reducing the cost of fault tolerance in terms of

disk capacity. In each stripe, data is written to all but one disk in the RAID-5 volume. That

disk receives a chunk of data, called parity, which acts as a checksum

and provides fault tolerance for the stripe. The calculation of parity

during a write operation means that RAID-5 is quite intensive on the

server’s processor for a volume that is not read-only. RAID-5 provides

improved read performance, however, as data is retrieved from multiple

spindles simultaneously.
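RAID-5 parity is conventionally computed as a bytewise XOR of the data blocks in a stripe. The following sketch illustrates that idea and is not the Windows Server 2003 driver code: the helper name `xor_blocks` and the sample blocks are hypothetical.

```python
from functools import reduce


def xor_blocks(blocks: list[bytes]) -> bytes:
    """XOR equal-length blocks together, byte by byte."""
    if not blocks:
        raise ValueError("at least one block is required")
    if any(len(block) != len(blocks[0]) for block in blocks):
        raise ValueError("all blocks must have the same length")
    # zip(*blocks) yields one tuple of byte values per position in the block.
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# One stripe on a three-disk volume: two data blocks plus one parity block.
data = [b"ABCD", b"EFGH"]
parity = xor_blocks(data)

# If the disk holding data[1] is lost, XOR of the survivors regenerates it.
rebuilt = xor_blocks([data[0], parity])
```

Because XOR is its own inverse, the same function produces the parity on a write and regenerates a missing block on a read. It also shows why writes cost more than reads: every write must update the parity as well as the data.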

As data in a file is

written to the volume, the parity is distributed among all the disks in the

set. But from a storage capacity perspective, the amount of space used

for fault tolerance is the equivalent of the space used by one disk in

the volume.

From a storage capacity

perspective, that makes RAID-5 more economical than mirroring. In a

minimal, three-disk RAID-5 volume, one-third of the capacity is used for

parity, as opposed to one-half of a mirrored volume being used for

fault tolerance. Because as many as 32 disks can participate in a RAID-5

volume, you can theoretically configure a fault-tolerant volume which

uses only 1/32 of its capacity to provide fault tolerance for the entire

volume.
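The capacity comparison can be expressed as a small calculation. This is a sketch under the limits stated in this section (two disks for a mirror, 3 to 32 disks for RAID-5). The function name `redundancy_fraction` and the type labels are hypothetical.

```python
from fractions import Fraction


def redundancy_fraction(volume_type: str, disk_count: int) -> Fraction:
    """Fraction of a volume's raw capacity consumed by fault tolerance."""
    if volume_type == "mirror":
        if disk_count != 2:
            raise ValueError("a mirrored volume uses exactly two disks")
        return Fraction(1, 2)  # one full copy of the data
    if volume_type == "raid5":
        if not 3 <= disk_count <= 32:
            raise ValueError("a RAID-5 volume uses between 3 and 32 disks")
        return Fraction(1, disk_count)  # the equivalent of one disk holds parity
    raise ValueError(f"unknown volume type: {volume_type}")
```

Calling `redundancy_fraction("raid5", 3)` gives one-third, and `redundancy_fraction("raid5", 32)` gives the theoretical 1/32 mentioned above, compared with one-half for a mirror.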

Configure RAID-5 Volumes

You need to have

space on at least three dynamic disks to be able to create a RAID-5

volume. Right-click one disk’s unallocated space and choose New Volume.

The New Volume Wizard will step you through selecting a RAID-5 volume

type, and then selecting the disks that will participate in the volume.

The

capacity of the volume is limited to the smallest section of

unallocated space on any one of the volume’s disks. If Disk 2 has 50 GB

of unallocated space, but Disks 3 and 4 have 100 GB of unallocated

space, the stripe can only use 50 GB of space on Disks 3 and 4—the space

used on each disk in the volume is identical. The capacity, or Volume

Size reported by the New Volume Wizard will represent the amount of

space available for data after accounting for parity. To continue our

example, the RAID-5 volume size would be 100 GB—the total capacity minus

the equivalent of one disk’s space for parity.
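The volume size arithmetic in this example can be reproduced as follows. This is a minimal sketch; the function name `raid5_volume_size` is hypothetical and the sizes are in GB.

```python
def raid5_volume_size(free_space_gb: list[float]) -> float:
    """Usable data capacity of a RAID-5 volume built from the given free areas."""
    if not 3 <= len(free_space_gb) <= 32:
        raise ValueError("a RAID-5 volume uses between 3 and 32 disks")
    # Each disk contributes the same amount, set by the smallest free area.
    per_disk = min(free_space_gb)
    # The equivalent of one disk's contribution is consumed by parity.
    return (len(free_space_gb) - 1) * per_disk


# The example from the text: Disk 2 has 50 GB free, Disks 3 and 4 have 100 GB each.
example_size = raid5_volume_size([50, 100, 100])
```

Here each disk contributes 50 GB, for 150 GB of raw space, and `example_size` is 100 once one disk's worth of parity is subtracted.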

RAID-5 volumes can be assigned a drive letter or folder paths. They can be formatted only with NTFS.

Because RAID-5 volumes

are created as native dynamic volumes from unallocated space, you cannot

turn any other type of volume into a RAID-5 volume without backing up

that volume’s data and restoring into the new RAID-5 volume.

Recovering a Failed RAID-5 Volume

If a single disk fails

in a RAID-5 volume, data can continue to be accessed. During read

operations, any missing data is regenerated on the fly through a

calculation involving remaining data and parity information. Performance

will be degraded and, of course, if a second drive fails it’s time to

pull out the backup tapes. RAID-5 and mirrored volumes can only sustain a

single drive failure.

If the drive is returned

to service, you may need to rescan, and then you will need to

right-click the volume and choose Reactivate Volume. The system will

then rebuild missing data and the volume will be fully functional again.

If the drive does not

offer a Reactivate option, or if you have had to replace the disk, you

may need to rescan, initialize the disk, convert it to dynamic, then

right-click the volume and choose Repair Volume. You will be asked to

select the disk where the missing volume member should be recreated.

Select the new disk and the system will regenerate the missing data.

Mirrored Volumes versus RAID-5 Volumes

Mirrored volumes

(RAID-1) and RAID-5 volumes provide different levels of fault tolerance.

Deciding which option to implement depends on the level of protection

you require and the cost of hardware. The major differences between

mirrored volumes and RAID-5 volumes are performance and cost. Table 1 describes some differences between software-level RAID-1 and RAID-5.

Table 1. RAID Performance and Costs

| Mirrored Volumes (RAID-1) | Striped Volumes with Parity (RAID-5) |
|---|---|
| Can protect system or boot partition | Cannot protect system or boot partition |
| Requires two hard disks | Requires a minimum of three hard disks and allows a maximum of 32 hard disks |
| Has a higher cost per MB | Has a lower cost per MB |
| 50 percent redundancy | 33 percent maximum redundancy |
| Has good read and write performance | Has excellent read and moderate write performance |
| Uses less system memory | Requires more system memory |

Creating Fault Tolerance for the System Volume

Because RAID-5 is a

native dynamic volume, it is not possible to install or start the

Windows Server 2003 operating system on a RAID-5 volume created by the

Windows Server 2003 fault-tolerant disk technologies.

Tip

Hardware RAID,

however, is invisible to Windows Server 2003, so the operating system

can (and should, where available) be installed on hardware RAID arrays.

The only option for

creating fault tolerance for the system, without buying hardware RAID,

is thus to mirror the system volume. You can mirror the system volume by

following the procedures described for creating a mirrored volume:

right-click the system volume and choose Add Mirror. Unlike in Windows

2000, you do not need to restart, and the BOOT.INI file is updated

automatically so that you can start to the secondary drive if the

primary drive fails.

If the drives are attached

to IDE controllers, and the primary drive fails, you may have to remove

that drive, change the secondary drive to the primary controller and set

its jumpers or cable position so that it is the master. Otherwise, the

system may not boot to the secondary drive.

Tip

If

you are going to mirror the system volume, do so on one or two SCSI

controllers. If you use two controllers, make sure they are of the same

type. This configuration will be the most easily supported and

recovered.

There

are two potential “gotchas” when you upgrade disks from previous

versions of Windows, or attempt to move disks to a Windows Server 2003

computer from a computer running a previous version of Windows.

First, if a disk

was configured in a Windows 2000 computer as a basic disk, then was

converted to dynamic, you cannot extend that disk’s simple volumes onto

other disks using Windows Server 2003. In other words, if you move that

disk to a Windows Server 2003 computer, or upgrade the operating system

to Windows Server 2003, you cannot create spanned volumes out of the

disk’s simple volumes.

Second,

Windows Server 2003 no longer supports multidisk arrays created in

Windows NT 4. Windows NT 4 created mirrored, striped, and

striped-with-parity (RAID-5) sets using basic disks. Windows 2000

permitted the use of those disk sets, although it was important to

convert the sets to dynamic quickly in order to facilitate

troubleshooting and recovery. Windows Server 2003 does not recognize the

volumes. On the off chance that you upgrade a server from Windows NT 4

to Windows Server 2003, any RAID sets will no longer be visible. You

must first back up all data prior to upgrading or moving those disks,

and then, after recreating the fault-tolerant sets in Windows Server

2003, restore the data.