1. Networking components

Of the four main hardware components, network I/O isn't likely to contribute to SQL Server performance bottlenecks. Tuning disk I/O, memory, and CPU resources is far more likely to yield bigger performance increases. That being said, there are a number of important network-related settings that we need to take into account, such as maximizing switch speed, building fault tolerance into network cards, and manually configuring network card settings.

1.1. Gigabit switches

Almost all servers purchased today come preinstalled with one or more gigabit network connections offering roughly 10 to 100 times the bandwidth of previous generations.

The speed of the network card is only as good as the switch port it's connected to. It's common for SQL Servers with gigabit network cards to be connected to 100Mbps switch ports. Gigabit switches should be used where possible, particularly at the server level between database and application servers.
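To put the difference in perspective, the arithmetic can be sketched in a few lines of Python. This is an illustrative calculation only: the 50 GB copy size is an assumed figure, and the result is an ideal line rate that ignores protocol overhead and disk throughput.

```python
def transfer_seconds(size_gb: float, link_mbps: float) -> float:
    """Ideal time to move size_gb gigabytes over a link rated at link_mbps."""
    # Decimal units: 1 GB = 1000 MB, and 1 MB = 8 megabits.
    size_megabits = size_gb * 1000 * 8
    return size_megabits / link_mbps


# Assumed example: copying a 50 GB backup between two servers.
for label, mbps in [("100Mbps port", 100), ("gigabit port", 1000)]:
    minutes = transfer_seconds(50, mbps) / 60
    print(f"{label}: {minutes:.1f} minutes for a 50 GB copy")
# 100Mbps port: 66.7 minutes for a 50 GB copy
# gigabit port: 6.7 minutes for a 50 GB copy
```

A gigabit card plugged into a 100Mbps port behaves like the first line, not the second.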

In smaller networks, it's not uncommon for hubs to be used instead of switches. Hubs broadcast traffic to all network nodes. In contrast, switches intelligently route traffic as required, and should be used to reduce overall network traffic.

1.2. NIC teaming

To increase network bandwidth and provide fault tolerance at the network level, a technique known as NIC teaming can be used. NIC teaming involves two or more physical network interface cards (NICs) used as a single logical NIC. Both cards operate at the same time to increase bandwidth, and if one fails, the other continues operating.

Although NIC teaming is a useful technique from both a performance and a fault tolerance perspective, there are some known limitations and restrictions regarding its use in a clustered environment.

1.3. Manually configuring NIC settings

Finally, most network cards offer an autosense mode that lets the card configure itself to match the speed of the connected switch port. It's not uncommon for autosense to get it wrong, artificially limiting throughput, so NIC settings (speed and duplex) should be manually configured.
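One way to catch an autosense mismatch is to compare the settings each NIC actually negotiated against the settings you intended. The following is a minimal sketch of that comparison; the `LinkSetting` type, the NIC names, and the sample values are hypothetical, and how the actual settings are collected (vendor utility, operating system tools, and so on) is outside the scope of the sketch.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LinkSetting:
    """Hypothetical record of a NIC's speed and duplex mode."""
    speed_mbps: int
    full_duplex: bool


def find_mismatches(expected, actual):
    """Return {nic_name: (expected, actual)} for NICs whose settings differ."""
    return {
        name: (want, actual.get(name))
        for name, want in expected.items()
        if actual.get(name) != want
    }


# Hypothetical inventory: what was intended versus what was negotiated.
expected = {"NIC1": LinkSetting(1000, True), "NIC2": LinkSetting(1000, True)}
actual = {"NIC1": LinkSetting(1000, True), "NIC2": LinkSetting(100, False)}

mismatches = find_mismatches(expected, actual)
for name, (want, got) in mismatches.items():
    print(f"{name}: expected {want}, found {got}")
```

In this made-up inventory only NIC2 is reported, because it negotiated 100Mbps half duplex instead of gigabit full duplex.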

Let's move from individual server components to the server as a whole, focusing on the trend toward server consolidation and virtualization.

2. Server consolidation and virtualization

The arrival of personal computer networks marked a shift away from "big iron" mainframes and dumb terminals to a decentralized processing model comprised of many (relatively cheap) servers and lots of personal computers. In many ways, we've come full circle, with a shift back to a centralized model of SANs and virtualized servers running on fewer, more powerful servers.

In recent years, the shift toward server consolidation and virtualization has gained pace, and in the years ahead, the idea of a dedicated, nonvirtualized server may seem quite odd. From a SQL Server perspective, this has a number of important ramifications. Before we look at the considerations and differences, let's begin by taking a look at the goals of consolidation and virtualization.

2.1. Goals of consolidation and virtualization

The plummeting cost and increased power of today's server components have moved the real cost of modern computing from hardware to people, processes, power, and space. As such, in a never-ending quest to minimize operating costs, businesses have embraced consolidation and virtualization techniques. The major goals are to avoid server sprawl and minimize costs.

Server sprawl

The term server sprawl is used to describe the uncontrolled growth of servers throughout the enterprise, making administration very difficult. SQL Server sprawl is a particularly nasty problem; consider an example of a SQL Server instance running on a PC sitting under someone's desk, installed by a DBA who is no longer with the organization and who never documented its existence. Does this instance need backing up? Does it contain sensitive data? Do you even know that it exists?

Given the ease with which new SQL Server installations can be deployed, and the power of today's commodity PCs, SQL Server sprawl is an all-too-common problem. While there are tools for discovering the presence of SQL Server instances, and new SQL Server features such as policy-based management that make administration much simpler, the sprawl issue remains a significant problem, and one of the prime reasons for consolidation and virtualization projects.

The Microsoft Assessment and Planning Toolkit Solution Accelerator is an excellent (free) tool that can be used in assessing existing infrastructure. From a SQL Server perspective, one of the great aspects of this tool is its ability to discover installed SQL Servers on the network, handy for planning upgrades and avoiding out-of-control sprawl situations.

Operating costs

Each physical server consumes space and power, the natural enemies of a data center. Once the data center is full, expanding or building a new one is a costly exercise, and one that makes little sense if the existing servers are running at 50 percent utilization.

As computing power increases and virtualization software improves, the march toward fewer, more powerful servers hosting multiple virtual servers and/or more database instances is an industry trend that shows no sign of slowing down. The real question for a DBA is often how to consolidate/virtualize rather than whether to consolidate/virtualize. In answering that question, let's look at each technique in turn, beginning with consolidation.

2.2. Consolidation

Although virtualization can be considered a form of consolidation, in this section we'll take consolidation to mean installing multiple SQL Server instances on the one server, or moving multiple databases to the one instance. Figure 1 shows an example of consolidating a number of database instances onto a failover cluster.

Support of NUMA hardware, the ability to install multiple instances and cap each one's memory and CPU, and the introduction of Resource Governor in SQL Server 2008 all contribute to the ability to effectively consolidate a large number of databases and/or database instances on the one server.

Just as creating a new virtual server is simple, installing a new SQL Server instance on an existing server is also easy, as is migrating a database from one instance to another. But just because these tasks are simple doesn't mean you should perform them without thought and planning. Let's take a look at a number of important consolidation considerations for SQL Server.

Baseline analysis

From a consolidation perspective, having baseline performance data at hand helps you make sensible decisions on the placement of instances. For example, consolidating a number of CPU-starved servers on a single-core server doesn't make any sense. In contrast, consolidating servers that consume very few resources does make sense. Accurate baseline data is a crucial component in making the right choice as to which servers and/or databases should be consolidated.

When examining typical usage as part of a consolidation process, take care to ensure batch processes are considered. For example, two SQL Server instances may coexist on the one server perfectly well until the end of the month, at which point they both run a large end-of-month batch process, potentially causing each process to exceed the required execution window.
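The end-of-month scenario can be checked against baseline data before consolidating. The sketch below is one possible approach, not a prescribed method: the instance names, period labels, and CPU figures are invented, and demand is expressed as a percentage of the target server's CPU capacity.

```python
def overloaded_periods(profiles, capacity):
    """Return the periods in which combined demand exceeds capacity.

    profiles maps an instance name to {period_label: cpu_demand}.
    """
    totals = {}
    for demand_by_period in profiles.values():
        for period, demand in demand_by_period.items():
            totals[period] = totals.get(period, 0) + demand
    return sorted(p for p, total in totals.items() if total > capacity)


# Hypothetical baseline figures for two consolidation candidates.
profiles = {
    "SalesInstance": {"typical day": 30, "month end": 70},
    "FinanceInstance": {"typical day": 25, "month end": 65},
}

print(overloaded_periods(profiles, capacity=100))
# ['month end']
```

On a typical day the two instances fit comfortably (55 percent combined), but their month-end batch runs together demand 135 percent, which is exactly the kind of clash that averaged figures hide.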

Administrative considerations

Consolidation brings with it an even mix of benefits and challenges. We've just covered the importance of consolidating complementary instances from a performance perspective. Equally important is considering a number of other administration aspects:

Maintenance windows: How long is each server's maintenance window, and will the combination of their maintenance windows work together on a consolidated server?

Disk growth: Is there enough disk space (and physical disk isolation) to ensure the database growth and backup requirements can be met?
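The maintenance window question can be made concrete by finding the period that every candidate server's window has in common. The following is a minimal sketch under simplifying assumptions: each window is a hypothetical (start_hour, end_hour) pair on a 24-hour clock, and no window crosses midnight.

```python
def common_window(windows):
    """Return the (start, end) hours shared by every window, or None."""
    start = max(w[0] for w in windows)  # latest opening time
    end = min(w[1] for w in windows)    # earliest closing time
    return (start, end) if start < end else None


# Hypothetical windows: 1am-5am and 3am-6am share two hours.
print(common_window([(1, 5), (3, 6)]))  # (3, 5)

# 1am-3am and 4am-6am never overlap, so no shared window exists.
print(common_window([(1, 3), (4, 6)]))  # None
```

A result of None suggests the servers are poor candidates for the same consolidated host unless one of the windows can be renegotiated.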

Tempdb

Apart from the ability to affinitize CPUs and cap memory usage, choosing to install multiple instances has one distinct advantage over placing multiple databases in the one instance: each instance has its own tempdb database. Depending on the databases being consolidated, installing multiple instances allows more than one tempdb database to be available, enabling the placement of databases with heavy tempdb requirements in the appropriate instance.

Let's turn our attention now to virtualization, a specialized form of consolidation.

2.3. Virtualization

Unlike the SQL Server instance/database consolidation techniques we've outlined so far, virtualization occurs at a lower level by enabling a single server to be logically carved up into multiple virtual machines (VMs), or guests. Each VM shares the physical server's hardware resources but is otherwise separate with its own operating system and applications.

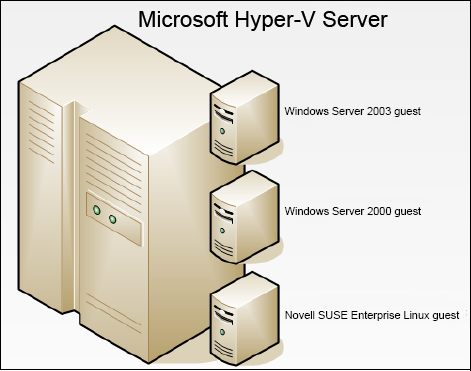

Virtualization platforms, also known as hypervisors, are classified as either Type 1 or Type 2. Type 1 hypervisors, commonly referred to as native or bare-metal hypervisors, run directly on top of the server's hardware. VMware's ESX Server and Microsoft's Hyper-V (see figure 2) are recent examples of Type 1 hypervisors.

Type 2 hypervisors such as VMware Workstation and Microsoft's Virtual Server run within an existing operating system. For example, a laptop running Windows Vista could have VMware Workstation installed in order to host one or more guest VMs running various operating systems, such as Windows Server 2008 or Novell SUSE Linux.

Type 1 hypervisors are typically used in server environments where maximum performance of guest virtual machines is the prime concern. In contrast, Type 2 hypervisors are typically used in development and testing situations, enabling a laptop, for example, to host many different guest operating systems for various purposes.

Let's take a look at some of the pros and cons of virtualization as compared with the consolidation techniques covered above.

Advantages of virtualization

Virtualization offers many unique advantages:

Resource flexibility: Unlike a dedicated physical server, resources (RAM, CPU, and so forth) in a VM can be easily increased or decreased, with spare capacity coming from or returned to the host server. Further, some virtualization solutions enable VMs to dynamically move to another physical server, thus enabling large numbers of virtual machines to be balanced across a pool of physical servers.

Guest operating systems: A single physical server can host many different operating systems and/or different versions of the one operating system.

Ability to convert physical to virtual servers: The major virtualization products include tools to create a VM based on an existing physical machine. One of the many advantages of such tools is the ability to preserve the state of an older legacy server that may not be required anymore. If required, the converted virtual server can be powered on, without having to maintain the physical server and the associated power, cooling, and space requirements while it's not being used.

Portability and disaster recovery: A VM can be easily copied from one server to another, perhaps in a different physical location. Further, various products are available that specialize in the real-time replication of a VM, including its applications and data, from one location to another, thus enabling enhanced disaster-recovery options.

Snapshot/rollback capability: A powerful aspect of some virtualization platforms is the ability to snapshot a VM for later rollback purposes. At the machine level, this feature can be considered as backup/restore. It enables you to make changes, safe in the knowledge that you can restore the snapshot if necessary. An example is performing an in-place upgrade of SQL Server 2005 to 2008. Should the upgrade fail, the snapshot can be restored, putting the system back to its pre-upgrade state.

Despite these clear advantages, there are a number of issues for consideration before you decide to virtualize SQL Server environments.

Considerations for virtualizing SQL Server

Arguably the single biggest issue for consideration when virtualizing SQL Server is that of support, particularly for mission-critical production systems. It's not uncommon to hear of organizations with virtualized SQL Server environments having difficulty during support incidents due to the presence of a virtualization platform. A common request during such incidents is to reproduce the problem in a nonvirtualized environment. Such a request is usually unrealistic when dealing with a critical 24/7 production SQL Server environment.

The support issue is commonly cited as the prime reason for avoiding virtualization in production environments (and associated volume/load test environments). Those who take this approach often use virtualization in other less critical environments, such as development and testing.

Other considerations for SQL Server virtualization include the following:

Scalability: The maximum resource limitations per VM (which vary depending on the virtualization platform and version) may present an issue for high-volume applications that require maximum scalability. In such cases, using physical servers, with scalability limited only by the hardware and operating system, may present a more flexible solution.

Performance overhead: A commonly cited figure for the performance overhead of the virtualization platform is approximately 10 percent, although this depends on the hypervisor.

Baseline analysis: As with the server consolidation techniques we discussed earlier, consideration should be given to the profiles of the individual virtual servers running on the same machine. For example, placing many CPU-intensive VMs together on a single-core host machine makes little sense.

Licensing: Licensing can be a tricky and complex area, so I'm not going to discuss the pros and cons of licensing virtualization. But I do recommend that before deciding on a server consolidation technique, you understand the licensing implications fully.

Toolset: One of the things that becomes apparent when troubleshooting a performance problem on a VM is that to get the full picture of what's happening on the server, you need access to the virtualization toolset in order to determine the impact from other VMs. Depending on the organization, access to such tools may or may not be granted to a DBA.

Virtualization and consolidation techniques are here to stay; it's vitally important that the pros and cons of each technique are well understood.