SharePoint and Log Shipping

As with SQL Server database backups in general, several types of SharePoint databases cannot

be preserved via SQL Server log shipping. The following list addresses

each type of database and whether it can be made highly available via

transaction log shipping.

Configuration database.

You should not log-ship SharePoint configuration databases;

configuration databases are farm specific. A configuration database is

intended to be used only with the original farm it is attached to.

Search databases.

Because search databases are tightly integrated with the index files

stored on the file system of a SharePoint crawl server, you should not

log-ship them. The time it takes to transfer log files between primary

and secondary servers can result in inconsistencies between

the database and the indices. In the case of a disaster, it is likely a

better option to re-create the indices from scratch or back them up

using the Central Administration site or PowerShell than to rely on a SQL Server

backup. If the SharePoint content databases that are being log-shipped

are attached to a standby farm, you can use that farm’s search

components to crawl and index them.

Some Service Application databases.

It is difficult to succinctly identify exactly which databases for

SharePoint 2010 Service Applications cannot be log-shipped because of

the large number of Service Applications available as well as the fact

that the Service Application Framework is extensible and supports the

creation of custom Service Applications. For a good list of what Service

Application databases can and cannot be log-shipped, as well as general

criteria for making the distinction, see http://technet.microsoft.com/en-us/library/ff628971.aspx.

Also, review any documentation available for each Service Application

to determine its specific availability for log shipping or lack thereof.

Content databases. You

can log-ship SharePoint content databases to a secondary server. You

can also attach them to a standby SharePoint farm for limited read-only

viewing, if you restore them using the Restore to Standby mode. Depending on

how the standby farm is set up, some functions such as search, user

profiles, and people search may not be available without some extra

configuration efforts.
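The list above can be condensed into a small lookup table. The following Python sketch is illustrative only: the category labels and the `can_log_ship` helper are hypothetical names chosen for this example, not SharePoint or SQL Server identifiers, and Service Application databases are deliberately marked as needing a per-application check.

```python
from enum import Enum


class Eligibility(Enum):
    NO = "do not log-ship"
    YES = "can be log-shipped"
    CHECK = "check the guidance for the specific Service Application"


# Hypothetical category labels summarizing the list above.
LOG_SHIPPING_ELIGIBILITY = {
    "configuration": Eligibility.NO,          # farm specific
    "search": Eligibility.NO,                 # tied to index files on the crawl server
    "service_application": Eligibility.CHECK, # varies by Service Application
    "content": Eligibility.YES,               # can also back a read-only standby farm
}


def can_log_ship(category: str) -> Eligibility:
    """Return the guidance for a database category.

    Unknown categories fall back to CHECK so that nothing is
    log-shipped without a review of its documentation.
    """
    return LOG_SHIPPING_ELIGIBILITY.get(category, Eligibility.CHECK)
```

Defaulting unknown categories to `CHECK` reflects the point made about custom Service Applications: when in doubt, consult the documentation before you log-ship.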

As you can see in the list, not

every type of SharePoint database is highly available through SQL Server

log shipping. This directly influences how you should use log shipping

to implement HA for your SharePoint farm’s databases, because you can’t

simply switch over to your secondary log-shipped databases if your

primary databases are lost. You can take two approaches when using SQL

Server log shipping with SharePoint: creating standalone secondary

clones of your Service Application and content databases or creating a

full standby SharePoint farm based on your log-shipped Service

Application and content databases. Because you cannot make your

configuration and search databases highly available via log shipping,

you must build a new farm to host the log-shipped Service Application

and content databases to restore your environment to its users.

The first option means that

you are not going to build a new farm until a disaster occurs, but it

does reduce your startup time because the content is preserved in a

separate database host and ready to be reintroduced back into the farm.

If an outage hits a single database, it gives you a running resource to

add back into your farm. Because this option takes more time to

use in a recovery scenario, it requires that you have greater

leeway in terms of your recovery time objective (RTO) for your

SharePoint farm. The second option allows you to have a full, up-to-date

replacement available for your farm in the case of a catastrophic

event, shortening the time that your environment is unavailable to your

users and allowing you to meet a much smaller RTO window.

Building a standby

SharePoint farm provides a system for the log-shipped Service

Application and content databases to be integrated into and gives you a

fallback option if a disastrous event should befall your primary

production SharePoint farm. It also gives you a read-only environment

where users can view data or run reports without impacting the

performance of your production farm. (Keep in mind that this may

influence how the platforms in your standby farm are licensed.) You can

use the following steps as a guide to build your own standby SharePoint

farm.

Configure log shipping for each database selected to be replicated into the standby farm using the Restore to Standby mode.

Install

SharePoint in the standby farm, using the SQL Server database instance

hosting the log-shipped databases as the database host for the standby

farm.

Note

If

possible, use PowerShell (you can also use VBScript or another

compatible scripting language, but others are not as ... powerful ... as

PowerShell, if you get our drift) to script the installation and

configuration of both your primary and standby SharePoint farms. This

gives you a much higher probability of creating identical deployments in

both environments, which in turn gives you a higher probability of

success for your log-shipping configuration. In general, take special

care to apply the same patches, hotfixes, or updates to the operating

systems, SharePoint, and SQL Server in the standby farm as have been

applied to your production farm. You must build the standby farm to the

same SharePoint version as your production farm. If your production farm

has any custom code or language packs installed, also install them to

the standby farm.

Configure

the standby farm to match the setup of the production farm. If you have a Service Application configured in your

production farm, you must either create a new Service Application to

match it or restore a backup of the Service Application from the

production farm into the standby farm so you can be certain that the

configuration matches exactly. (See the previously referenced document

on Microsoft’s TechNet Web site for information on how to configure

specific Service Applications, as well as the product document for the

Service Application itself.) Although you should configure search in the

standby farm, disable any search crawls unless you specifically need

them. Confirm that the standby farm’s MySite configuration matches that

of the production farm.

Build

new standby Web applications for each Web application in your

production farm, adding the log-shipped content databases to each new Web application.

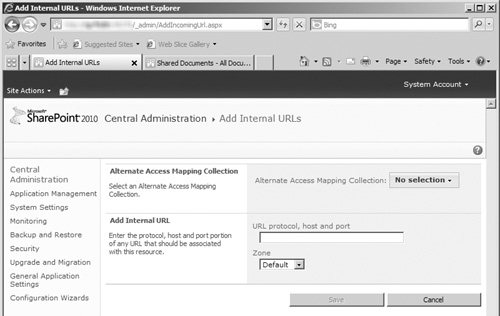

In the standby farm, configure an alternate access mapping (AAM) that points to the URL of your production farm; see Figure 9 for an example of the Add Internal URLs page.

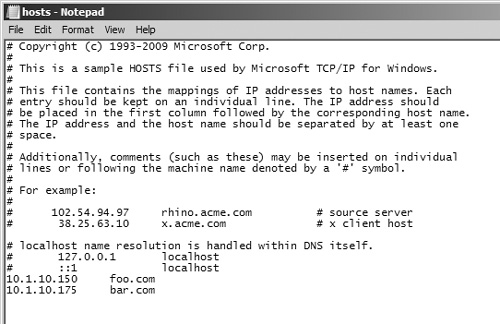

On

the file system of all the Web front-end (WFE) servers in the standby

farm, open the server’s Hosts file (typically located at %WINDIR%\system32\drivers\etc\). See Figure 10

for an example. Then add an entry pointing the production farm’s URL at

the server’s local loopback IP address, 127.0.0.1. This ensures that

any requests for the production farm that originate on the local server

are directed back to the local server, not a server in your production

farm.

If

you are using SharePoint Server 2010, Search Server 2010, or Search

Server 2010 Express, in the standby farm open the Search content source

named “Local Office Server SharePoint Sites” for editing. Remove any

URLs that refer to local servers in the standby farm or the URL of the

standby farm, and replace them with the URL of the production farm.

Tip

Unless

you have a specific need to make search queries in the standby farm,

try to ensure that no crawls are scheduled to run in the farm until it

is needed in the case of a failover. This reduces the resources that the

standby environment uses and makes it easier to configure search for

the proper targets should a failover occur.
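The first step of the procedure, restoring shipped logs in standby mode, comes down to a `RESTORE LOG ... WITH STANDBY` statement on the secondary instance. Log shipping's restore job normally performs the restore for you once standby mode is selected, so treat the following Python sketch as an illustration of what that option produces rather than something you must run by hand. The database name, backup path, and undo file path are hypothetical.

```python
def quote_ident(name: str) -> str:
    """Bracket-quote a SQL Server identifier, escaping closing brackets."""
    return "[" + name.replace("]", "]]") + "]"


def quote_literal(value: str) -> str:
    """Quote a Unicode string literal, escaping single quotes."""
    return "N'" + value.replace("'", "''") + "'"


def restore_log_standby_sql(database: str, log_backup: str, undo_file: str) -> str:
    """Build a T-SQL statement that restores one log backup in standby mode.

    WITH STANDBY leaves the database read-only between restores and
    records uncommitted work in the undo file so later logs can still
    be applied.
    """
    return (
        f"RESTORE LOG {quote_ident(database)} "
        f"FROM DISK = {quote_literal(log_backup)} "
        f"WITH STANDBY = {quote_literal(undo_file)};"
    )


# Hypothetical names and paths, for illustration only.
statement = restore_log_standby_sql(
    "WSS_Content",
    r"\\backup01\logship\WSS_Content_0001.trn",
    r"D:\Standby\WSS_Content_undo.dat",
)
print(statement)
```

Because the database stays read-only between restores, the standby farm can serve content from it, which is what makes the limited read-only viewing described earlier possible.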

Your

standby farm is now ready to be used as a read-only copy of your

production farm that can be failed over to in case of an outage or

disaster.
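The Hosts file step in the procedure above lends itself to scripting. The following Python sketch only builds the new file text and leaves writing it to disk to you; the host name `portal.contoso.com` is a hypothetical stand-in for the host name in your production farm's URL.

```python
LOOPBACK = "127.0.0.1"


def hosts_entry(production_host: str) -> str:
    """Format one Hosts file line pointing a host name at the loopback address."""
    return f"{LOOPBACK}\t{production_host}"


def add_hosts_entry(hosts_text: str, production_host: str) -> str:
    """Return Hosts file text that contains a loopback entry for the host.

    The text is returned unchanged if an equivalent entry already
    exists, so the function is safe to run repeatedly.
    """
    wanted = production_host.lower()
    for line in hosts_text.splitlines():
        fields = line.split("#", 1)[0].split()  # ignore comments
        if len(fields) >= 2 and fields[0] == LOOPBACK:
            if wanted in (name.lower() for name in fields[1:]):
                return hosts_text
    separator = "" if hosts_text.endswith("\n") or not hosts_text else "\n"
    return hosts_text + separator + hosts_entry(production_host) + "\n"


# Hypothetical host name, for illustration only.
updated = add_hosts_entry("127.0.0.1 localhost\n", "portal.contoso.com")
```

Run the same script on every WFE server in the standby farm so that each one resolves the production URL to itself.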

Caution

Once your standby farm is

created, be careful to duplicate every configuration change or update

that you make to your primary SharePoint farm to your standby farm. If

the two environments are not kept in sync, you risk displaying

inconsistent content to your users or worse, breaking your standby farm

entirely when it attempts to use your modified Service Application and

content databases that are log-shipped into it.

Although using SQL Server

log shipping as your HA solution has several benefits, it also has quite

a few drawbacks that you must consider when evaluating the approach.

This is not to say that log shipping is or is not a good solution. Our

main caution is that you pay close attention to the items that follow

and determine how they relate to your environment, needs, and

limitations. You may find that log shipping fits you like a glove, or

you may find that one of the other HA solutions in this chapter is what

you need to bring long-term stability to your database environment.

Log-Shipping Pros

Log shipping may be the

right HA solution for your environment for a variety of reasons. The

following list outlines its positive attributes. Take a look to see if

it meets your needs.

Independence.

The jobs used to log-ship a database are not tied to SharePoint, nor

are they impacted by any other processes in the SQL Server database

instance. This means that changes to your SharePoint configuration or

its databases do not directly impact or harm your log-shipping

procedures.

Cost effectiveness.

Unlike some other HA solutions (such as clustering), log shipping does

not require high-priced components and (as noted earlier) can be

implemented for the costs that may or may not be associated with

provisioning and licensing an additional SQL Server instance.

Highly configurable nature.

As described earlier, the large number of options and configurations that can be

set for log shipping allows it to meet the needs of your environment.

Read-only availability.

If you want, you can create a read-only version of your SharePoint

environment using its log-shipped content databases for research or

reporting purposes to reduce the load placed on your primary farm.

Low impact on performance. Once

the transaction logs of your SharePoint database are backed up, the

log-shipping process is executed on the server(s) hosting the secondary

database and has no effect on the performance of your primary database

server.

Unlimited use.

You can log-ship as many databases in an instance as you want; the

platform imposes no hard limit. (Keep in mind that you may still

encounter limits imposed by the capacity of your hardware or network

infrastructure.)

Use of backups.

The transaction log backups that the log-shipping process uses to

update the secondary database can restore the primary database to a

previous point in time as necessary. This means that you can make your

database highly available and implement a backup/restore solution for

it at the same time, an option not available with SQL Server’s other HA

solutions. In this situation, it is still necessary to perform full

backups of your database over time; otherwise, a restore must replay

every transaction log backup taken since the original full backup, which

is feasible but takes much longer.

Capture completeness.

Because SQL Server records information about a database update to the

database’s transaction log before it even writes it to the database, all

the requested database modifications received by SQL Server leading up

to the moment of an outage are copied over to the secondary server and

written to that database.

Distribution and redundancy.

By requiring a secondary database instance to host your secondary

database, log shipping makes your system more highly available by

providing fallback options for your primary database server. The ability

to ship database logs to multiple secondary database instances means

that you can further limit your risk by increasing the number of

fallback options you have available.

Geographic redundancy.

Log shipping does not face the distance limitations that come with

database mirroring or failover clustering, allowing copies of your

databases to be distributed to remote locations for true redundant

protection of your data from large-scale disasters.

FILESTREAM compatibility.

SharePoint databases configured to use SQL Server’s FILESTREAM

provider for Remote Binary Large Object (BLOB) Storage (RBS) can be

log-shipped to a standby database instance for preservation. You can

log-ship databases that use third-party RBS providers if the provider supports it.
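To see why periodic full backups matter when you reuse log-shipping backups for point-in-time restores, consider which log backups a restore has to replay. The following Python sketch assumes an unbroken log chain and treats each log backup as covering all changes up to the moment it was taken; the `restore_chain` helper and the timestamps are hypothetical.

```python
from datetime import datetime


def restore_chain(full_backup_at, log_backups_at, target):
    """Return the log backups needed to recover to `target`, oldest first.

    Every log backup taken after the full backup must be restored in
    order, up to and including the first one taken at or after the
    target time (that last one would be restored only up to the target).
    """
    if target < full_backup_at:
        raise ValueError("target predates the full backup")
    chain = []
    for taken_at in sorted(t for t in log_backups_at if t > full_backup_at):
        chain.append(taken_at)
        if taken_at >= target:
            return chain
    raise ValueError("no log backup covers the target time")


# Hypothetical schedule: hourly log backups on a single day.
logs = [datetime(2024, 1, 1, hour) for hour in (1, 2, 3, 4)]
target = datetime(2024, 1, 1, 2, 30)

old_full = restore_chain(datetime(2024, 1, 1, 0), logs, target)
recent_full = restore_chain(datetime(2024, 1, 1, 2), logs, target)
```

With the older full backup the restore replays three log backups; with the more recent one it replays a single log backup, which is the saving that regular full backups buy you.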

Log-Shipping Cons

As with most

technology solutions, log shipping in SQL Server 2008 is not a perfect

solution. Review the following list to see where it falls short and how

that might affect your SharePoint environment.

Manual failover.

Out of the box, SQL Server does not automatically fail a system over to

the log-shipped secondary database if the primary database goes down.

Although it is true that log shipping does have a third server role—the

monitor role—that role only tracks the status of

log-shipping operations; it cannot make the log-shipping database

instance a primary if something happens to the original primary

instance. You can do additional configuration to automate this process,

but by default you must manually switch over to the log-shipped

databases. This can impact the time it takes to restore your system

after an outage, depending on how quickly your IT staff is notified of

the outage and what availability they have to restore the system to the

log-shipped databases.

Latency.

Updates are not immediately copied to the secondary database when they

are made in the primary database. Several factors can affect the time it

takes for them to make it over to the secondary database, including

these: the frequency with which your transaction logs are backed up, the

size of those logs, and the bandwidth available between the primary and

secondary databases. The data in your secondary database is not going

to be up to date until the transaction logs are copied to it and

restored, which can impact the content of a standby farm. Because log

shipping does not update in real-time, you cannot use it to restore a

database to the point in time immediately prior to a failure. If your

organization’s recovery point objective (RPO) and RTO requirements for

SharePoint mandate instantaneous failover with no lost transactions or

data, log shipping is not a viable HA solution for your SQL Server

environment.

Poor status visibility.

Although the log-shipping process generates status reports for all its

actions and allows for the configuration of a monitoring server, this

information is not going to be easily available. You can access these

reports only by logging on to the server where they are stored; the

reports only raise alerts to operators of the associated SQL Server

instances when they log into the instances. Additional custom measures

or the use of a monitoring platform such as the Operations Manager

platform from Microsoft is going to be required to make this information

available to your SharePoint administrators or to automatically deliver

the alerts as they occur without requiring administrators to log into a

system.

Not a complete solution.

As previously mentioned, you are not able to log-ship all your

SharePoint databases, requiring additional steps such as building a

whole new farm or creating a standby farm to use the log-shipped

databases in the case of a disaster.

Errors and data loss.

Any errors that are written to your primary databases are also

transferred to your secondary databases via log shipping. Log shipping

does not prevent the loss of data due to accidental deletion; if data is

deleted in the primary database, it is also deleted in the secondary

database once the transaction is log-shipped over.
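The latency factors listed above (backup frequency, log size, and bandwidth) can be combined into a rough worst-case estimate of how far a secondary database may trail the primary. The following Python sketch is a simplification under stated assumptions: it ignores the schedules of the copy and restore jobs, which add further delay, and the function names and figures are hypothetical.

```python
def copy_seconds(log_size_mb: float, bandwidth_mbps: float) -> float:
    """Time to copy a log backup, given its size in megabytes and link speed in megabits/s."""
    return log_size_mb * 8 / bandwidth_mbps


def worst_case_lag_seconds(
    backup_interval_s: float,
    log_size_mb: float,
    bandwidth_mbps: float,
    restore_s: float = 0.0,
) -> float:
    """Estimate the longest time a committed change can wait to reach the secondary.

    A change made just after one log backup waits a full interval for
    the next backup, then for that backup to be copied and restored.
    """
    return backup_interval_s + copy_seconds(log_size_mb, bandwidth_mbps) + restore_s


# Hypothetical figures: 15-minute backups, 200 MB logs, 100 Mbps link, 30 s restore.
lag = worst_case_lag_seconds(900, 200, 100, restore_s=30)
```

If the estimate exceeds the data loss your RPO allows, you need a shorter backup interval, more bandwidth, or a different HA solution.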

If the features and

functionality of log shipping in SQL Server 2008 seem appealing but you

still have concerns about some or all of the drawbacks to the process,

have no fear. There are other alternatives when it comes to HA

for SQL Server, and the next one on the list, database mirroring,

offers several enhancements to log-shipping’s feature set while also

improving on its weaknesses. (Keep in mind that database mirroring comes

with its own set of weaknesses and drawbacks.) Although the two options

are similar, there are definitely some differences between the

two, especially when it comes to the increased cost of implementing

database mirroring. In addition, you can implement both log shipping and

database mirroring for your SharePoint environment, giving you the best

of both worlds.

This isn’t to say that log

shipping is necessarily inferior to the other HA solutions available for

SQL Server: database mirroring or failover clustering. Microsoft has

designed these solutions to offer you a range of flexible and

configurable options to meet your environment’s specific needs, and

log-shipping can play an important role in your disaster recovery

design. Log shipping allows you to meet shorter RTOs than normal SQL

Server backups, because you already have a copy of your databases up and

running in a SQL Server environment, at a much lower cost than

mirroring or clustering thanks to its less expensive infrastructure

requirements. Log shipping also enables you to provide broader

protection of your environment, because logs can be shipped to multiple

locations and to diverse geographic locations. Finally, log shipping is

attractive because you can use it with a much broader range of SQL

Server functionality, such as RBS.