With just about everything involving computers, there are two stages: planning and implementing. So far, I have discussed some of the more basic aspects of planning, a fair share of which you may already be familiar with. The following sections will address some of the concerns you'll face with topological implementation in the modern-day workplace: issues relating to where you put the servers and how you connect them to each other.

The Microsoft MCITP enterprise administrator exam will test two things: your knowledge of design logic and your ability to make the right decision at the right time. With regard to physical topology decisions, this usually means choosing the right type of server to be connected at the right place, running the right services and features. Accordingly, I'll start with a brief discussion of the physical characteristics of the environment that you have to consider, then discuss WAN considerations, and finally discuss specific server roles that require attention by administrators, such as the global catalog.

1. Restrictions

When an administrator says that they have a "restriction," it doesn't mean they're bound by the rules of emissions or dumping waste (although that beast does wander into our backyards occasionally). Instead, it usually means that something about the campus design imposes a physical limitation that hinders the speed of the data. These limitations are usually the result of geography, obstructions, or inherited design.

1.1. Geography

If parts of your

environment are separated by great distances, you will most likely have a

slow WAN link that connects your offices. Today this is less of a

problem. As of 2008, even home users can purchase 20-megabit up and down

connections for their own personal use. That is an astonishing amount

of data. However, even with the fastest WAN connections, the rule of

thumb when it comes to distance still applies. If it's far away, it's

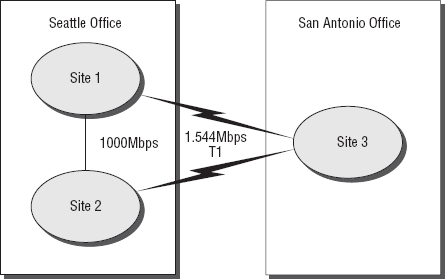

probably going to be slow. Consider Figure 1.

In this example, you have three offices; two are located in the same

building in Seattle, and one is located in San Antonio. Obviously, the

connection in San Antonio is going to be limited by its T1 connection.
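To put rough numbers on that limitation, the following minimal sketch compares ideal transfer times over a T1 line (1.544Mbps), the 20-megabit connection mentioned earlier, and a 100Mb LAN. The 100MB payload and the LAN figure are assumptions chosen purely for illustration, and the calculation ignores latency and protocol overhead.

```python
# Nominal line rates in megabits per second (Mbps).
T1_MBPS = 1.544        # standard T1 line rate
HOME_2008_MBPS = 20.0  # the 20-megabit connection mentioned in the text
LAN_MBPS = 100.0       # assumed Fast Ethernet LAN, for comparison only


def transfer_seconds(size_megabytes: float, link_mbps: float) -> float:
    """Ideal time to move a payload over a link (no latency, no overhead)."""
    if link_mbps <= 0:
        raise ValueError("link speed must be positive")
    size_megabits = size_megabytes * 8  # 1 byte = 8 bits
    return size_megabits / link_mbps


if __name__ == "__main__":
    payload_mb = 100  # hypothetical 100MB payload
    links = [("T1", T1_MBPS), ("20Mb", HOME_2008_MBPS), ("100Mb LAN", LAN_MBPS)]
    for name, rate in links:
        print(f"{name:>10}: {transfer_seconds(payload_mb, rate):8.1f} s")
```

Under these assumptions, the same payload that crosses the LAN in seconds takes several minutes over the T1, which is why the placement of servers relative to slow links matters so much.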

1.2. Obstructions

Have you ever been asked to install a server in one location and connect to another and then been blocked by a giant wall that is 8 feet thick? If you haven't, you're the single luckiest administrator on the planet, or you simply haven't been in IT long enough to know the joys of dealing with the other kind of architecture. This is usually more of a building administration problem than an IT problem; however, it's good to note this, because it is a physical limitation.

1.3. Inherited Design

This is, by far, the most frustrating of all limitations. The dreaded inherited design occurs when you are trying to either upgrade an environment or rework the topology of an environment around a preexisting wiring system or topology design that has a serious bottleneck.

Here's a simple example of this: the last network engineer to work on the environment decided it would be a good idea to use only 100Mb switches instead of gigabit ones. After all, they're cheaper. But what happens when you have 100 users trying to access the same file? You're then extremely limited by how fast the server can communicate. Even though it might be capable of gigabit (or even 10Gb) performance, you're still stuck at 100Mb.

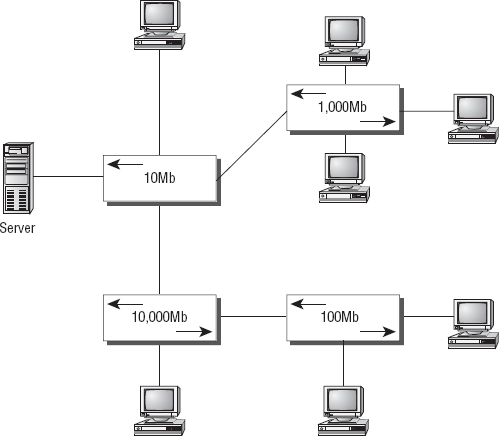

On the exam, this might sneak by you if you're not careful. Consider Figure 2, which shows a simple campus design. If you aren't paying close attention, it looks like a simple star topology design. However, the bottleneck of this entire environment is a relatively ancient switch. This small element can affect the entire network.
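The bottleneck reasoning can be expressed as simple arithmetic: a path is only as fast as its slowest link, and concurrent users share whatever that link provides. The sketch below is a simplified model with hypothetical function names; it assumes an even split between users and ignores overhead.

```python
from typing import Sequence


def path_bottleneck_mbps(link_speeds_mbps: Sequence[float]) -> float:
    """Usable speed of a path is the speed of its slowest link."""
    if not link_speeds_mbps:
        raise ValueError("a path needs at least one link")
    return min(link_speeds_mbps)


def fair_share_mbps(bottleneck_mbps: float, concurrent_users: int) -> float:
    """Even split of the bottleneck among concurrent users (idealized)."""
    if concurrent_users < 1:
        raise ValueError("need at least one user")
    return bottleneck_mbps / concurrent_users


if __name__ == "__main__":
    # Gigabit server NIC -> inherited 100Mb switch -> gigabit client NIC
    path = [1000.0, 100.0, 1000.0]
    bottleneck = path_bottleneck_mbps(path)
    print(f"Path bottleneck: {bottleneck} Mbps")
    print(f"Share per user with 100 users: {fair_share_mbps(bottleneck, 100)} Mbps")
```

With the 100-user example from the text, each user is left with roughly 1Mbps no matter how fast the server itself is.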

2. Placing Domain Controllers

The domain controller is the heart of your entire organization. It's the main location from which your users log in, and it also usually holds a copy of the global catalog, operations master roles, or other vital information that makes the domain controller not only the heart of your organization but several other very important organs as well.

In choosing the location for a domain controller, you should keep in mind several criteria:

Security

Security is your primary concern. Since a domain controller contains so much information, you have to make sure it is not just secure regarding software but also is physically secure. Generally, this means you want to make sure the domain controller is in a safe location, such as a data center, and that a hostile intruder couldn't easily compromise your server.

Accessibility

In terms of accessibility, you want to make sure your staff can reach this server to administer it and that it also has adequate remote accessibility. Can it easily be accessed by all administrators? Are there any conflicts with the firewall or the router?

Reliability

Reliability by now is probably a given. Best practices for domain controllers indicate you should always have at least one backup domain controller in an environment, and the backup controller should be ready to take over at a moment's notice.

Performance

Performance, likewise, is very important. Microsoft likes to test candidates on the specific hardware requirements of Windows Server 2008 in more ways than one. In addition to requiring you to know the basic requirements, they want you to know how much memory and disk space a domain controller will require based on the number of users. Table 1 breaks memory usage down into an easy-to-read format, and Table 2 shows how to calculate the recommended disk space, according to Microsoft.

Table 1. Domain Controller Memory Requirements

| Users per Domain Controller | Memory Requirements |
|---|---|
| 1–499 | 512MB |
| 500–999 | 1GB |
| More than 1,000 | 2GB |

Table 2. Domain Controller Space Requirements

| Server Requirement | Space Requirement |
|---|---|
| Active Directory transaction logs | 500MB |
| Sysvol share | 500MB |
| Windows Server 2008 operating system | 1.5 to 2GB; 2GB recommended |
| Intervals of 1,000 users | 0.4GB per 1,000 users for the drive with NTDS.dit |
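As a rough worked example, the figures in Table 1 and Table 2 can be turned into a small calculator. This is only a sketch under stated assumptions: the function names are hypothetical, exactly 1,000 users is treated as belonging to the top memory tier, partial intervals of 1,000 users are rounded up, 500MB is counted as 0.5GB, and all space requirements are summed even though the components may live on different drives.

```python
import math


def recommended_memory_mb(users: int) -> int:
    """Memory per domain controller, following Table 1."""
    if users < 1:
        raise ValueError("users must be at least 1")
    if users <= 499:
        return 512
    if users <= 999:
        return 1024
    # Assumption: exactly 1,000 users falls into the top tier.
    return 2048


def recommended_disk_gb(users: int, os_gb: float = 2.0) -> float:
    """Total disk space in GB, following Table 2."""
    if users < 1:
        raise ValueError("users must be at least 1")
    intervals = math.ceil(users / 1000)  # assumption: round partial intervals up
    fixed_gb = 0.5 + 0.5 + os_gb         # transaction logs + Sysvol + operating system
    ntds_gb = 0.4 * intervals            # drive holding NTDS.dit
    return round(fixed_gb + ntds_gb, 1)


if __name__ == "__main__":
    for count in (250, 750, 2500):
        print(count, recommended_memory_mb(count), "MB RAM,",
              recommended_disk_gb(count), "GB disk")
```

For 2,500 users, for instance, this works out to three intervals of 0.4GB on top of the 3GB of fixed requirements.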

You will also have to plan the processing power of your domain controllers according to the number of users, as shown in Table 3.

Table 3. Domain Controller Processor Requirements

| Number of Users | Processor Requirements |
|---|---|
| 1–499 | One single processor |
| 500–999 | One dual processor |
| 1,000–2,999 | Two dual processors |
| 3,000–10,000 | Two quad processors |
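Table 3 is a tiered lookup, so it can be sketched as a short list of upper bounds. The names below are hypothetical, and because the table stops at 10,000 users, the sketch raises an error beyond that point rather than guessing.

```python
# (upper bound of the tier, recommendation) pairs taken from Table 3.
PROCESSOR_TIERS = [
    (499, "One single processor"),
    (999, "One dual processor"),
    (2999, "Two dual processors"),
    (10000, "Two quad processors"),
]


def recommended_processors(users: int) -> str:
    """Return the Table 3 processor recommendation for a user count."""
    if users < 1:
        raise ValueError("users must be at least 1")
    for upper_bound, recommendation in PROCESSOR_TIERS:
        if users <= upper_bound:
            return recommendation
    # Table 3 gives no guidance past 10,000 users.
    raise ValueError("Table 3 does not cover more than 10,000 users")


if __name__ == "__main__":
    for count in (100, 800, 1500, 6000):
        print(count, "->", recommended_processors(count))
```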

3. The Global Catalog

As you may remember from the 70-640 MCTS Windows Server 2008 Active Directory exam, a global catalog server is a server that contains a master list of all the objects in a domain or forest. The global catalog itself is the master list, and it is transmitted across servers for the purpose of informing individual machines throughout the environment of what objects actually exist and, more importantly, where they can be found. The Sybex 70-640 book Windows Server 2008 Active Directory Configuration Study Guide calls this list the "universal phone book" of Active Directory. Not only is that pretty clever, it's also very accurate.

The global catalog serves two more functions. First, it enables users to log on, because it supplies the authenticating domain controller with universal group membership information. Second, it resolves user principal names of which a particular domain controller may not be aware.
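The "phone book" idea and the two functions just described can be illustrated with a toy lookup table. This is purely a conceptual sketch: the class names, the sample user, and the data layout are hypothetical and do not reflect how Active Directory actually stores or replicates the global catalog.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, Optional


@dataclass(frozen=True)
class CatalogEntry:
    upn: str                          # user principal name, e.g. user@example.com
    domain: str                       # domain that holds the full object
    universal_groups: FrozenSet[str]  # universal group memberships


class ToyGlobalCatalog:
    """Forest-wide index: what exists and where it can be found."""

    def __init__(self) -> None:
        self._entries: Dict[str, CatalogEntry] = {}

    def publish(self, entry: CatalogEntry) -> None:
        self._entries[entry.upn.lower()] = entry

    def resolve_upn(self, upn: str) -> Optional[str]:
        """Return the domain that owns this UPN, or None if unknown."""
        entry = self._entries.get(upn.lower())
        return entry.domain if entry else None

    def universal_groups(self, upn: str) -> FrozenSet[str]:
        """Universal group memberships needed at logon."""
        entry = self._entries.get(upn.lower())
        return entry.universal_groups if entry else frozenset()


if __name__ == "__main__":
    gc = ToyGlobalCatalog()
    gc.publish(CatalogEntry("maria@example.com", "domainA",
                            frozenset({"All Staff"})))
    print(gc.resolve_upn("Maria@Example.com"))
    print(sorted(gc.universal_groups("maria@example.com")))
```

A domain controller in another domain that has never seen this user can still find out which domain owns the account and which universal groups apply, which is the essence of both functions.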

3.1. Global Catalog Server Locations

Deciding which server is going to contain your global catalog is one of the most important decisions you will make when you are beginning to design a network. Depending on its location, it can directly affect the speed of your site replication, the amount of time your servers spend updating themselves with the latest objects, and how quickly the rest of the environment becomes aware of changes.

By default, the first domain controller in the first domain of a forest is always a global catalog server. This is because if a forest didn't have a copy of the global catalog, it really wouldn't achieve much, because no credentials would be cached and it wouldn't have a list of what user accounts existed. Beyond the initial global catalog server, here are a few other reasons you might want to add a global catalog server: users of custom applications, unavailable WAN links, and roaming users.

4. Operations Master Location

Just like the global catalog server location, the operations master location is one of the most important design decisions you will have to make when creating your network infrastructure. However, unlike the global catalog server, the operations master server is broken down into five separate roles that have to be considered.

4.1. Schema Master

If you have a choice in the matter, the best decision for the schema master is to use it as little as possible. Modifying the schema isn't something you want to do very often, because it tends to be very heavy-handed and can cause a lot of problems if you aren't careful. When placing the schema master, the main thing you have to keep in mind is the location of your schema administrators. They will be the sole beneficiaries of this location, and therefore you need to plan accordingly.

4.2. Domain Naming Master

In the old days of computing (the Windows 2000 era), the domain naming master was always placed on the global catalog server. With Windows Server 2008, this is no longer a requirement, but it's still considered a very good practice. The domain naming master is responsible for making sure that every domain is uniquely named, and it is usually contacted only when domains are added or removed.

4.3. Relative Identifier Master (RID Master)

If you'll recall from your earlier study, the relative identifier (RID) and security identifier (SID) are used to identify objects uniquely within Active Directory. Whenever accounts or groups are created, new RIDs are needed, and those ultimately come from the RID master. Therefore, it's a good design practice to place the RID master in an area that has access to domain controllers and can be easily communicated with whenever an administrator needs to make a new account.
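In practice, domain controllers obtain RIDs from the RID master in blocks rather than one at a time, and each new SID is the domain's SID with a RID appended. The toy model below sketches that relationship. The class names, the starting RID, the pool size, and the domain SID are all illustrative assumptions, not values read from a real domain.

```python
from typing import Iterator


class ToyRidMaster:
    """Hands out non-overlapping blocks of RIDs to domain controllers."""

    def __init__(self, first_rid: int = 1000, pool_size: int = 500) -> None:
        self._next_rid = first_rid   # illustrative starting point
        self._pool_size = pool_size  # illustrative block size
        self.pools_issued = 0

    def allocate_pool(self) -> range:
        pool = range(self._next_rid, self._next_rid + self._pool_size)
        self._next_rid += self._pool_size
        self.pools_issued += 1
        return pool


class ToyDomainController:
    """Builds SIDs locally and contacts the RID master when its pool is empty."""

    def __init__(self, domain_sid: str, rid_master: ToyRidMaster) -> None:
        self.domain_sid = domain_sid
        self._rid_master = rid_master
        self._pool: Iterator[int] = iter(())

    def new_sid(self) -> str:
        rid = next(self._pool, None)
        if rid is None:  # pool exhausted: the RID master must be reachable
            self._pool = iter(self._rid_master.allocate_pool())
            rid = next(self._pool)
        return f"{self.domain_sid}-{rid}"


if __name__ == "__main__":
    master = ToyRidMaster(pool_size=2)
    sid_prefix = "S-1-5-21-1111111111-2222222222-3333333333"  # hypothetical
    dc_a = ToyDomainController(sid_prefix, master)
    dc_b = ToyDomainController(sid_prefix, master)
    print(dc_a.new_sid(), dc_b.new_sid(), dc_a.new_sid(), dc_a.new_sid())
```

Because each block is handed out only once, two domain controllers can never mint the same SID, which is why the RID master needs to stay reachable from every domain controller that creates accounts.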

4.4. Infrastructure Master

You can think of the infrastructure master in your environment as the person who makes sure everyone has everything named right. The best example of this is when someone gets married or has their name legally changed. Say a user changes her name from Maria Dammen to Maria Anderson. If she has her name changed in domain A, there is a chance that domain B may not be aware of this change and may need to be informed about it. Accordingly, something in the infrastructure has to search through everything (including network entities as well as users) and check the names and consistency.

The primary rule for placing an infrastructure master is to not place it on any machine that is a global catalog server. This is because when the global catalog server checks with other domains, it will not notice the inconsistencies between the two domains, because it's already aware of what they should be. Instead, you should try to place the infrastructure master on a domain controller that is not a global catalog server but has access to a domain controller from another domain.
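The placement rules covered so far lend themselves to a simple checklist. The sketch below flags an infrastructure master that sits on a global catalog server and notes when the domain naming master is not on one, following the guidance given above. The data structure, role strings, and server names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class DomainController:
    name: str
    is_global_catalog: bool
    roles: Set[str] = field(default_factory=set)


def placement_warnings(controllers: List[DomainController]) -> List[str]:
    """Check role placement against the rules of thumb in this section."""
    warnings = []
    for dc in controllers:
        if "infrastructure master" in dc.roles and dc.is_global_catalog:
            warnings.append(
                f"{dc.name}: infrastructure master is on a global catalog server"
            )
        if "domain naming master" in dc.roles and not dc.is_global_catalog:
            warnings.append(
                f"{dc.name}: domain naming master is not on a global catalog server"
            )
    return warnings


if __name__ == "__main__":
    design = [
        DomainController("DC1", True, {"schema master", "domain naming master"}),
        DomainController("DC2", True, {"infrastructure master"}),
        DomainController("DC3", False, {"RID master"}),
    ]
    for message in placement_warnings(design):
        print(message)
```

In this hypothetical design, only DC2 is flagged; moving the infrastructure master to DC3 would clear the warning.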

4.5. Primary Domain Controller

Maybe it's just a holdover from the days of old, but to this day when certain administrators hear the phrases primary domain controller and backup domain controller from their friend Windows NT 4, they shiver a little bit. This is because the world of Windows administration was nowhere near as friendly in the early days as it is now. You don't necessarily need a lecture on how things used to be, though. Instead, as a Windows Server 2008 administrator, you just need to know how to handle old equipment.

The classic reason to rely on this role, the primary domain controller (PDC) emulator, is if you have a machine running Windows NT 4 that doesn't understand Active Directory. In this situation, the PDC emulator ensures that all older machines can still change passwords, because Windows Server 2008 emulates the older authentication process. Additionally, the PDC emulator makes sure that all machines with pre–Active Directory installations can keep their times synchronized.