Although DAGs are the headline high-availability feature for

Exchange, you need to take steps to ensure that servers offering other Exchange

roles, such as the Hub Transport, Client Access, and Edge Transport servers, will

also be available to the Exchange organization in the event that a server suffers

complete failure. Having a

Mailbox server in a site also requires that you have a Client Access server and a

Hub Transport server in the same site. Even if you have a DAG deployed, you will

still need other server roles to be highly available if you want to ensure that

messages flow in the event of server failure. In this lesson, you will learn what

steps you need to take to make Client Access servers, Hub Transport servers, and

Edge Transport servers highly available.

1. Configuring Network Load Balancing

Client Access servers and Edge Transport servers can leverage network load

balancing (NLB) as a part of their high-availability strategy. NLB distributes

traffic between multiple hosts based on each host’s current load. Each new

client is directed to the host under the least load. It is also possible to

configure NLB to send traffic proportionally to hosts within the cluster. For

example, in a cluster with four hosts, you could configure an NLB cluster to

send 40 percent of incoming traffic to one host and split the remaining 60

percent across the other three hosts. When considering high availability for

Client Access servers and Edge Transport servers, you have the option of using

the NLB feature available in Windows Server 2008 and Windows Server 2008 R2. All

editions of Windows Server 2008 and Windows Server 2008 R2 support NLB.

You can easily add nodes to and remove nodes from NLB clusters by using the Network Load

Balancing Manager console. NLB clusters reconfigure themselves automatically

when you add a node, when you remove a node, or when a node in the cluster fails. Each

node in an NLB cluster sends a message to all other nodes every second,

informing them of its status. The term for this message is

“heartbeat.” When a node fails to transmit five consecutive

heartbeat messages, the other nodes in the cluster alter the configuration of

the cluster, excluding the failed node. The term for the reconfiguration process

is “convergence.” Convergence also occurs when the heartbeat of a

previously absent node is again detected by other nodes in the cluster. You can

take an existing node in an NLB cluster offline for maintenance and then return

it to service without having to reconfigure the cluster manually because the

removal and addition process occurs automatically.

You cannot configure a server that hosts the Client Access role

and is also a member of a DAG to be a part of a Windows NLB cluster, as you cannot use

both NLB and Windows Failover Clustering concurrently. You must install the NLB

feature on each node before creating an NLB cluster. NLB detects server failure

but not application failure, so it is possible that clients can be directed to a

node on which a Client Access server component has failed.
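
As a rough sketch of the installation prerequisite described above, the NLB feature can be added from Windows PowerShell and the node state inspected once a cluster exists. This assumes Windows Server 2008 R2, where the ServerManager and NetworkLoadBalancingClusters modules are available; Windows Server 2008 (non-R2) does not include these cmdlets.

```powershell
# Run in an elevated Windows PowerShell session on each prospective node.
# Assumes Windows Server 2008 R2.
Import-Module ServerManager

# Install the NLB feature and its management tools.
Add-WindowsFeature NLB, RSAT-NLB

# Once the node belongs to a cluster, list every node and its current state.
Import-Module NetworkLoadBalancingClusters
Get-NlbClusterNode
```

Because NLB reports node state rather than application health, a node can appear healthy here even when a Client Access component on it has stopped responding.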

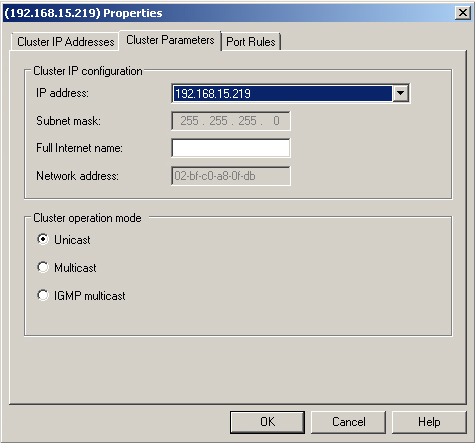

1.1. Configuring NLB Cluster Operation Mode

The cluster operation mode determines how you configure the

cluster’s network address and how that address relates to the existing

network adapter addresses. You can configure the operation mode of an NLB

cluster by editing the cluster properties, as shown in Figure 1. All nodes within a cluster must use

the same cluster operation mode. The same dialog box also displays the virtual MAC

address assigned to the cluster.

The cluster operation modes, and the differences between

them, are as follows:

Unicast Mode

When an NLB cluster is configured to work in the unicast

cluster operation mode, all nodes in the cluster use the MAC

address assigned to the virtual network adapter. NLB substitutes

the cluster MAC address for the physical MAC address of a

network card. If your network adapter does not support this

substitution, you must replace it. When nodes in a cluster have

only a single network card, this limits communication between

nodes but does not pose a problem for hosts outside the cluster.

Unicast mode works better when each node in the NLB cluster has

two network adapters. The network adapter assigned the virtual

MAC address is used with the cluster; the second network adapter

facilitates management and internode communication. Use two

network adapters if you choose unicast mode and use one node to

manage others.

Multicast Mode

Multicast mode is a

suitable solution when each node in the cluster has a single

network adapter. The cluster MAC address is a multicast address.

The cluster IP address resolves to the multicast MAC address.

Each node in the cluster can use its network adapter’s MAC

address for management and internode communication. You can use

multicast mode only if your network hardware supports multicast

MAC addressing.

IGMP Multicast Mode

This version of multicast uses Internet Group Management

Protocol (IGMP) for communication, which reduces unnecessary network

traffic because traffic for an NLB cluster passes only to those

switch ports the cluster uses, not to all switch ports. The

properties of IGMP multicast mode are otherwise identical to

those of multicast mode.
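
The operation mode can also be chosen when a cluster is created from Windows PowerShell. The following is a minimal sketch, assuming Windows Server 2008 R2 and its NetworkLoadBalancingClusters module; the adapter name, cluster name, node name, and addresses are placeholders, not values from this lesson.

```powershell
Import-Module NetworkLoadBalancingClusters

# Placeholder values: replace the adapter name, cluster name, and addresses.
# -OperationMode accepts Unicast, Multicast, or IgmpMulticast.
New-NlbCluster -InterfaceName 'NLB' -ClusterName 'cas-nlb' `
    -ClusterPrimaryIP 192.168.10.50 -SubnetMask 255.255.255.0 `
    -OperationMode Multicast

# Join a second node ('CAS02' is a placeholder) to the same cluster.
Get-NlbCluster | Add-NlbClusterNode -NewNodeName 'CAS02' -NewNodeInterface 'NLB'
```

Multicast is used here only because it suits nodes with a single network adapter; substitute Unicast or IgmpMulticast to match your network hardware.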

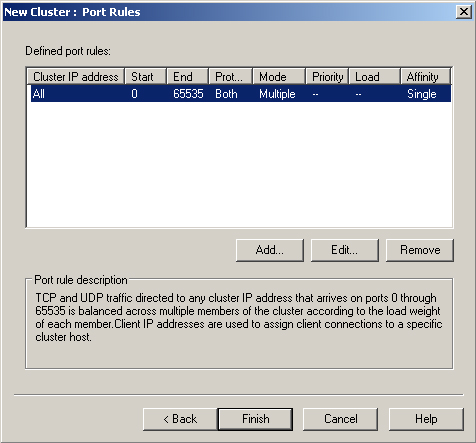

1.2. Configuring NLB Port Rules

Port rules, shown in Figure 2, control, on a

port-by-port basis, how network traffic is treated by an NLB cluster. By

default, the cluster balances all traffic received on the cluster IP address

across all nodes. You can modify this so that only specific traffic,

designated by port, received on the cluster IP address is balanced. The

cluster drops any traffic that does not match a port rule. You can also

configure the cluster to forward traffic to a specific node rather than to

all nodes, enabling the cluster to balance some traffic but not all traffic.

You accomplish this by configuring the port rule’s filtering mode. The

options are multiple host or single host.

When you configure a rule to use the multiple host filtering mode, you can

also configure the rule’s affinity property. The affinity property

determines where the cluster will send subsequent client traffic after the

initial client request. If you set the affinity property to Single, the

cluster will tie all client traffic during a session to a single node. The

default port rule, shown in Figure 3, uses the

Single affinity setting. When you set a rule’s affinity property to

None, the cluster will not bind a client session to any particular node.

When you set a

rule’s affinity property to Network, all clients from the same Class C

address range will be directed to the same cluster node. It is not necessary to

configure the affinity for a single host rule because that rule already ties

traffic to a single node in the cluster.
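
The filtering mode and affinity settings described above map onto parameters of the NLB port rule cmdlets. The sketch below assumes Windows Server 2008 R2 and a cluster adapter named 'NLB' (a placeholder); the choice of TCP port 443 is illustrative only.

```powershell
Import-Module NetworkLoadBalancingClusters

# The default rule covers every port, so remove it before adding a narrower
# rule. This may prompt for confirmation.
Get-NlbClusterPortRule | Remove-NlbClusterPortRule

# Balance HTTPS across all nodes (multiple host filtering) and keep each
# client on the same node for the duration of its session (Single affinity).
Add-NlbClusterPortRule -InterfaceName 'NLB' -StartPort 443 -EndPort 443 `
    -Protocol Tcp -Mode Multiple -Affinity Single
```

With only this rule in place, traffic arriving on any other port is dropped by the cluster, which matches the behavior described for traffic that does not match a port rule.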

You can change the load placed on each node by editing port rules on each

node of the cluster. Doing so shifts the cluster from balancing traffic equally across

all nodes to preferring one or several nodes over others. Do this

when the hardware of one or more nodes has greater capacity than that of other

nodes. You configure port rules in the practice at the end of this

lesson.
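
Per-node load can also be adjusted from Windows PowerShell. This is a tentative sketch, assuming the Set-NlbClusterPortRuleNodeWeight cmdlet and its LoadWeight parameter from the Windows Server 2008 R2 NetworkLoadBalancingClusters module; confirm the parameter names with Get-Help before relying on it.

```powershell
Import-Module NetworkLoadBalancingClusters

# Run locally on the node whose share of traffic should change.
# Load weights are relative to the sum of weights across the cluster:
# weights of 40, 20, 20, and 20 on four nodes give the first node
# roughly 40 percent of the traffic matched by the rule.
Get-NlbClusterPortRule | Set-NlbClusterPortRuleNodeWeight -LoadWeight 40
```

To reach the 40/60 split, the other three nodes would each need an explicit weight of 20 set in the same way.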

When you need to perform maintenance on a node in an NLB cluster, you can

use the Drain function to stop new connections to the node without

disrupting existing connections. When all existing connections have

finished, you can then take the node offline for maintenance. You can

drain a node by right-clicking it from within Network Load Balancing

Manager, clicking Control Ports, and then clicking Drain.
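
A comparable result can be achieved from Windows PowerShell on Windows Server 2008 R2. The sketch below uses Stop-NlbClusterNode with its Drain switch, which stops the node once existing connections have finished rather than leaving that step to you; 'CAS02' is a placeholder node name.

```powershell
Import-Module NetworkLoadBalancingClusters

# Refuse new connections, let existing ones finish, then stop the node.
# -Timeout is the number of minutes to wait before stopping regardless.
Stop-NlbClusterNode -HostName 'CAS02' -Drain -Timeout 30

# ...perform maintenance, then return the node to service.
# The cluster converges automatically when the node's heartbeat reappears.
Start-NlbClusterNode -HostName 'CAS02'
```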

2. Client Access Arrays

Client access arrays, sometimes called client access server arrays, are

collections of load-balanced Client Access servers. If one Client Access server

in a client access array fails, client traffic will automatically be redirected

to other Client Access servers in the array. Client access arrays work on a

per-site basis. A single client access array cannot span multiple sites. Client

access arrays can use Windows NLB or a hardware load-balancing solution. If you are using

Windows NLB, you will be limited to eight nodes in the array and will not be

able to also configure the server hosting the Client Access server role as a

part of a DAG.

To create a client access array, perform

the following general steps:

Configure load balancing for your Client Access servers. You can use

Windows NLB or a hardware load-balancing solution. Ensure that your load-balancing

array balances TCP port 135 and UDP and TCP ports 6005 through

65535.

Configure a new DNS record that points to the virtual IP address that

you will use for the client access array.

Use the New-ClientAccessArray cmdlet to create

the client access array. For example, if you created a DNS record for

casarray.adatum.com and you have configured load balancing for Client

Access servers in the Wangaratta site, use the following command to

create a client access array:

New-ClientAccessArray -Name 'Wangaratta Array' -Fqdn 'casarray.adatum.com' -Site 'Wangaratta'

Configure existing mailbox databases in the site to use the new CAS

array with the Set-MailboxDatabase cmdlet and the

RpcClientAccessServer parameter. For example, to configure MBX-DB-1 to

use casarray.adatum.com, issue the following command:

Set-MailboxDatabase MBX-DB-1 -RpcClientAccessServer 'casarray.adatum.com'
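
After completing the steps above, it can be useful to confirm the result and to apply the setting to several databases at once. The following sketch assumes the Exchange Management Shell; 'MBX01' is a placeholder Mailbox server name that does not appear in this lesson.

```powershell
# Confirm that the array exists and see which Client Access servers it contains.
Get-ClientAccessArray | Format-List Name, Fqdn, Site, Members

# Point every database hosted on one Mailbox server at the array.
# 'MBX01' is a placeholder server name.
Get-MailboxDatabase -Server 'MBX01' |
    Set-MailboxDatabase -RpcClientAccessServer 'casarray.adatum.com'

# Verify the setting across all databases.
Get-MailboxDatabase | Format-Table Name, Server, RpcClientAccessServer -AutoSize
```

Any database that still lists an individual Client Access server in the RpcClientAccessServer column is not yet using the array.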

3. Transport Server High Availability

To ensure that Hub Transport servers are highly available, deploy multiple Hub

Transport servers in each site. Deploying multiple Hub Transport servers

provides server redundancy, as messages will automatically reroute in the event

that a Hub Transport server fails. When you deploy an extra Hub Transport server

in a site, you do not need to perform any additional configuration, as

configuration data automatically replicates through Active Directory.
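
One way to check whether each site has more than one Hub Transport server is to count them by site. This is a minimal sketch for the Exchange Management Shell; it relies on the IsHubTransportServer and Site properties returned by Get-ExchangeServer.

```powershell
# Count Hub Transport servers per Active Directory site.
Get-ExchangeServer |
    Where-Object { $_.IsHubTransportServer } |
    Group-Object -Property { $_.Site.ToString() } |
    Select-Object Name, Count
```

A site that reports a count of 1 has no Hub Transport redundancy.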

There are two methods through which you can make Edge Transport servers highly

available. You can load-balance Edge Transport servers using NLB, or you can

configure multiple MX records in the external DNS namespace.

Because Windows NLB requires that hosts be members of the same Active Directory

domain, and Edge Transport servers are deployed on perimeter networks, most

Edge Transport server load-balancing solutions use hardware load balancing. You

may need to use an NLB solution if you have multiple Edge Transport servers but

have only one public IPv4 address available for incoming Simple Mail Transfer

Protocol (SMTP) traffic. In this situation, you would assign the public IPv4

address as the NLB virtual address, allowing requests to be spread across Edge

Transport servers with private IP addresses on the perimeter network.

Configuring multiple MX records in the external DNS zone uses the SMTP

protocol’s natural high-availability features. When an external SMTP

server needs to send a message to a specific mail domain, it runs a query

against the target domain’s zone looking for MX records. If the SMTP

server is unable to deliver mail to the first address returned by the MX record

query, the SMTP server then attempts delivery to other addresses returned by the

query.
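
The fallback behavior can be illustrated with a short Windows PowerShell sketch that mimics what a sending SMTP server does. The MX data below is hypothetical (the host names edge1 and edge2 do not come from this lesson), and the script only tests whether TCP port 25 is reachable; it does not send mail. It orders hosts by MX preference, lowest value first, which is the standard SMTP behavior.

```powershell
# Hypothetical MX records; a real sender obtains these from a DNS query.
$mxRecords = @(
    New-Object PSObject -Property @{ Preference = 10; Exchange = 'edge1.adatum.com' }
    New-Object PSObject -Property @{ Preference = 20; Exchange = 'edge2.adatum.com' }
)

# Try each host in order of preference until one accepts a connection.
foreach ($mx in ($mxRecords | Sort-Object Preference)) {
    $client = New-Object System.Net.Sockets.TcpClient
    try {
        $client.Connect($mx.Exchange, 25)   # SMTP port
        Write-Output "Would deliver via $($mx.Exchange)"
        break
    }
    catch {
        Write-Output "Cannot reach $($mx.Exchange); trying the next MX record"
    }
    finally {
        $client.Close()
    }
}
```

Giving two Edge Transport servers equal preference values spreads inbound mail across them, while unequal values make the higher-numbered host a backup.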

Note:

Remember that to make Hub Transport servers highly available, you need only

add additional Hub Transport servers to a site; it is not necessary to configure

NLB.