For as long as content-centric websites have been around, there has been a need to search their content. Many of the most successful dot-com businesses have been search sites such as Yahoo! and Google. Every few months a new search site opens its doors, and many of these perform aggregate searches of multiple sites simultaneously. At the other end of the spectrum, many site owners need a search capability that returns results only from their own site.

Microsoft Content Management Server (MCMS), while a very robust content management solution, does not offer any search capabilities out of the box. However, just because you have an MCMS website doesn’t mean you are stuck without search capabilities.

MCMS Search Options

There are quite a few ways to implement searching on an MCMS website, each with its own costs, implementation complexity, and limitations. Google (http://www.google.com) provides a free Web Service API against which you can submit queries, but you are limited to 1,000 searches per day, and there are licensing requirements regarding logo placement. Coveo (http://www.coveo.com) provides a free, non-expiring license for its Enterprise Search product, but it’s limited to searching 5,000 documents (searching more than 5,000 requires purchasing a license from Coveo). Mondosoft’s (http://www.mondosoft.com) MondoSearch integrates seamlessly with MCMS and offers a robust feature set, but it’s not free.

Microsoft’s enterprise portal solution, SharePoint Portal Server 2003 (SPS), contains a powerful and customizable search engine. The indexes SPS creates can be searched by submitting a Microsoft SQL Full-Text query via a Web Service. If your organization has already deployed SPS, or plans to, you can use it as your MCMS search engine. Be aware, however, that if your site is publicly accessible, this solution may be less compelling, because a SharePoint External Connector license would be required. For this reason, the SharePoint search solution we look at is typically a viable option only for intranet-based MCMS sites.
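To make the idea of submitting a SQL Full-Text query through a Web Service more concrete, here is a minimal Python sketch that builds the kind of XML request such a query travels in. It is modeled on the QueryPacket format (namespace urn:Microsoft.Search.Query, query type MSSQLFT) used by the SPS 2003 Query Service; the selected properties, the Portal_Content index in the FROM clause, and the helper name build_query_packet are assumptions to verify against the SharePoint Portal Server 2003 SDK, not a tested SPS client.

```python
import xml.etree.ElementTree as ET

# Namespace of the SPS 2003 Query Service request packet (verify against the SDK).
QUERY_NS = "urn:Microsoft.Search.Query"


def build_query_packet(term: str, index: str = "Portal_Content", count: int = 20) -> str:
    """Build a QueryPacket carrying a Microsoft SQL Full-Text (MSSQLFT) query.

    Hypothetical helper: the selected properties and the
    TABLE <index>..Scope() syntax follow SPS 2003 samples and are assumptions.
    """
    # Double any single quotes so the term stays inside the SQL string literal.
    safe_term = term.replace("'", "''")
    sql = (
        'SELECT "DAV:href", "DAV:displayname" '
        f"FROM ( TABLE {index}..Scope() ) "
        f"WHERE FREETEXT(DefaultProperties, '{safe_term}')"
    )

    packet = ET.Element("QueryPacket", xmlns=QUERY_NS)
    query = ET.SubElement(packet, "Query")
    formats = ET.SubElement(query, "SupportedFormats")
    ET.SubElement(formats, "Format", revision="1").text = (
        "urn:Microsoft.Search.Response.Document:Document"
    )
    context = ET.SubElement(query, "Context")
    # ElementTree escapes &, < and > in the SQL text when serializing.
    ET.SubElement(context, "QueryText", language="en-US", type="MSSQLFT").text = sql
    result_range = ET.SubElement(query, "Range")
    ET.SubElement(result_range, "StartAt").text = "1"
    ET.SubElement(result_range, "Count").text = str(count)
    return ET.tostring(packet, encoding="unicode")


if __name__ == "__main__":
    print(build_query_packet("orchid"))
```

The sketch deliberately stops short of the SOAP call itself; in SPS 2003 such a packet would typically be passed to the Query Service’s QueryEx method, so check the SDK for the endpoint and the full set of searchable properties.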

Microsoft SharePoint Portal Server Search

To get the most out of SharePoint Portal Server Search, you need to understand how it works and how to configure it. Before we explain how it works, you need to be familiar with a few key components:

A content source contains the information that will be indexed. Content sources can be external websites, file shares, Windows SharePoint Services sites, Microsoft Exchange public folders, or other systems, such as Lotus Notes, that provide a protocol handler for SharePoint Search.

Index files contain crawled content from one or more content sources. Aggregating and cataloging content from disparate content sources makes subsequent search queries much more efficient. Index files can also be copied, or propagated, to SharePoint Web servers for more efficient searching.

Two indexes are created by default when you create a new portal: Portal_Content and Non_Portal_Content. As the names suggest, the former contains all content stored in the portal, while the latter contains content from outside the portal.

Search scopes provide a logical grouping of content sources for end users to search. For example, a company may have multiple internal file shares and websites. An employee looking for a specific document doesn’t care whether it’s in site A or file share B; they just know it’s out there. An administrator can create multiple content sources and group them together in a single search scope that the user can search against. In addition, search scopes can be configured to include only specific portions of a website, providing even more granular control over what content is indexed and searchable by your users.

The SharePoint gatherer is responsible for crawling all content sources, extracting content, removing noise words (common words such as ‘and’, ‘a’, ‘the’, and ‘or’; the noise-word files are customizable, so you can add your own), and creating the index files that are used when search queries are executed.

The gatherer is the part of the MSSearch service that crawls content and creates the index files. The service runs on schedules that you configure through the SharePoint Central Administration tool: at each scheduled time, MSSearch activates the gatherer, which generates a master index for search queries.
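The gatherer’s job of dropping noise words and building an index can be illustrated with a toy inverted index. This is not how MSSearch is implemented; it is a minimal Python sketch, with a hard-coded noise-word list standing in for SharePoint’s customizable noise-word files, showing why removing noise words keeps the index small and why lookups at query time are cheap.

```python
import re
from collections import defaultdict

# Illustrative noise words; SharePoint reads its own customizable noise-word files.
NOISE_WORDS = frozenset({"and", "a", "the", "or"})


def tokenize(text: str) -> list[str]:
    """Lower-case the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def build_index(documents: dict[str, str], noise_words=NOISE_WORDS) -> dict[str, set[str]]:
    """Map each non-noise word to the set of document URLs containing it."""
    index = defaultdict(set)
    for url, text in documents.items():
        for word in tokenize(text):
            if word not in noise_words:
                index[word].add(url)
    return dict(index)


def search(index: dict[str, set[str]], query: str, noise_words=NOISE_WORDS) -> set[str]:
    """Return URLs that contain every non-noise word in the query."""
    terms = [word for word in tokenize(query) if word not in noise_words]
    if not terms:
        return set()  # a query made only of noise words matches nothing
    results = set(index.get(terms[0], set()))
    for term in terms[1:]:
        results &= index.get(term, set())
    return results
```

Because the expensive work (tokenizing and filtering every document) happens at crawl time, a query only has to look up a few precomputed sets and intersect them.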

An end user uses a search scope to select a collection of content sources to query. SharePoint looks at the catalog containing the content sources and determines the best candidates that match the search query.
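The relationship between content sources, search scopes, and a query can be modeled in a few lines. The following Python sketch is a hypothetical data model, not SharePoint’s: a scope names the content sources it groups and, optionally, the URL prefixes it is limited to, and a query only considers catalog entries that fall inside the scope. The source names echo the “site A or file share B” example above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IndexedItem:
    """One crawled item in the catalog (simplified)."""
    source: str            # name of the content source that produced it
    url: str
    words: frozenset[str]  # indexed, noise-word-free terms


@dataclass(frozen=True)
class SearchScope:
    """A logical grouping of content sources, optionally limited to URL prefixes."""
    name: str
    sources: frozenset[str]
    url_prefixes: tuple[str, ...] = ()  # empty tuple means "the whole source"


def query_scope(catalog: list[IndexedItem], scope: SearchScope, term: str) -> list[str]:
    """Return sorted URLs in the scope's content sources that contain `term`."""
    term = term.lower()
    hits = []
    for item in catalog:
        if item.source not in scope.sources:
            continue  # content source not part of this scope
        if scope.url_prefixes and not item.url.startswith(scope.url_prefixes):
            continue  # outside the portions of the site the scope covers
        if term in item.words:
            hits.append(item.url)
    return sorted(hits)
```

The user never needs to know which source holds a document; choosing the scope is enough.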

Preparing the MCMS Site for Indexing

Before we can configure SharePoint to index our MCMS site, there are a few steps we need to take to make the indexing more efficient and useful.

First and foremost, check whether your site has the MCMS option Map Channel Names to Host Header Names set. If so, you’ll need to disable it, because one of the two options we have, using the MCMS Connector, does not support host header names.

Second, we’ll configure our site for guest access. The majority of our Tropical Green site is intended to be available to any anonymous visitor. Since we do have one restricted section, we will set up a new account with read access to our entire site for SharePoint to use as it crawls. Then we’ll filter the results to ensure that the user running the search sees only the items he or she has access to.

Next, we need to address how MCMS and output caching behave on requests for postings. The default page rendering behavior of MCMS does not help SPS crawl efficiently: because every MCMS request returns an HTTP status code of 200, SharePoint will always perform a full crawl of our site rather than an incremental one.

Finally, we’ll add to our templates a control, supplied with the MCMS Connector for SharePoint Technologies, that makes additional metadata properties available to the index crawler, giving users who search our site more information.

Disabling Channel Names to Host Header Names Mapping

The search controls shipped with the MCMS Connector do not support the host header mapping feature, so we cannot enable mapping of channel names to host header names. If your site uses this option, you’ll need to disable it. In addition, we should rename the top-level channel www.tropicalgreen.net to TropicalGreen, which is much more convenient because the channel name will now become part of the URL path.

The MCMS Connector for SharePoint Technologies requires the .NET Framework 1.1. It will not function properly on a site running version 1.0 of the .NET Framework.

This change may cause some User Controls in our site to throw errors, because they reference a channel path that no longer exists. Check the following files to make sure any references to /Channels/www.tropicalgreen.net/ are changed to /Channels/TropicalGreen/:

- /Login.aspx

- /UserControls/RightMenu.ascx

- /UserControls/SiteMapTree.ascx

- /UserControls/TopMenu.ascx
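On a larger project it is easy to miss a reference, so a small script can do the search for you. The following Python sketch is one way to do it, assuming the project files are UTF-8 (or plain ASCII) text; the file patterns (including code-behind .cs files), the function name, and the dry_run switch are illustrative choices rather than anything MCMS provides. Run it with dry_run=True first and review the list before letting it rewrite files.

```python
from pathlib import Path

OLD_PATH = "/Channels/www.tropicalgreen.net/"
NEW_PATH = "/Channels/TropicalGreen/"


def update_channel_references(root, patterns=("*.aspx", "*.ascx", "*.cs"), dry_run=True):
    """Return files under `root` that reference OLD_PATH; rewrite them unless dry_run.

    Assumes the project files are UTF-8 (or plain ASCII) text.
    """
    affected = []
    for pattern in patterns:
        for path in Path(root).rglob(pattern):
            # newline="" preserves the file's original CRLF/LF line endings.
            with open(path, encoding="utf-8", newline="") as f:
                text = f.read()
            if OLD_PATH not in text:
                continue
            affected.append(path)
            if not dry_run:
                with open(path, "w", encoding="utf-8", newline="") as f:
                    f.write(text.replace(OLD_PATH, NEW_PATH))
    return sorted(affected)
```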

You’ll probably want to add an additional file in the root of our website that automatically redirects users to our site’s channel. Call the file default.aspx, and it should contain the following line:

<% Response.Redirect("/TropicalGreen/") %>

Any requests for http://www.tropicalgreen.net will now be redirected to http://www.tropicalgreen.net/TropicalGreen/.

If your solution requires the Map Channel Names to Host Header Names feature, the MCMS Connector search solution will not be appropriate for your needs.

Assigning a Search Account

Our Tropical Green site has both a public section and a members-only section. If an anonymous user, or guest, executes a search, they should see only results from the public portion of the site. However, if an authenticated user executes a search query, they should see appropriate results from both the public and private portions of the site.

For SharePoint to index our entire site, including the members-only section, we need to create a new account that has access to the entire site. We’ll then configure SharePoint to use this account when indexing.
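Because the crawl account can see everything, the index will contain members-only postings, and the results must be trimmed before they are shown to a guest. The following Python sketch shows the idea with a simple path check. The members channel path is an assumption (the Members channel sits inside the Gardens channel under the renamed TropicalGreen channel), and a real implementation would more robustly ask MCMS whether the current user has rights to each posting rather than hard-coding a path.

```python
from urllib.parse import urlsplit

# Hypothetical path of the members-only channel, compared case-insensitively.
MEMBERS_ONLY_PREFIX = "/tropicalgreen/gardens/members/"


def is_members_only(url: str) -> bool:
    """True if the result URL points into the members-only channel."""
    return urlsplit(url).path.lower().startswith(MEMBERS_ONLY_PREFIX)


def trim_results(result_urls, is_authenticated: bool) -> list:
    """Guests see only public results; authenticated members see everything."""
    if is_authenticated:
        return list(result_urls)
    return [url for url in result_urls if not is_members_only(url)]
```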

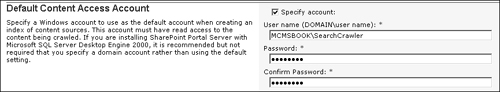

Let’s assume we have already created an account called MCMSBOOK\SearchCrawler. The first thing we need to do is configure SharePoint Portal Server to use this account when crawling content.

1. Start SharePoint Central Administration by pointing to Start | All Programs | SharePoint Portal Server | SharePoint Central Administration.

2. Under the section Server Configuration, click the Configure Server Farm Account Settings link.

3. Enter the search crawler account credentials in the Default Content Access Account section and click OK.

Now we need to grant our SearchCrawler account subscriber rights to the entire Tropical Green website.

We’re going to assume you have already installed the MCMS Connector for SharePoint Technologies, because its installer creates an MCMS Search subscriber group in Site Manager for use in searching your MCMS channel structure.

1. Start Site Manager by pointing to Start | All Programs | Microsoft Content Management Server | Site Manager.

2. Select the User Roles button on the left panel within Site Manager.

3. Select the Subscribers user role.

4. Then right-click the MCMS Search User Subscribers role and select Properties.

5. Click the Group Rights tab to view all the channels, templates, and resources the MCMS Search User role has rights to. All channels, templates, and resources should be checked.

6. Click the Group Members tab and click the Modify button.

7. Enter the MCMSBOOK\SearchCrawler user that we added above as the SharePoint crawl account and click OK.

8. Click OK again to close the property window.

We have now configured SharePoint to crawl our site using the dedicated account and granted the account access to all content within the Tropical Green site.

Enable Guest Access for Tropical Green

Because our site will be publicly available, we need to make sure that it doesn’t require visitors to log in. To allow guests to view our Tropical Green site, we need to enable guest access.

If you have already configured your site for guest access, you can skip this step.

To allow guests into our site, we need to:

1. Create a new MCMS Guest Account in the domain or as a local user on the server.

2. Using the Server Configuration Application (SCA), configure MCMS to allow guests and to use the account created in step 1 as the Guest Login Account.

3. Now that MCMS is configured to allow guests into the site, add the account created in step 1 to a subscriber rights group, and grant that rights group access to all channels, resource galleries, and template galleries that currently exist in the site, except for the Members channel located in the Gardens channel.

We’ve chosen to enable guest access to the site for simplicity. However, it is possible for SharePoint to index our site using Forms or Windows Authentication. For Forms Authentication, we would need to create a special home page that automatically logs the user in with a predefined account, so that SharePoint can gain access to the site and start its crawl. We would also have to add the SharePoint crawler account to the appropriate subscriber rights group.

If you choose to create an alternate home page to automatically log SharePoint into your site, keep in mind that any user could use this page to gain access to your site. Take special care if you use this method, for example by adding an IP address restriction to this page so that only the SharePoint server can access it.
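The IP restriction suggested above boils down to a single check made before the auto-login page does anything. Here is a minimal Python sketch of that check; the allowed address is a hypothetical placeholder from the documentation range, and in practice you may prefer to configure IIS’s own IP address restrictions for that single file rather than code the check yourself.

```python
import ipaddress

# Hypothetical address of the SharePoint index server (documentation range).
ALLOWED_NETWORKS = [ipaddress.ip_network("192.0.2.10/32")]


def may_use_auto_login(remote_addr: str) -> bool:
    """Allow the auto-login page only for requests from the SharePoint server."""
    try:
        addr = ipaddress.ip_address(remote_addr)
    except ValueError:
        return False  # malformed or missing address: deny
    return any(addr in network for network in ALLOWED_NETWORKS)
```

Failing closed on an unparseable address keeps the page locked down even if the client address is missing or malformed.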

Output Caching and Last-Modified Dates of Postings

By default, ASP.NET is not particularly sophisticated about HTTP caching: it simply returns an HTTP status code of 200 (OK) for every request and does not send a Last-Modified HTTP header.

When SharePoint Portal Server performs an incremental index crawl, it sends HTTP GET requests for every page it finds on the site. If a page has previously been indexed and returned a Last-Modified header, SharePoint Portal Server sends a conditional HTTP GET request that includes an If-Modified-Since HTTP header with the date previously returned in the Last-Modified HTTP header. If the response has HTTP status code 304 (Not Modified), SharePoint will not index the page again. However, because ASP.NET always returns status code 200, the site will never be incrementally crawled by SPS and will effectively undergo a full index every time the gatherer is executed. Because MCMS template files are actually a special kind of ASP.NET Web Form, this also affects postings based on these template files.
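The crawler side of this exchange can be modeled in a few lines of Python. This is a simplified illustration of the conditional-GET logic described above, not SPS’s gatherer code; fetch is a hypothetical stand-in for an HTTP client so the logic can be exercised without a server. It shows why a page that never sends Last-Modified is re-indexed on every pass.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Response:
    status: int
    headers: Dict[str, str] = field(default_factory=dict)


# fetch(url, request_headers) -> Response; a stand-in for a real HTTP client.
Fetch = Callable[[str, Dict[str, str]], Response]


def incremental_crawl(urls: List[str], fetch: Fetch, validators: Dict[str, str]) -> List[str]:
    """Return the URLs that had to be (re)indexed, updating `validators` in place.

    `validators` maps each URL to the Last-Modified value seen on the previous crawl.
    """
    reindexed = []
    for url in urls:
        request_headers = {}
        if url in validators:
            # Conditional GET: ask only for content changed since the last crawl.
            request_headers["If-Modified-Since"] = validators[url]
        response = fetch(url, request_headers)
        if response.status == 304:
            continue  # Not Modified: keep the existing index entry
        reindexed.append(url)
        last_modified = response.headers.get("Last-Modified")
        if last_modified:
            validators[url] = last_modified
        else:
            # No validator (the ASP.NET default): this URL is re-indexed every time.
            validators.pop(url, None)
    return reindexed
```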

One thing to consider is channel rendering scripts and postings that contain dynamic lists of links to other postings. Although these scripts and postings may not have changed since the last index, the content they generate can change from one request for the posting to the next. If this is content you want users to be able to search for, make sure that postings containing such controls are always indexed by not returning a Last-Modified HTTP header for them.
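On the server side, the decision a posting has to make is small: send Last-Modified, answer 304 when the crawler’s If-Modified-Since date is not older than the content, and skip the header entirely for pages with dynamic content. The following Python sketch shows that decision in isolation. It is language-neutral logic rather than ASP.NET code; the function name and the has_dynamic_content flag are hypothetical, and for an MCMS posting the last-modified value would presumably come from the posting’s own last-modified date.

```python
from datetime import datetime, timezone
from email.utils import format_datetime, parsedate_to_datetime
from typing import Dict, Optional, Tuple


def conditional_response(
    last_modified: datetime,
    if_modified_since: Optional[str],
    has_dynamic_content: bool = False,
) -> Tuple[int, Dict[str, str]]:
    """Decide the status code and headers for a posting request.

    `last_modified` must be a timezone-aware datetime.
    """
    if has_dynamic_content:
        # Omit Last-Modified so crawlers always fetch and re-index this page.
        return 200, {}

    # HTTP dates have one-second resolution, so drop microseconds before comparing.
    last_modified = last_modified.astimezone(timezone.utc).replace(microsecond=0)
    headers = {"Last-Modified": format_datetime(last_modified, usegmt=True)}

    if if_modified_since:
        try:
            since = parsedate_to_datetime(if_modified_since)
        except (TypeError, ValueError):
            since = None  # unparseable header: treat the request as unconditional
        if since is not None and since.tzinfo is not None and last_modified <= since:
            return 304, headers
    return 200, headers
```

Truncating to whole seconds matters: without it, a posting saved at 10:00:00.5 would always look newer than the 10:00:00 date the crawler echoes back, and would never earn a 304.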

The Connector SearchMetaTagGenerator Control

The last modification we need to do is to add a control that ships with MCMS Connector for SharePoint Technologies. The SearchMetaTagGenerator

outputs standard and/or custom page properties. In addition, we can use

it to control what properties are output and even add our own custom

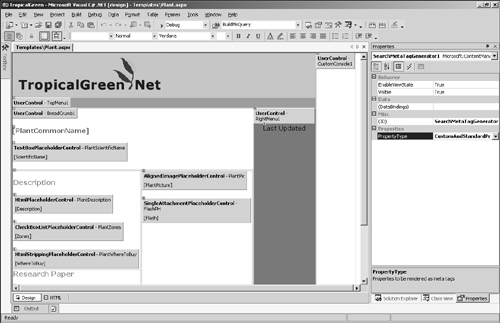

properties. Adding the SearchMetaTagGenerator control to your templates is very easy. Let’s add it to our Plant.aspx template:

1. Open the Plant.aspx file in Design view.

2. In the Toolbox, select the Content Management Server tab, and drag the SearchMetaTagGenerator to the top of our template.

If you don’t see the SearchMetaTagGenerator in the Toolbox and you’ve installed the MCMS Connector for SharePoint Technologies, right-click the Toolbox and select Add/Remove Items. In the Customize ToolBox dialog, click Browse, navigate to the Microsoft Content Management Server\Server\bin\ directory, and select the Microsoft.ContentManagement.SharePoint.WebControls.dll assembly. Finally, click OK in the Customize ToolBox dialog. You should now see the additional controls in your Toolbox.

3. Click the SearchMetaTagGenerator control we just added, and in the Properties window, select one of the following PropertyTypes:

- CustomProperties: Generates META tags for custom page properties.

- StandardProperties: Generates META tags for standard page properties (such as DisplayName, DisplayPath).

- CustomAndStandardProperties: Generates META tags for both custom and standard page properties (default).

- PropertiesFromXMLFile: Generates META tags for the properties specified in the SearchPropertyCollection.xml file. More on this in just a moment.


4. For now, let’s choose the CustomAndStandardProperties property type.

5. Because the Visual Studio .NET designer won’t allow us to drop controls into the <head></head> portion of the page, we need to move the declaration of the SearchMetaTagGenerator control from the body of the page to the head. Switch to HTML view, find the SearchMetaTagGenerator control we just added, and move it up between the <head> and </head> tags.

6. As with any change, we should now rebuild the Tropical Green project.

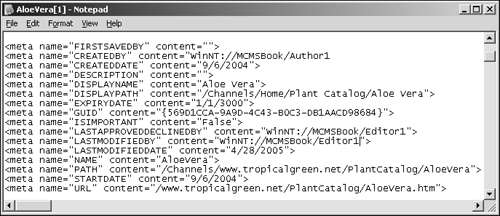

7. Open a browser, and navigate through the site to a plant posting in the plant catalog section of the site. Take a moment to view the source of the posting you navigate to. Notice all the extra META tags that have been added. Here’s an example:

Notice the FIRSTSAVEDBY property listed at the top of the META tags. This is a custom property that has been added to the posting. It is included in the META tags because we selected the CustomAndStandardProperties property type in the SearchMetaTagGenerator control. The other META tags are the standard properties generated by the SearchMetaTagGenerator control.

When rendered, any posting implemented with the Plant template will contain META tags in the <HEAD> portion of the page for each of the page’s custom properties and standard properties.
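The effect of the PropertyType setting can be summarized as a selection rule: which property sets end up in the <HEAD>. The following Python sketch models that rule for three of the values listed in step 3 (PropertiesFromXMLFile is left out). It is a plain-Python model, not the control’s code; the exact tag format the real control emits and the sample property values are assumptions, so compare against the source of one of your own postings.

```python
from enum import Enum
from html import escape


class PropertyType(Enum):
    """Three of the PropertyType values described above (PropertiesFromXMLFile omitted)."""
    CUSTOM_PROPERTIES = "CustomProperties"
    STANDARD_PROPERTIES = "StandardProperties"
    CUSTOM_AND_STANDARD_PROPERTIES = "CustomAndStandardProperties"


def render_meta_tags(property_type: PropertyType, standard: dict, custom: dict) -> str:
    """Return one META tag per selected property, custom properties first.

    Illustrative only: the real control's tag naming and formatting may differ.
    """
    selected = {}
    if property_type in (PropertyType.CUSTOM_PROPERTIES,
                         PropertyType.CUSTOM_AND_STANDARD_PROPERTIES):
        selected.update(custom)
    if property_type in (PropertyType.STANDARD_PROPERTIES,
                         PropertyType.CUSTOM_AND_STANDARD_PROPERTIES):
        selected.update(standard)
    # escape() also encodes double quotes, keeping the attribute values well formed.
    return "\n".join(
        f'<meta name="{escape(name)}" content="{escape(str(value))}">'
        for name, value in selected.items()
    )
```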

One of the values available to us in the PropertyType property is PropertiesFromXMLFile. This option allows us to specify exactly which properties will be exported as META tags, using an XML file located at Microsoft Content Management Server\Server\IIS_CMS\WssIntegration\SearchPropertyCollection.xml.

Once you have specified which properties you want to use, including any custom properties you’ve added, you need to tell SharePoint to index these properties during the crawl. The console application SearchPropertiesSetup.exe, included with the MCMS Connector, tells SharePoint about the updated XML file. Run it using the following syntax:

SearchPropertiesSetup.exe -file "<path to file>\SearchPropertyCollection.xml"

The SearchPropertiesSetup.exe utility can be found in the following location: <install drive>:\Program Files\MCMS 2002 Connector for SharePoint Technologies\WSS\bin\.

Go ahead and execute the SearchPropertiesSetup.exe utility as shown above, because our custom search solution will use one of the META tags generated by the SearchMetaTagGenerator control.

If you change the SearchPropertyCollection.xml file, you will need to re-execute the SearchPropertiesSetup.exe utility.
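Because the utility must be re-run whenever the XML file changes, it can help to script the call so it only happens when the file’s content actually differs from what was last registered. The following Python sketch does that. The tool path reproduces the location given above with an assumed C: drive; the stamp file that remembers the last registered hash is a hypothetical convention of this sketch, and treating a non-zero exit code as failure is also an assumption about the utility.

```python
import hashlib
import subprocess
from pathlib import Path, PureWindowsPath

# Default location named in the text; the C: drive letter is an assumption.
TOOL = PureWindowsPath(
    r"C:\Program Files\MCMS 2002 Connector for SharePoint Technologies"
    r"\WSS\bin\SearchPropertiesSetup.exe"
)


def build_setup_command(xml_file, tool=TOOL):
    """Argument list equivalent to the SearchPropertiesSetup.exe -file command shown above."""
    return [str(tool), "-file", str(xml_file)]


def register_if_changed(xml_file, stamp_file, tool=TOOL, runner=subprocess.run):
    """Re-run the utility only when the XML file's content hash has changed.

    `stamp_file` is a hypothetical file remembering the last registered hash.
    Returns True if the utility was run.
    """
    xml_file, stamp_file = Path(xml_file), Path(stamp_file)
    digest = hashlib.sha256(xml_file.read_bytes()).hexdigest()
    if stamp_file.exists() and stamp_file.read_text().strip() == digest:
        return False  # XML unchanged since the last successful registration
    # check=True makes subprocess.run raise CalledProcessError on a non-zero exit.
    runner(build_setup_command(xml_file, tool), check=True)
    stamp_file.write_text(digest)  # record the hash only after the run succeeds
    return True
```

Passing the runner in as a parameter keeps the change-detection logic testable on a machine without the Connector installed.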

The MCMS Connector for SharePoint Technologies includes a help file with instructions on how to modify the XML file. Be aware that a Microsoft Support Knowledge Base article addresses an error in the help file instructions: MSKB article #872932, "A problem occurs when you add the SearchMetaTagGenerator control to a template in Content Management Server 2002 Connector for SharePoint Technologies", contains corrected instructions.

Our Tropical Green site is now configured to allow guests to visit the site, our templates have been modified to be more SPS search friendly, and we have included additional metadata in the <HEAD> section of all our rendered postings. Let’s proceed to create a content source in SharePoint to index our site.