3.1.2. Distributing load over multiple controllers

Storage controller cards, along with various other components, act as intermediaries between the physical disks and the software requesting the data on the disks. Like other storage components, disk controllers have a maximum throughput capacity and are subject to failure. When you design a storage system for SQL Server, storage controller cards play a pivotal role from both performance and fault tolerance perspectives.

A guiding principle in achieving the best possible storage performance for SQL Server is to stripe data across many disks. With multiple disks, or spindles, in action, a read or write operation completes faster than it could on a single disk, because the I/O workload is spread across all of them. Striping data across multiple disks also slows the rate at which disk queues build: with more disks in action, the likelihood of a queue building for any single disk is reduced.
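To make the striping idea concrete, here is a minimal Python sketch of how a simple RAID 0 style stripe maps a logical byte offset to a disk and to an offset on that disk. The function name `locate`, the 64KB stripe unit, and the four-disk array are illustrative assumptions; real controllers, and RAID levels with parity such as RAID 5, use more involved layouts.

```python
def locate(offset: int, stripe_size: int, disk_count: int) -> tuple[int, int]:
    """Map a logical byte offset to (disk index, byte offset on that disk)
    for a simple round-robin (RAID 0 style) stripe. Hypothetical helper."""
    stripe_number = offset // stripe_size      # which stripe unit overall
    disk = stripe_number % disk_count          # stripe units rotate across disks
    row = stripe_number // disk_count          # full rows of stripe units before it
    return disk, row * stripe_size + offset % stripe_size


STRIPE = 64 * 1024   # assumed 64KB stripe unit
DISKS = 4            # assumed four-disk array

# A 256KB sequential read touches every disk once, so all four
# spindles can work on it in parallel.
touched = {locate(o, STRIPE, DISKS)[0] for o in range(0, 256 * 1024, STRIPE)}
print(sorted(touched))  # [0, 1, 2, 3]
```

The same rotation is what spreads many small random I/Os across the spindles, so no single disk's queue grows much faster than the others.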

When large numbers of disks are used, the storage bottleneck begins to move from the disks to the storage controllers that coordinate the disk reads and writes. More disks therefore require more storage controllers to avoid I/O bottlenecks. The ratio of disks to controllers is determined by various factors, including the nature of the I/O and the speed and bandwidth of the individual components. We discussed a technique for estimating disk and controller numbers in the previous chapter.
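The previous chapter's estimation technique isn't reproduced here. As a rough, hypothetical illustration of why the bottleneck shifts, the sketch below compares the aggregate throughput of a set of disks with the throughput a single controller can sustain. The helper name and all figures are assumed placeholders, not vendor specifications; substitute measured values for your own components.

```python
import math


def controllers_needed(disk_count: int, mb_per_disk: float,
                       mb_per_controller: float) -> int:
    """Smallest number of controllers whose combined throughput covers
    the aggregate throughput of the disks (hypothetical rule of thumb)."""
    aggregate = disk_count * mb_per_disk
    return math.ceil(aggregate / mb_per_controller)


# Assumed figures: 40 disks at 50MB/s each, behind controllers that
# can each move about 800MB/s.
print(controllers_needed(40, 50, 800))  # 3
```

This ignores I/O type (random I/O usually saturates disks long before controllers), which is exactly why the ratio depends on the nature of the I/O.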

I/O performance

When choosing a server, pay attention to the server's I/O capacity, measured by the number of supported PCI slots and the bus type. Modern servers use the PCI Express (PCI-E) bus, which is capable of transmitting up to 250MB/second per lane in each direction. An x4 PCI Express slot has four lanes, x8 has eight lanes, and so forth. A good server selection for SQL Server systems is one that supports multiple PCI-E slots. As an example, the HP ProLiant DL585 G2 has seven PCI-E slots, consisting of three x8 slots and four x4 slots, for a total of 40 lanes. Such a server could support up to seven controller cards driving a very high number of disks.
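The slot arithmetic for the DL585 G2 example can be checked in a few lines of Python. The per-lane figure is the 250MB/second quoted above (first-generation PCI-E, per direction), so the totals are theoretical ceilings; controllers, drivers, and protocol overhead deliver less in practice.

```python
PER_LANE_MB_S = 250  # first-generation PCI-E figure, per direction

# Slot layout from the example: three x8 slots and four x4 slots.
slots = [8] * 3 + [4] * 4

total_lanes = sum(slots)
per_slot_mb_s = [width * PER_LANE_MB_S for width in slots]

print(len(slots), total_lanes)         # 7 40
print(total_lanes * PER_LANE_MB_S)     # 10000 (MB/second, theoretical)
print(per_slot_mb_s)                   # [2000, 2000, 2000, 1000, 1000, 1000, 1000]
```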

Multipath for performance and tolerance

Depending on the storage system, a large number of components can be involved in the I/O path between the server and the disks. Disk controllers, cabling, and switches all play a part in connecting the disks to the server. Without redundancy built into each of these components, failure in any one component can cause a complete I/O failure.

Redundancy at the disk level is provided by RAID, as you learned in the previous chapter. To ensure redundancy along the path to the disks, multiple controller cards and multipathing software are used.

Multipathing software intelligently reroutes disk I/O across an alternate path when a component failure invalidates one of the paths. To do this, multiple disk controllers or HBA cards must be present and, ideally, connected to the storage system via separate switches and cabling.

Microsoft provides support for multipathing on the Windows Server platform (and therefore SQL Server) via Microsoft Multipath I/O (MPIO) drivers. Using MPIO, storage vendors provide reliable multipathing solutions for Windows Server platforms. MPIO solutions are available for a variety of storage systems, including Fibre Channel and iSCSI SANs and parallel SCSI.

The real value of multipathing software is that, when all disk paths are working, it also increases disk performance by balancing load across the available paths; the solution thus serves both fault tolerance and performance at the same time.
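The two behaviors described above, load balancing across healthy paths and rerouting around a failed one, can be illustrated with a small hypothetical Python sketch. It is not how MPIO or any vendor's multipathing module is implemented; the `MultipathSelector` class and the path names are illustrative only, and round-robin is just one possible load-balancing policy.

```python
from itertools import cycle


class MultipathSelector:
    """Round-robin over I/O paths, skipping any path marked as failed."""

    def __init__(self, paths):
        self.paths = list(paths)
        self.failed = set()
        self._ring = cycle(self.paths)

    def mark_failed(self, path):
        self.failed.add(path)

    def mark_restored(self, path):
        self.failed.discard(path)

    def next_path(self):
        # Visit each path at most once per call before giving up.
        for _ in range(len(self.paths)):
            path = next(self._ring)
            if path not in self.failed:
                return path
        raise RuntimeError("complete I/O failure: no healthy paths")


mp = MultipathSelector(["HBA1-switchA", "HBA2-switchB"])
print([mp.next_path() for _ in range(4)])  # alternates between both paths
mp.mark_failed("HBA1-switchA")
print([mp.next_path() for _ in range(3)])  # all I/O rerouted to HBA2-switchB
```

With both paths healthy, I/O alternates between them (the performance benefit); with one path down, the same selector keeps I/O flowing on the survivor (the fault tolerance benefit).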

Separate controllers

Transaction log bottlenecks increase transaction duration, which has a flow-on effect that causes numerous other performance problems. One way of preventing this is to store the transaction log on dedicated, RAID-protected disks, optionally connected to a dedicated disk controller channel or a separate controller card.

Using multiple controller cards and multipathing software helps to increase disk performance and therefore reduce the impact of the most common hardware bottleneck. Another means of improving disk performance is through the use of storage cache.

3.1.3. Configuring storage cache

Battery-backed cache

Disk controller cache improves performance for both reads and writes. When data is read from the disk, if the requested data is already stored in the controller cache, then physical reads of the disk aren't required. In a similar fashion, when data is written to disk, it can be written to cache and applied to disk at a later point, thus increasing write performance.
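A toy Python model of the behavior just described may help: reads are served from cache when possible, and writes are acknowledged once they reach the cache and hardened to disk later. The `ControllerCache` class and its counters are hypothetical; it ignores cache capacity, eviction, and write ordering, all of which real controllers must handle. Note that the `dirty` set is exactly the data that exists only in cache until it is flushed.

```python
class ControllerCache:
    """Toy write-back cache in front of a 'disk' (a dict of block -> bytes).
    Hypothetical model that counts physical I/Os to show what the cache saves."""

    def __init__(self, disk):
        self.disk = disk
        self.cache = {}          # block number -> data
        self.dirty = set()       # blocks written to cache but not yet to disk
        self.physical_reads = 0
        self.physical_writes = 0

    def read(self, block):
        if block in self.cache:              # cache hit: no disk access
            return self.cache[block]
        self.physical_reads += 1             # cache miss: read from disk
        data = self.disk[block]
        self.cache[block] = data
        return data

    def write(self, block, data):
        self.cache[block] = data             # acknowledged once it is in cache
        self.dirty.add(block)

    def flush(self):
        for block in sorted(self.dirty):     # applied to disk at a later point
            self.disk[block] = self.cache[block]
            self.physical_writes += 1
        self.dirty.clear()


disk = {n: b"old" for n in range(4)}
c = ControllerCache(disk)
c.read(1)
c.read(1)                      # second read is served from cache
c.write(2, b"new")
c.write(2, b"newer")           # overwrites the cached copy; still no disk write
print(c.physical_reads, c.physical_writes, disk[2])  # 1 0 b'old'
c.flush()
print(c.physical_writes, disk[2])                    # 1 b'newer'
```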

The most critical aspect of disk controller cache is that it must be battery backed. This ensures that power failures don't cause data in the cache to be lost. Even if the server includes a UPS, which is recommended, disk controller cache must be battery backed.

Read vs. write cache

It's important to make the distinction between read cache and write cache. SQL Server itself has a large cache stored in the server's RAM where, among other things, it caches data read from disk. In most cases, the server's RAM is likely to be much larger (and cheaper) than the disk controller cache; therefore, read performance gains attributable to storage cache are likely to be quite small, and in some cases performance can actually be worse due to the double caching involved.

The real value of disk controller cache is the write cache. Write cache is particularly useful for improving disk performance during bursts of write activity, such as checkpoints, during which large numbers of writes are sent to disk. In these circumstances, a large write cache can increase performance. The controller commits the writes to cache, which is much faster than disk, and hardens the writes to disk at a later point. As long as the controller cache is battery backed, this is a safe, high-performance technique.

Depending on the controller card or SAN, you may be able to configure the percentage of cache used for reads and writes. For SQL Server systems, reserving a larger percentage of cache for writes is likely to result in better I/O performance.
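As a worked example, assume a controller with 512MB of cache and 75 percent of it reserved for writes (both figures are illustrative, not recommendations). The hypothetical `cache_split` helper below computes the resulting split and how many 8KB SQL Server pages the write portion could absorb during a burst such as a checkpoint.

```python
def cache_split(cache_mb: int, write_pct: int) -> tuple[int, int]:
    """Return (read_mb, write_mb) for a given write percentage (hypothetical helper)."""
    write_mb = cache_mb * write_pct // 100
    return cache_mb - write_mb, write_mb


PAGE_KB = 8  # SQL Server page size

read_mb, write_mb = cache_split(512, 75)   # assumed 512MB cache, 75% for writes
pages = write_mb * 1024 // PAGE_KB         # 8KB pages the write cache can hold
print(read_mb, write_mb, pages)            # 128 384 49152
```

Once a burst exceeds the write portion, further writes proceed at roughly disk speed, which is why a larger write share tends to help write-heavy SQL Server workloads.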

The quantity and read/write ratio of storage cache can make a significant difference to overall storage performance. One of the common methods of validating different settings prior to deploying SQL Server is to use the SQLIO tool, discussed next.

3.1.4. Validating disk storage performance and integrity

Before a system is production ready, you must conduct a number of performance tests to ensure the system will perform according to expectations. The primary test is to load the system with the expected transaction profile and measure the response times against the service level agreements.

Prior to these tests, you'll need to carry out several system-level tests. One of the most important involves testing the storage system for capacity and integrity. This section focuses on two important tools, SQLIO and SQLIOSIM, both of which you can download for free from the Microsoft website. Links to both of these tools are available at sqlCrunch.com/storage.

SQLIO

SQLIO is a tool used to measure the I/O performance capacity of a storage system. Run from the command line, SQLIO takes a number of parameters that are used to generate I/O of a particular type. At the completion of the test, SQLIO returns various capacity statistics, including I/Os per second (IOPS), throughput in MB/second, and latency: three key characteristics of a storage system.

The real value of SQLIO lies in using it prior to the installation of SQL Server to measure the effectiveness of various storage configurations, such as stripe size, RAID levels, and so forth. In addition to identifying the optimal storage configuration, SQLIO often exposes various hardware and driver/firmware-related issues, which are much easier to fix before SQL Server is installed and in use. Further, the statistics returned by SQLIO give concrete meaning to descriptions of storage performance; a system perceived as slow can be put into context by comparing its results with those of similar storage systems.

Despite the name, SQLIO doesn't simulate SQL Server I/O patterns; that's the role of SQLIOSIM, discussed in a moment. SQLIO is used purely to measure a system's I/O capacity. As shown in table 1, SQLIO takes several parameters that determine the type of I/O generated.

Table 1. Commonly used SQLIO parameters

| SQLIO option | Description |
|---|---|
| -t | Number of threads |
| -o | Number of outstanding I/O requests (queue depth) |
| -LS | Records disk latency information |
| -kR | Generates read activity |
| -kW | Generates write activity |
| -s | Duration of test in seconds |
| -b | I/O size in KB |
| -frandom | Generates random I/O |
| -fsequential | Generates sequential I/O |
| -F | Config file containing test paths |

The configuration file specified with the -F parameter contains the file paths to be used by SQLIO for the test. For example, let's say we want to test a LUN exposed to Windows as the T: drive. The contents of the configuration file for this test would look something like this:

```
T:\sqlio_test_file.dat 8 0x0 1000
```

The additional parameters on this line specify the number of threads to use against the file (8 in this example), a CPU affinity mask (0x0 here), and the file size in MB (1000, roughly 1GB).
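Because tests are usually repeated against several LUNs, it can help to generate configuration files rather than edit them by hand. The Python sketch below writes one file per LUN using the field order of the example line (path, thread count, mask, size), with the size assumed to be in MB. The `U:` drive, the `config_lunN.txt` file names, and the `config_line` helper are hypothetical; check the documentation shipped with SQLIO for the exact format your version expects.

```python
from pathlib import Path


def config_line(path: str, threads: int, mask: str, size_mb: int) -> str:
    """One SQLIO config-file line: path, thread count, mask, size in MB."""
    return f"{path} {threads} {mask} {size_mb}"


# Hypothetical LUNs; adjust to the drives being tested.
luns = ["T:\\sqlio_test_file.dat", "U:\\sqlio_test_file.dat"]

# One config file per LUN, written to the current directory, so each
# file path can be tested on its own.
for i, lun in enumerate(luns, start=1):
    Path(f"config_lun{i}.txt").write_text(config_line(lun, 8, "0x0", 1000) + "\n")

print(config_line(luns[0], 8, "0x0", 1000))  # T:\sqlio_test_file.dat 8 0x0 1000
```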

Before we look at an example, let's run through some general recommendations:

- The file size and test duration should be sufficient to exhaust the cache of the storage system. Some systems, particularly SANs, have a very large storage cache, so a short test with small files is likely to be served from the cache, obscuring the real I/O performance.
- Tests should be run multiple times, once for each file path. For instance, to test the capacity of four LUNs, run four tests, one for each LUN specified in the configuration file (using the -F parameter). Once each file path has been tested individually, consider additional tests with combinations of file paths specified in the configuration file.
- Ensure the tests run for a reasonable length of time (at least 10–15 minutes), and allow time between test runs for the storage system to return to an idle state.
- Record the SQLIO results with each change made to the storage configuration. This enables the effectiveness of each change to be measured.
- Run tests with a variety of I/O types (sequential vs. random) and sizes. For systems used predominantly for OLTP purposes, random I/O should be used for most tests, but sequential I/O testing is still important for backups, table scans, and so forth. In contrast, sequential I/O testing should form the main testing for OLAP systems.
- If possible, provide the results of the tests to the storage vendor for validation, or have the vendor present during the tests. As the experts in their own products, they should be able to validate and interpret the results and offer guidance on configuration settings and/or driver and firmware versions that can be used to increase overall performance.
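The recommendations above translate naturally into a test matrix. The Python sketch below builds SQLIO command lines from the options in table 1 for every combination of configuration file, read/write, random/sequential, and two I/O sizes, after a simple guard that the test file is larger than the storage cache. The helper names, the config file names, the 4GB cache, the 16GB file size, and the thread and queue-depth values are all hypothetical assumptions; it only prints the commands rather than running them.

```python
import itertools


def sqlio_command(kind: str, pattern: str, block_kb: int, seconds: int,
                  threads: int, outstanding: int, config: str) -> str:
    """Build a SQLIO command line using the options from table 1."""
    op = {"read": "-kR", "write": "-kW"}[kind]
    return (f"sqlio {op} -t{threads} -s{seconds} -o{outstanding} "
            f"-f{pattern} -b{block_kb} -LS -F{config}")


def check_file_exceeds_cache(file_mb: int, cache_mb: int) -> None:
    """Guard against a test file small enough to be served from cache."""
    if file_mb <= cache_mb:
        raise ValueError(f"{file_mb}MB test file fits in {cache_mb}MB cache")


# Assumed values: a SAN with 4GB of cache and a 16GB test file per LUN.
check_file_exceeds_cache(file_mb=16_384, cache_mb=4_096)

configs = ["config_lun1.txt", "config_lun2.txt"]   # hypothetical, one LUN each
matrix = itertools.product(configs, ["read", "write"],
                           ["random", "sequential"], [8, 64])

for config, kind, pattern, block_kb in matrix:
    # 900 seconds = 15 minutes per run. When running these, leave a pause
    # between runs so the storage system can return to an idle state.
    print(sqlio_command(kind, pattern, block_kb, 900, 2, 8, config))
```

Recording the output of each command alongside the storage settings in use at the time gives the before/after comparison the list recommends.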

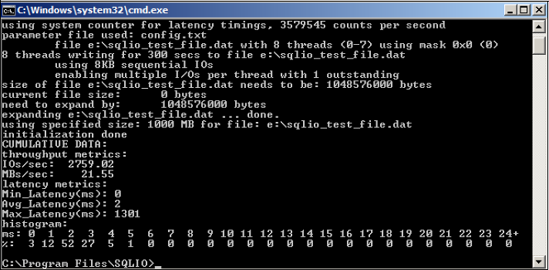

Let's look at an example of running SQLIO to simulate 8K sequential writes for 5 minutes:

```
sqlio -kW -t1 -s300 -o1 -fsequential -b8 -LS -Fconfig.txt
```

In this case, the config.txt file contains a path specification to a 1GB file located at e:\sqlio_test_file.dat. You can see the results of this test in figure 5.

As the results show, we achieved about 2,759 IOPS and 21.55 MB/second throughput with low average latency (2ms) but a high peak latency (1,301ms). On their own, these results don't mean a lot. In a real-world case, the tests would be repeated several times for different I/O types and storage configurations, ideally in the presence of the storage vendor, who would assist in storage configuration and capacity validation.
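A quick sanity check on results like these is that throughput should equal IOPS multiplied by the I/O size. The sketch below applies that to the figures reported above (8KB writes from `-b8`), assuming MB means 1,024KB; the reported numbers are consistent with that assumption. The helper name is hypothetical.

```python
def throughput_mb_s(iops: float, io_size_kb: float) -> float:
    """Throughput implied by an IOPS figure at a fixed I/O size (1MB = 1,024KB)."""
    return iops * io_size_kb / 1024


# Figures from the SQLIO run above: 8KB writes at about 2,759 IOPS.
print(round(throughput_mb_s(2759, 8), 2))  # 21.55
```

If a run's reported throughput doesn't match this product, the I/O size or test parameters may not be what you intended.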

Achieving good I/O capacity, throughput, and latency is all well and good, but that's not enough if the storage components don't honor the I/O requirements of SQL Server. The SQLIOSIM tool, discussed next, can be used to verify the integrity of the storage system and its suitability for SQL Server.

SQLIOSIM

Unlike SQLIO, SQLIOSIM is a storage verification tool that issues disk reads and writes using the same I/O patterns as SQL Server. SQLIOSIM uses checksums to verify the integrity of the written data pages.
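The principle behind checksum verification can be sketched in Python: store a checksum with each page when it is written, then recompute and compare it when the page is read back. This sketch uses `zlib.crc32` purely for illustration; it is not SQL Server's page checksum algorithm, and placing the checksum in the first four bytes of an 8KB page is an assumption of the sketch, not the SQL Server page format.

```python
import os
import zlib

PAGE_SIZE = 8192  # 8KB, matching SQL Server's page size
HEADER = 4        # first 4 bytes of each page hold the checksum (sketch only)


def make_page(payload: bytes) -> bytes:
    """Build a page whose header stores a CRC32 of the rest of the page."""
    body = payload.ljust(PAGE_SIZE - HEADER, b"\0")
    return zlib.crc32(body).to_bytes(4, "little") + body


def page_is_intact(page: bytes) -> bool:
    """Recompute the checksum on read and compare it with the stored value."""
    stored = int.from_bytes(page[:HEADER], "little")
    return len(page) == PAGE_SIZE and zlib.crc32(page[HEADER:]) == stored


page = make_page(os.urandom(100))
print(page_is_intact(page))               # True

# Simulate a component in the I/O chain flipping a single bit.
damaged = bytearray(page)
damaged[5000] ^= 0x01
print(page_is_intact(bytes(damaged)))     # False
```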

Most SQL Server systems involve a large number of components in the I/O chain. The operating system, I/O drivers, virus scanners, storage controllers, read cache, write cache, switches, and various other items all pass data to and from SQL Server. SQLIOSIM is used to validate that none of these components alters the data in any adverse or unexpected way.

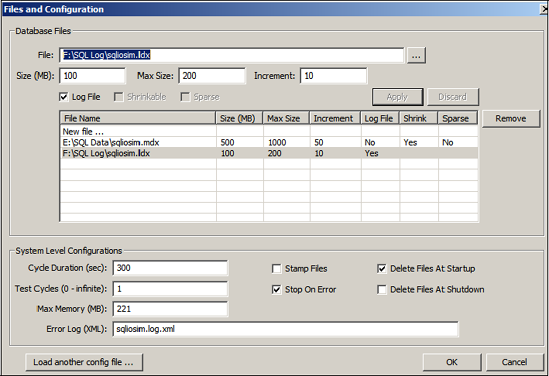

As you can see in figure 6, SQLIOSIM can be configured with various file locations and sizes along with test durations. The output and results of the tests are written to an XML file, which you specify in the Error Log (XML) text box.

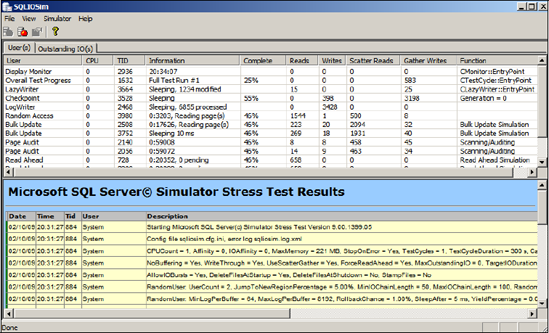

During execution, the test progress is displayed to the screen, as shown in figure 7, with the final results captured in the XML log file you specified.

SQLIOSIM verifies that SQL Server I/O patterns (covered in later chapters), such as random and sequential reads and writes, backups, checkpoints, lazy writer, bulk update, read ahead, and shrink/expand, all conform to SQL Server's I/O requirements. Together with SQLIO, this tool provides peace of mind that the storage system is both valid and able to perform to expectations.

Let's turn our attention now from disk configuration to another major system component: CPU.