.5. Changing configuration and dynamically scaling your application

One of the golden promises of cloud computing is the dynamic allocation of resources to your service. It’s really cool that you can deploy a service from nothing to 20 servers, but you also want to be able to grow from 20 servers to 30 servers if your service experiences a spike in traffic.

Changing the Service Configuration File

Your service is based on a service model that’s defined in your service configuration file. Among other settings, this file defines how many instances of each role your service runs. You can change this file in one of three ways.

The first way to change the file is to edit it online in the portal. This is the simplest way to change it, but it’s also the most primitive. You can’t wire this up into an automated system or into an enterprise configuration management system.

The second method lets you use configuration files generated by your enterprise configuration management system. You can upload a new file into BLOB storage (version the files with different names so you can keep track of them) and then point to that file when you change the configuration from the portal.
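If your configuration management system generates these files, the change it makes most often for scaling is the instance count. The Python sketch below assumes the usual .cscfg shape (a `ServiceConfiguration` root holding one `Role` element per role, each with an `Instances` child whose `count` attribute sets the number of instances). The sample namespace URI, the role name `WebRole`, and the timestamped file-naming scheme are illustrative assumptions, so check them against your own file before relying on them.

```python
import xml.etree.ElementTree as ET
from datetime import datetime

# Illustrative .cscfg content; the service name, role name, and namespace are placeholders.
SAMPLE_CSCFG = """<ServiceConfiguration serviceName="ninja_daytime"
    xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration">
  <Role name="WebRole">
    <Instances count="20" />
  </Role>
</ServiceConfiguration>"""


def local_name(tag: str) -> str:
    """Strip a '{namespace}' prefix from an ElementTree tag."""
    return tag.rsplit("}", 1)[-1]


def set_instance_count(cscfg_xml: str, role_name: str, count: int) -> str:
    """Return a copy of the configuration with one role's instance count changed."""
    if count < 1:
        raise ValueError("a role needs at least one instance")
    root = ET.fromstring(cscfg_xml)
    # Write the file's own namespace back out as the default (no 'ns0:' prefixes).
    if root.tag.startswith("{"):
        ET.register_namespace("", root.tag[1:].split("}", 1)[0])
    for element in root.iter():
        if local_name(element.tag) == "Role" and element.get("name") == role_name:
            for child in element:
                if local_name(child.tag) == "Instances":
                    child.set("count", str(count))
                    return ET.tostring(root, encoding="unicode")
    raise KeyError(f"no Instances element for role {role_name!r}")


def versioned_file_name(service: str, when: datetime) -> str:
    """Hypothetical naming scheme: one timestamped file per change, easy to sort and track."""
    return f"{service}_{when:%Y%m%d_%H%M%S}.cscfg"
```

For example, `set_instance_count(SAMPLE_CSCFG, "WebRole", 30)` returns the same document with the count set to 30, which you could save under a name from `versioned_file_name` and upload to BLOB storage.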

The third option lets you upload a new configuration through the service management REST API. If you’d rather not call the REST API directly, you can use csmanage, as we’ve done in the past few sections.

The fabric controller (FC) responds in different ways depending on how you’ve changed your configuration and how you’ve coded your RoleEnvironmentChanging event handler. By default, if you’ve changed anything besides the instance count of a role, the FC tears down and restarts the instances of the affected role. That part of the service comes to a screeching halt while the instances restart. The outage isn’t long, but it’s there. Think about which changes should restart your roles and which shouldn’t, and adjust the code in your RoleEnvironmentChanging event handler accordingly.
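The decision your RoleEnvironmentChanging handler makes can be modeled in a few lines. In the .NET SDK you handle the `RoleEnvironment.Changing` event and set `e.Cancel = true` to have the instance restarted. The Python below is only a language-neutral model of that choice; the change classes and the setting names in it are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable

# Hypothetical settings this role can pick up at runtime without restarting.
HOT_RELOADABLE_SETTINGS = frozenset({"LogLevel", "PollingIntervalSeconds"})


@dataclass(frozen=True)
class SettingChange:
    """Models a changed configuration setting."""
    name: str


@dataclass(frozen=True)
class TopologyChange:
    """Models a change in role membership, such as a new instance count."""
    role: str


def needs_restart(changes: Iterable[object]) -> bool:
    """Return True if any change can't be applied to the running instance."""
    for change in changes:
        if isinstance(change, SettingChange):
            if change.name not in HOT_RELOADABLE_SETTINGS:
                return True
        elif isinstance(change, TopologyChange):
            # Instance-count changes leave existing instances running.
            continue
        else:
            # Unknown kinds of change: restart to be safe.
            return True
    return False
```

Keeping the list of hot-reloadable settings explicit means a new or unexpected setting defaults to a restart, which is the safer way to fail.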

If you’ve increased the instance count, none of the existing instances are affected. The FC creates new instances, deploys your bits, configures them, and wires them into the network and load balancer, just as it does when you start role instances from scratch. This process can take several minutes, so the new capacity isn’t available immediately; be patient. Take this delay into account when you develop the logic you’ll use to detect when you need to add instances.
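One way to account for that provisioning delay is a cooldown: after changing the instance count, don’t make another scaling decision until the new instances have had time to come online. The sketch below assumes a six-minute cooldown (a four-minute provisioning estimate plus a two-minute margin); both numbers are hypothetical and should come from timing your own deployments.

```python
from datetime import datetime, timedelta
from typing import Optional

# Hypothetical timings: measure how long your own roles take to come online.
PROVISIONING_DELAY = timedelta(minutes=4)
SAFETY_MARGIN = timedelta(minutes=2)


def can_scale_again(last_change: Optional[datetime], now: datetime,
                    cooldown: timedelta = PROVISIONING_DELAY + SAFETY_MARGIN) -> bool:
    """Return True if enough time has passed since the last instance-count change."""
    if last_change is None:
        return True  # never scaled before, so nothing is still spinning up
    return now - last_change >= cooldown
```

Without a cooldown, the logic would keep seeing a busy system while the first batch of new instances is still starting, and it would request more instances than it needs.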

If you’ve reduced the number of instances, the FC uses an undocumented algorithm to decide which instances to shed. They’re unwired from the load balancer so that they don’t receive any new traffic, and then they’re torn down.

After you’ve made changes to the configuration file, you have to deploy it.

Deploying the New Configuration File

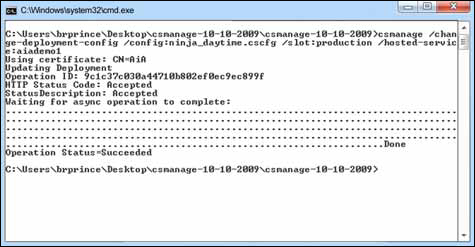

You can use csmanage to deploy a new configuration file. The command should be fairly easy to understand by now. The following command assumes the .cscfg file is in the same folder as csmanage. Your file probably isn’t, so you’ll need to provide a path to it.

csmanage /change-deployment-config /config:ninja_daytime.cscfg /slot:production /hosted-service:aiademo1

When you’re using the management API (and csmanage), you have to upload a locally stored configuration file. In figure 8, you can see that it took quite a while for the management service to spin up the new instance: about 4 minutes in total.
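If you script deployments, it helps to build the command from parameters and pass a full path, since your .cscfg file probably doesn’t live next to csmanage. The switches below are the ones in the command shown above; the wrapper functions are hypothetical, and any other csmanage options your environment needs (such as subscription or certificate settings) aren’t shown.

```python
import subprocess
from pathlib import Path
from typing import List


def change_config_command(config_path: str, slot: str, hosted_service: str,
                          exe: str = "csmanage") -> List[str]:
    """Build the csmanage argument list for a /change-deployment-config call."""
    if slot not in ("production", "staging"):
        raise ValueError("slot must be 'production' or 'staging'")
    # A full path lets the configuration file live anywhere on disk.
    full_path = str(Path(config_path).resolve())
    return [
        exe,
        "/change-deployment-config",
        f"/config:{full_path}",
        f"/slot:{slot}",
        f"/hosted-service:{hosted_service}",
    ]


def deploy_config(config_path: str, slot: str, hosted_service: str) -> None:
    """Run csmanage; raises CalledProcessError if it exits with a nonzero status."""
    subprocess.run(change_config_command(config_path, slot, hosted_service), check=True)
```

Passing an argument list instead of a single string lets `subprocess` handle quoting, so a path containing spaces still reaches csmanage as one argument.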

This might seem like a lot of work. Couldn’t Azure do all this for you automatically?

Why Doesn’t Azure Scale Automatically?

Many people wonder why Azure doesn’t auto-scale for them. There are several reasons, but they all fall into the “My kid sent 100,000 text messages this month and my cell phone bill is over a million dollars” category.

The first challenge in having Azure auto-scale your application is that Azure doesn’t know what it means for your system to be busy. Is it the depth of a queue? Which queue? Is it the number of hits on the site? Every system defines busy differently, and there are too many moving parts for a vendor such as Microsoft to come up with a standard definition that’ll make all its customers happy.

Another reason is that Microsoft could be accused of scaling up too aggressively, and not scaling down fast enough, just to increase the charges on your account. We don’t think Microsoft would do that, but the moment a customer decided the algorithm wasn’t tuned to their liking, Microsoft could be sued for overbilling.

Another scenario this approach protects against is a denial of service (DoS) attack, in which someone floods your servers with an unusually high number of requests. The requests overwhelm your servers’ processing power, and the whole system grinds to a halt. If Azure automatically scaled up in this scenario, you could come in on Monday after a weekend attack and find 5,000 instances running in production. You’d earn enough mileage points on your credit card to fly to the moon and back for free, but you probably wouldn’t be too happy about it.
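Your own scaling logic can guard against that runaway scenario with a hard ceiling and a limit on how far any single adjustment can move. The sketch below assumes bounds of 2 to 30 instances and a step of 5; those defaults and the function names are hypothetical.

```python
def clamp_instances(requested: int, minimum: int = 2, maximum: int = 30) -> int:
    """Keep a requested instance count inside [minimum, maximum]."""
    if minimum < 1 or maximum < minimum:
        raise ValueError("need 1 <= minimum <= maximum")
    return max(minimum, min(requested, maximum))


def next_instance_count(current: int, requested: int, minimum: int = 2,
                        maximum: int = 30, max_step: int = 5) -> int:
    """Move toward the requested count without passing the bounds or moving more than max_step."""
    bounded = clamp_instances(requested, minimum, maximum)
    if bounded > current:
        return min(bounded, current + max_step)
    if bounded < current:
        return max(bounded, current - max_step)
    return current
```

During an attack, this caps the cost at the ceiling you chose, and the step limit gives you time to notice that something unusual is going on before the count reaches it.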

Microsoft has given us the tools to manage scaling ourselves. We can adjust the target number of instances at any time in a variety of ways. All we have to add is the logic that determines what busy means for us and how we want to handle both busy and slow periods.
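As one example of supplying that logic, the sketch below defines busy as the queue backlog per instance, one of the candidate definitions raised earlier in this section. The thresholds, bounds, and the `ScalingPolicy` name are hypothetical. Reading the actual queue depth, and applying the result through one of the three configuration-change methods, is left out.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class ScalingPolicy:
    """Hypothetical policy: 'busy' means too many queued messages per instance."""
    busy_per_instance: int = 100   # above this backlog per instance, add capacity
    slow_per_instance: int = 20    # below this backlog per instance, shed capacity
    minimum: int = 2
    maximum: int = 30

    def target_instances(self, queue_depth: int, current: int) -> int:
        """Return the instance count this policy wants for the observed queue depth."""
        per_instance = queue_depth / max(current, 1)
        if per_instance > self.busy_per_instance:
            # Busy: add enough instances that each one's share is at most the busy threshold.
            wanted = math.ceil(queue_depth / self.busy_per_instance)
        elif per_instance < self.slow_per_instance:
            # Slow: shed one instance at a time rather than overreacting to a lull.
            wanted = current - 1
        else:
            # Between the thresholds: leave the count alone.
            wanted = current
        return max(self.minimum, min(wanted, self.maximum))
```

The gap between the two thresholds keeps the count from oscillating when the backlog hovers near a single cut-off, and shedding one instance at a time reflects the fact that scaling back up later costs several minutes.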